Tech

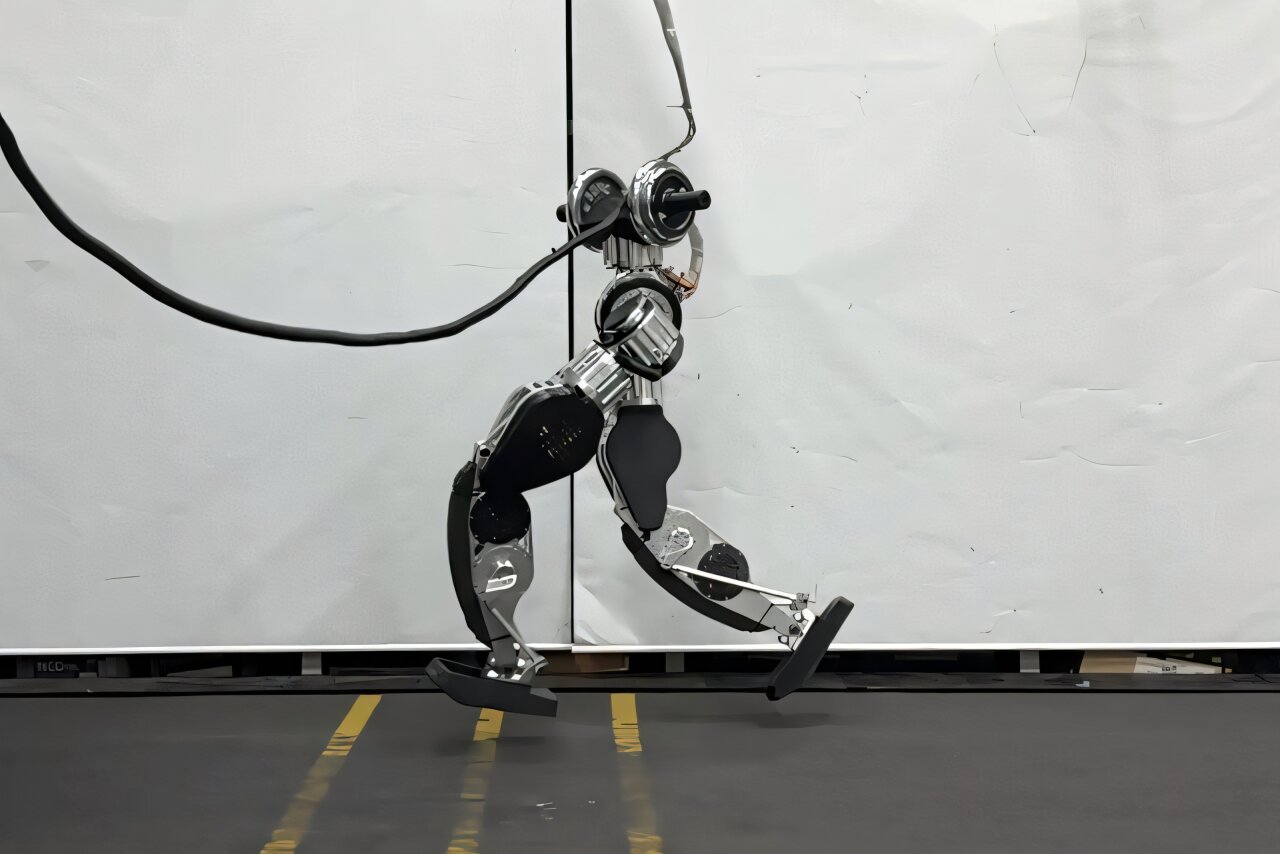

Next-generation humanoid robot can do the moonwalk

A humanoid robot independently developed by a KAIST research team boasts world-class running performance, reaching speeds of 12 km/h, along with excellent stability: it keeps its balance on rough terrain and even with its “eyes closed” (without relying on vision). It can also perform complex, distinctly human movements such as the duckwalk and moonwalk, drawing attention as a next-generation robot platform for real industrial settings.

Professor Hae-Won Park’s research team at the Humanoid Robot Research Center (HuboLab) of KAIST’s Department of Mechanical Engineering developed the lower-body platform for a next-generation humanoid robot. The humanoid is designed for human-centric environments, with a target height (165 cm) and weight (75 kg) similar to those of a human.

The new lower-body platform is significant because the research team designed and manufactured all of its core components in-house, including the motors, reducers, and motor drivers. By building the key components that determine a humanoid’s performance with their own technology, they have achieved technological independence on the hardware side.

The team also trained an AI controller in a virtual environment using a self-developed reinforcement learning algorithm, then transferred it to the real robot by overcoming the sim-to-real gap (the mismatch between simulated and real-world behavior). This secures technological independence on the algorithm side as well.

Currently, the humanoid can run at a maximum speed of 3.25 m/s (approximately 12 km/h) on flat ground and can climb steps more than 30 cm high (step-climbing capability indicates how high a curb, stair, or obstacle the robot can overcome). The team plans to push performance further, targeting a running speed of 4.0 m/s (approximately 14 km/h), ladder climbing, and step-climbing of over 40 cm.
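The rounded km/h figures follow from the standard conversion 1 m/s = 3.6 km/h; the worked arithmetic is:

```latex
\begin{aligned}
1\ \mathrm{m/s} &= \frac{3600\ \mathrm{m/h}}{1000\ \mathrm{m/km}} = 3.6\ \mathrm{km/h}\\
3.25\ \mathrm{m/s} \times 3.6 &= 11.7\ \mathrm{km/h} \approx 12\ \mathrm{km/h}\\
4.0\ \mathrm{m/s} \times 3.6 &= 14.4\ \mathrm{km/h} \approx 14\ \mathrm{km/h}
\end{aligned}
```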

Professor Hae-Won Park’s team is collaborating with Professor Jae-min Hwangbo’s team (arms) from KAIST’s Department of Mechanical Engineering, Professor Sangbae Kim’s team (hands) from MIT, Professor Hyun Myung’s team (localization and navigation) from KAIST’s Department of Electrical Engineering, and Professor Jae-hwan Lim’s team (vision-based manipulation intelligence) from KAIST’s Kim Jaechul AI Graduate School to build a complete humanoid with an upper body and AI.

Through this collaboration, they are developing technology that lets the robot perform complex tasks such as carrying heavy objects; operating valves, cranks, and door handles; and walking while manipulating objects, as when pushing carts or climbing ladders. The ultimate goal is to give the robot versatile physical abilities that meet the complex demands of real industrial sites.

Along the way, the research team also developed a single-leg “hopping” robot. It can keep its balance on one leg while hopping repeatedly, and it has even performed extreme athletic feats such as a 360-degree somersault.

Because no biological reference model exists for a one-legged hopper, imitation learning was not possible. The team instead trained the controller with reinforcement learning, using rewards that optimize center-of-mass velocity while reducing landing impact.
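The article describes the reward only at a high level. As a minimal sketch, assuming a per-step reward that combines a center-of-mass velocity-tracking term with a penalty on excessive landing force, it might look like the following. The function name `hopping_reward`, the exponential tracking shape, the weights, and the 500 N force limit are all hypothetical illustrations, not the formulation used by Kang et al.

```python
import math
from typing import Sequence


def hopping_reward(com_velocity: Sequence[float],
                   target_com_velocity: Sequence[float],
                   peak_contact_force: float,
                   w_vel: float = 1.0,
                   w_impact: float = 0.01,
                   force_limit: float = 500.0) -> float:
    """Hypothetical reward: track a target center-of-mass velocity, penalize hard landings.

    All weights and the force limit are illustrative assumptions, not values from the paper.
    """
    # Squared error between actual and target center-of-mass velocity (m/s)
    sq_err = sum((v - t) ** 2 for v, t in zip(com_velocity, target_com_velocity))
    # Tracking term in (0, 1]: equals 1.0 only when the velocities match exactly
    tracking = math.exp(-w_vel * sq_err)
    # Impact penalty: only the part of the peak contact force above the assumed limit (N) counts
    impact_penalty = w_impact * max(0.0, peak_contact_force - force_limit)
    return tracking - impact_penalty


# Perfect velocity tracking and a soft landing (300 N, below the assumed limit)
print(hopping_reward([1.0, 0.0, 2.5], [1.0, 0.0, 2.5], 300.0))
```

Squashing the tracking error through `exp` keeps that term bounded in (0, 1], which makes its scale easy to balance against the impact penalty.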

Professor Hae-Won Park said, “This achievement is an important milestone: by securing core components and AI controllers with our own technology, we have achieved independence in both the hardware and software aspects of humanoid research.

“We will further develop it into a complete humanoid, including an upper body, to meet the complex demands of actual industrial sites, and ultimately foster it as a next-generation robot that can work alongside humans.”

JongHun Choe, a Ph.D. candidate in Mechanical Engineering, will present the hardware development results as first author at Humanoids 2025, an international conference specializing in humanoid robots, on October 1.

In addition, Ph.D. candidates Dongyun Kang, Gijeong Kim, and JongHun Choe, also from Mechanical Engineering, will present the AI algorithm results as co-first authors at CoRL 2025, the top conference in robot intelligence, on September 29.

The presentation papers are available on the arXiv preprint server.

More information:

Dongyun Kang et al., Learning Impact-Rich Rotational Maneuvers via Centroidal Velocity Rewards and Sim-to-Real Techniques: A One-Leg Hopper Flip Case Study, arXiv (2025). DOI: 10.48550/arxiv.2505.12222

JongHun Choe et al., Design of a 3-DOF Hopping Robot with an Optimized Gearbox: An Intermediate Platform Toward Bipedal Robots, arXiv (2025). DOI: 10.48550/arxiv.2505.12231

Citation:

Next-generation humanoid robot can do the moonwalk (2025, September 24) retrieved 24 September 2025 from https://techxplore.com/news/2025-09-generation-humanoid-robot-moonwalk.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.


The Best Presidents’ Day Deals on Gear We’ve Actually Tested

Presidents’ Day Deals have officially landed, and there’s a lot of stuff to sift through. We cross-referenced our myriad buying guides and reviews to find the products we’d recommend that are actually on sale for a truly good price. We know because we checked! Find highlights below, and keep in mind that most of these deals end on February 17.

Be sure to check out our roundup of the Best Presidents’ Day Mattress Sales for discounts on beds, bedding, bed frames, and other sleep accessories. We have even more deals here for your browsing pleasure.

WIRED Featured Deals

Branch Ergonomic Chair Pro for $449 ($50 off)

The Branch Ergonomic Chair Pro is our very favorite office chair, and this price matches the lowest we tend to see outside of major shopping events like Black Friday and Cyber Monday. It’s accessibly priced compared to other chairs, and it checks all the boxes for quality, comfort, and ergonomics. Nearly every element is adjustable, so you can dial in the perfect fit, and the seven-year warranty is solid. There are 14 finishes to choose from.


Zillow Has Gone Wild—for AI

This will not be a banner year for the real estate app Zillow. “We describe the home market as bouncing along the bottom,” CEO Jeremy Wacksman said in our conversation this week. Last year was dismal for the real estate market, and he expects things to improve only marginally in 2026. (If January’s historic drop in home sales is indicative, even that is overoptimistic.) “The way to think about it is that there were 4.1 million existing homes sold last year—a normal market is 5.5 to 6 million,” Wacksman says. He hastens to add that Zillow itself is doing better than the real estate industry overall. Still, its valuation is a quarter of its high-water mark in 2021. A few hours after we spoke, Wacksman announced that Zillow’s earnings had increased last quarter. Nonetheless, Zillow’s stock price fell nearly 5 percent the next day.

Wacksman does see a bright spot: AI. As for every other company in the world, generative AI presents both an opportunity and a risk to Zillow’s business. Wacksman much prefers to dwell on the upside. “We think AI is actually an ingredient rather than a threat,” he said on the earnings call. “In the last couple years, the LLM revolution has really opened all of our eyes to what’s possible,” he tells me. Zillow is integrating AI into every aspect of its business, from the way it showcases houses to having agents automate its workflow. Wacksman marvels that with Gen AI, you can search for “homes near my kid’s new school, with a fenced-in yard, under $3,000 a month.” On the other hand, his customers might wind up making those same queries on chatbots operated by OpenAI and Google, and Wacksman must figure out how to make their next step a jump to Zillow.

In its 20-year history—Zillow celebrated the anniversary this week—the company has always used AI. Wacksman, who joined in 2009 and became CEO in 2024, notes that machine learning is the engine behind those “Zestimates” that gauge a home’s worth at any given moment. Zestimates became a viral sensation that helped make the app irresistible, and sites like Zillow Gone Wild—which is also a TV show on the HGTV network—have built a business around highlighting the most intriguing or bizarre listings.

More recently, Zillow has spent billions aggressively pursuing new technology. One ongoing effort is upleveling the presentation of homes for sale. A feature called SkyTour uses an AI technology called Gaussian Splatting to turn drone footage into a 3D rendering of the property. (I love typing the words “Gaussian Splatting” and can’t believe an indie band hasn’t adopted it yet.) AI also powers a feature inside Zillow’s Showcase component called Virtual Staging, which supplies homes with furniture that doesn’t really exist. There is risky ground here: Once you abandon the authenticity of an actual photo, the question arises whether you’re actually seeing a trustworthy representation of the property. “It’s important that both buyer and seller understand the line between Virtual Staging and the reality of a photo,” says Wacksman. “A virtually staged image has to be clearly watermarked and disclosed.” He says he’s confident that licensed professionals will abide by the rules, but as AI becomes dominant, “we have to evolve those rules,” he says.

Right now, Zillow estimates that only a single-digit percentage of its users take advantage of these exotic display features. Particularly disappointing is a foray called Zillow Immerse, which runs on the Apple Vision Pro. Upon rollout in February 2024, Zillow called it “the future of home tours.” Note that it doesn’t claim to be the near future. “That platform hasn’t yet come to broad consumer prominence,” says Wacksman of Apple’s underperforming innovation. “I do think that VR and AR are going to come.”

Zillow is on more solid ground using AI to make its own workforce more productive. “It’s helping us do our job better,” says Wacksman, who adds that programmers are churning out more code, customer support tasks have been automated, and design teams have shortened timelines for implementing new products. As a result, he says, Zillow has been able to keep its headcount “relatively flat.” (Zillow did cut some jobs recently, but Wacksman says that involved “a handful of folks that were not meeting a performance bar.”)


Do Waterproof Sneakers Keep the Slosh In or Out? Let WIRED Explain

Running with wet feet, in wet socks, in wet shoes is the perfect recipe for blisters. It’s also a fast track to low morale. Nothing dampens spirits quicker than soaked socks. On ultra runs, I always carry spares. And when faced with wet, or even snowy, mid-winter miles, the lure of weatherproof shoes is strong. Anything that can stem the soggy tide is worth a go, right?

This isn’t as simple an answer as it sounds. In the past, a lot of runners—that includes me—felt waterproof shoes came with too many trade-offs, like thicker, heavier uppers that change the feel of your shoes or a tendency to run hot and sweaty. In general, weatherproof shoes are less comfortable.

But waterproofing technology has evolved, and it might be time for a rethink. Winterized shoes can now be as light as the regular models, breathability is better, and the comfort levels have improved. Brands are also starting to add extra puddle protection to some of the most popular shoes. So it’s time to ask the questions again: Just how much difference does a bit of Gore-Tex really make? Are there still trade-offs for that extra protection? And is it really worth paying the premium?

I spoke to the waterproofing pros, an elite ultra runner who has braved brutal conditions, and some expert running shoe testers. Here’s everything you need to know about waterproof running shoes in 2026. Need more information? Check out our guide to the Best Running Shoes, our guide to weatherproof fabrics, and our guide to the Best Rain Jackets.


How Do Waterproof Running Shoes Work?

On a basic level, waterproof shoes add extra barriers between your nice dry socks and the wet world outside. If you’re running through puddles deep enough to breach your heel collars, you’re still going to get wet feet. But waterproof shoes can protect against rain, wet grass, snow, and smaller puddles.

Gore-Tex is probably the most common waterproofing tech in footwear, but it’s not the only solution in town. Some brands have proprietary tech, or you might come across alternative systems like eVent and Sympatex. That GTX stamp is definitely the one you’re most likely to encounter, so here’s how GTX works.

The water resistance comes from a layered system: a durable water repellent (DWR) coating on the uppers plus an internal membrane, along with details such as taped seams, better-sealed uppers made of more tightly woven mesh, gusseted tongues, and higher, gaiter-style heel collars.
