Tech

Silicon Valley Billionaires Panic Over California’s Proposed Wealth Tax

Did California lose Larry Page? The Google and Alphabet cofounder, who left day-to-day operations in 2019, has seen his net worth soar in the years since, from around $50 billion at the time of his departure to roughly $260 billion today. (Leaving his job clearly didn’t hurt his wallet.) Last year, a proposed ballot initiative in California threatened billionaires like Page with a one-time 5 percent wealth tax, prompting some of them to consider leaving the state before the end of the year, when the tax, if passed, would retroactively kick in. Page seems to have been one of those defectors; The Wall Street Journal reported that he recently spent more than $170 million on two homes in Miami. The article also indicated that his cofounder Sergey Brin might become a Florida man.

The Google guys, formerly California icons, are only two of approximately 250 billionaires subject to the plan. It’s not certain whether many of them have departed for Florida, Texas, New Zealand, or a space station. But it is clear that a lot of vocal billionaires and other super rich people are publicly losing their minds about the proposal, which will appear on the November ballot if it garners around 875,000 signatures. Hedge fund magnate Bill Ackman calls it “catastrophic.” Elon Musk, the world’s richest man, boasted that he already pays plenty of taxes, so much so that, he claims, his tax return one year broke the IRS computer.

Still, when considered as a percentage of income, even the big sums paid by some billionaires are way lower than the tax rates many teachers, accountants, and plumbers pay every year. If Musk, currently worth an estimated $716 billion, had to pay a 5 percent wealth tax, he’d probably manage to scrape by with a $680 billion nest egg—enough to buy Ford, General Motors, Toyota, and Mercedes, and still remain the world’s richest person. (In any case, he’s safe from California taxes; a few years ago he moved to Texas.)

California’s politicians, including Governor Gavin Newsom, are generally opposed to the initiative. A glaring exception is Representative Ro Khanna, who said to WIRED in a statement that he’s on board with “a modest wealth tax on billionaires to deal with staggering inequality and to make sure people have healthcare.”

Khanna might pay a price for taking on the wealthy and may face a primary challenge backed by oligarch bucks because of it. A safer position for Bay Area politicians is the one taken by San Jose mayor Matt Mahan. He recently posted a tweet stream opposing the initiative, saying that if California passed the wealth tax it would be cutting off its nose to spite its face. When I speak to Mahan, he emphasizes the risk of California standing alone in taxing the net worth of billionaires. “It puts at risk our innovation economy that is the real engine of economic growth and opportunity,” he says. (Mahan isn’t super rich, but he is billionaire-adjacent: He once was CEO of a company cofounded by former Facebook president Sean Parker.)

Because of the mobility of rich people, California does have real worries about the impact of a state wealth tax. Not being a billionaire myself, I find the idea baffling: moving away from one’s ideal home simply to avoid a tax that has no impact on one’s living situation seems, to use Mahan’s words, like cutting off your nose to spite your face.

Also, I don’t see why an exodus of billionaires necessarily means the end of Silicon Valley as the heart of tech innovation. If you want to become a billionaire, there’s no place better than the Bay Area, with an ecosystem that nurtures innovative businesses. That’s not changing. A few years ago, some tech people moved to Miami, claiming it was going to become the new Silicon Valley. That didn’t happen.

Tech

Everyone Speaks Incel Now

At the beginning of the year, The Cut kicked off a brief discourse cycle by declaring a new lifestyle trend: “friction-maxxing.”

The idea, in a nutshell, is that people have overconvenienced themselves with apps, AI, and other means of near-instant gratification—and would be better off with increased friction in their daily lives, which is to say those mundane challenges that ask some minor effort of them.

Whatever your feelings on that philosophy, the use of “maxxing” as a suffix assumed to be familiar or at least intelligible to most readers of a mainstream news outlet is evidence of another trend: the assimilation of incel terminology across the broader internet. The online ecosystem of incels, or “involuntarily celibate” men, is saturated with this sort of clinical jargon; its aggrieved participants insulate, isolate, and identify themselves through in-group codespeak that is meant to baffle and repel outsiders. So how did non-incels (“normies,” as incels would label them) end up adopting and recontextualizing these loaded words?

Slang, no matter its origins, has a viral nature. It tends to break containment and mutate. The buzzword “woke,” as it pertains to our current politics, comes from African American Vernacular English and once referred to an awareness of racial and social injustice—this usage dates to the middle of the 20th century, preceding even the civil rights movement. But the culture wars of this century have turned “woke” into a favorite pejorative of right-wingers, who wield it as a catchall term for anything that threatens their ideology, such as Black pilots or gender-neutral pronouns.

Back in 2014, the eruption of the Gamergate harassment campaign set the stage for a different linguistic realignment. An organized backlash to women working in the video game industry, and eventually any sort of diversity or progressivism within the medium, it exposed a vein of reactionary anger that would gain a fuller voice during Donald Trump’s 2016 presidential campaign. This was a period when many in the digital mainstream got their first taste of the trollish nihilism and invective that fuel toxic message boards such as 4chan and that gave rise to a network of anti-feminist manosphere sites collectively known as the “PSL” community: PUAHate (a board for venting about pickup artists, it was shut down soon after the 2014 Isla Vista killing spree carried out by Elliot Rodger, who frequented the forum), SlutHate (a straightforward misogyny hub), and Lookism (where incels viciously critique each other’s appearance).

Lookism, named for the idea that prejudice against the less attractive is as common and pernicious as sexism or racism, is the only forum of the PSL trifecta that survives today, and while we don’t know who coined the “maxxing” idiom, it’s the likeliest source for the first verb with this construction. “Looksmaxxing,” which borrows from the role-playing game concept of “min-maxing,” or elevating a character’s strengths while limiting weaknesses, became the preferred expression for attempts to improve one’s appearance in pursuit of sex. This could mean something as simple as a style makeover or as extreme as “bonesmashing,” a supposed technique of achieving a more defined jaw by tapping it with a hammer.

If the 2000s introduced people to pickup lingo like “game” and “negging,” the 2010s ushered in language that extended the Darwinian vision of the dating pool as a cutthroat and strictly hierarchical marketplace. “AMOG,” an initialism for “alpha male of the group,” gave us “mogging,” a display where one man flexes his physical superiority over a rival. An ideally masculine specimen might also be recognized as a “Chad,” who allegedly enjoys his pick of attractive partners, while a Chad among Chads is, of course, a “Gigachad.” Women were disparaged as “female humanoids,” then “femoids,” and finally just “foids.”

Tech

OpenClaw Users Are Allegedly Bypassing Anti-Bot Systems

In San Francisco, it feels like OpenClaw is everywhere. Even, potentially, some places it’s not designed to be. According to posts on social media, people appear to be using the viral AI tool to scrape websites and access information, even when those sites have taken explicit anti-bot measures.

One of the ways they are allegedly doing this is through an open source tool called Scrapling, which is designed to bypass anti-bot systems like Cloudflare Turnstile. While Scrapling, which was built with Python, works with multiple types of AI agents, OpenClaw users appear to be particularly fond of the software. On Monday, viral posts promoting Scrapling as a tool for OpenClaw users started to spread on X. Since its release, Scrapling has been downloaded over 200,000 times.

“No bot detection. No selector maintenance. No Cloudflare nightmares,” reads one viral post this week about the open source tool. “OpenClaw tells Scrapling what to extract. Scrapling handles the stealth.”

Cloudflare is not enthused. The company already blocked previous versions of Scrapling, since users of the open source software kept trying to get around anti-scraping protections. This week, the company was working on a patch for Scrapling’s most recent iteration. “We make changes, and then they make changes,” says Dane Knecht, chief technology officer at Cloudflare. He says the company’s trove of website data and its ability to track trends has given it the upper hand.

“We already had a signal that they’re starting to get a higher ability to get around us,” says Knecht. “The team of security operations engineers had already been working on a new set of mediations.”

Large language models were trained on the corpus of the internet—and the process involved a lot of scraping. In some sense, Scrapling users are following in the footsteps of the original model builders, but on a more individualized scale.

Over the past few years, website owners have attempted to put up additional anti-bot protections, either to block software like Scrapling or to find a way to make money off of the bots trying to access their sites. In turn, Cloudflare has been working overtime to keep blocking increasingly powerful bots attempting to get around these protections.

In July 2024, Cloudflare started to offer its customers additional tools that block AI crawlers, unless the bots pay for access. In less than a year, the company claims to have blocked 416 billion unsolicited scraping attempts.

“I Didn’t Know What I was Getting Into”

As Scrapling gained traction in recent days, crypto enthusiasts capitalized on the attention by launching a $Scrapling memecoin. Karim Shoair, who claims to be the sole developer of Scrapling, posted about the memecoin on X (those posts have since been deleted). After the price skyrocketed for around five hours, $Scrapling quickly fell off a cliff as users sold off their stakes. “Bunch of fucking scammers,” reads one comment on the Pump.Fun site that hosts the coin.

“I didn’t know what I was getting into when people made that coin and I endorsed it,” says Shoair, in a direct message with WIRED. “But once I knew, I didn’t want any association with it and the money I withdrew before will go to charity, I won’t benefit from it in anyway. Or maybe just leave it to be wasted.”

In the fallout of this event, the unofficial GitHub Projects Community account, which has over 300,000 followers on X, deleted its posts from this week highlighting Scrapling’s open source software, and appeared to distance itself from the project. “We do not support, promote, or engage in crypto assets, token offerings, trading activity, or crypto-based fundraising,” it said in a post late Monday night.

Putting the crypto forays aside, most software leaders continue to see agents and autonomous AI tools as the future of the web. Even Knecht from Cloudflare, whose work includes blocking bots from nonconsensual scraping, wants to build toward a world where humans and agents benefit from online data and the wishes of website owners are respected. “I see a path forward for an internet that is both friendly to agents and humans,” he says.

This is an edition of Will Knight’s AI Lab newsletter.

Tech

The AirPods Pro 3 Are $20 Off

Looking for a new pair of earbuds to pair with your favorite iPhone or iPad? Right now, you can grab the Apple AirPods Pro 3 for just $229 on Amazon or Best Buy, a $20 break from their usual price. They’re our favorite wireless headphones for iPhone owners, with great noise canceling, easy connectivity, and unique features like heart rate monitoring and live translation.

The active noise canceling on the third-generation AirPods Pro has improved a great deal, with our reviewer Parker Hall comparing them to the Bose QuietComfort Ultra 2 Earbuds when it comes to filtering out all but the loudest, highest-frequency noises. The improved ear tips, now lined with foam, are more comfortable and fit better in smaller ears, with four different sizes to choose from. They also have better sound isolation, which noticeably improves both noise canceling and transparency mode performance.

While Android owners have a variety of choices when it comes to earbuds and headphones, iOS users will appreciate the extra features specifically built for anyone in the Apple ecosystem. If you’re into running with minimal devices, the AirPods Pro 3 can actually take your heart rate through your ears, a neat trick that we found surprisingly consistent with other fitness trackers. Another unique feature, live translation, will bring up the Translate app on iOS and relay what someone else is saying directly into your ears in your own language. Once again, we were impressed by how fast and accurate the system was, and as more languages are added it will become even more useful.

We really only had two minor complaints about the AirPods Pro 3, one of which was that the default EQ is a bit V-shaped, with a slightly overdone bass that’s either really appealing or slightly grating. Thankfully you can tweak your EQ in Spotify or Apple Music to dial in that experience. The other issue is that these have limited compatibility with Android devices, so if you’re on a Samsung or Pixel, you’ll want to check out our other favorite earbuds. For iPhone and iPad owners looking for the latest and greatest for their listening experience, the discounted AirPods Pro 3 are an excellent choice.

-

Entertainment1 week ago

Entertainment1 week agoQueen Camilla reveals her sister’s connection to Princess Diana

-

Tech1 week ago

Tech1 week agoRakuten Mobile proposal selected for Jaxa space strategy | Computer Weekly

-

Politics1 week ago

Politics1 week agoRamadan moon sighted in Saudi Arabia, other Gulf countries

-

Entertainment1 week ago

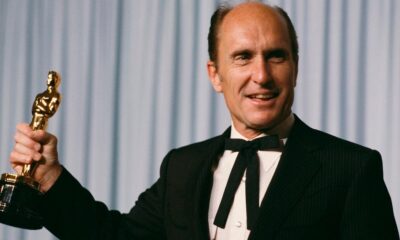

Entertainment1 week agoRobert Duvall, known for his roles in "The Godfather" and "Apocalypse Now," dies at 95

-

Business1 week ago

Business1 week agoTax Saving FD: This Simple Investment Can Help You Earn And Save More

-

Politics1 week ago

Politics1 week agoTarique Rahman Takes Oath as Bangladesh’s Prime Minister Following Decisive BNP Triumph

-

Tech1 week ago

Tech1 week agoBusinesses may be caught by government proposals to restrict VPN use | Computer Weekly

-

Fashion1 week ago

Fashion1 week agoAustralia’s GDP projected to grow 2.1% in 2026: IMF