Tech

The Instagram iPad App Is Finally Here

Apple debuted the iconic and now wildly popular iPad in 2010. A few months later, Instagram landed on the App Store to rapid success. But for 15 years, Instagram hasn’t bothered to optimize its app layout for the iPad’s larger screen.

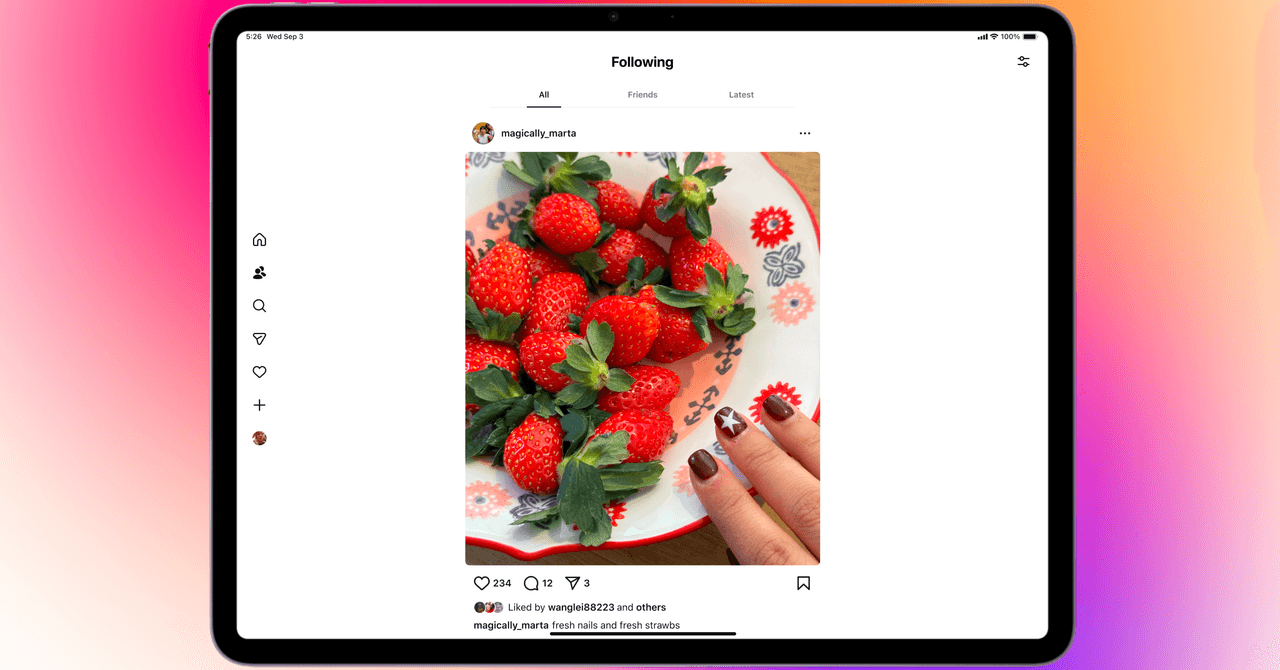

That’s finally changing today: There’s now a dedicated Instagram iPad app available globally on the App Store.

It has been a long time coming. Even before Apple split its mobile operating system into iOS and iPadOS, countless apps adopted a fresh user interface that embraced the larger screen size of the tablet. This was the iPad’s calling card at the time, and those native apps optimized for its precise screen size are what made Apple’s device stand out from a sea of Android tablets that largely ran phone apps inelegantly blown up to fit the bigger screen.

Except Instagram never went iPad-native. Open the existing app right now, and you’ll see the same phone app stretched to the iPad’s screen size, with awkward gaps on the sides. You’ll also run into occasional problems when you post photos from the iPad, like low-resolution images. Weirdly, Instagram did introduce layout improvements for folding phones a few years ago, which means the experience is better optimized on Android tablets today than it is on iPad.

Instagram’s chief, Adam Mosseri, has long offered excuses, often citing a lack of resources despite being a part of Meta, a multibillion-dollar company. Instagram wasn’t the only offender—Meta promised a WhatsApp iPad app in 2023 and only delivered it earlier this year. (WhatsApp made its debut on phones in 2009.)

The fresh iPad app (which runs on iPadOS 15.1 or later) offers more than just a facelift. Yes, the Instagram app now takes up the entire screen, but the company says users will drop straight into Reels, the short-form video platform it introduced five years ago to compete with TikTok. The Stories module remains at the top, and you’ll be able to hop into different tabs via the menu icons on the left. There’s a new Following tab (the people icon right below the home icon), and this is a dedicated section to see the latest posts from people you actually follow.

Tech

This Unique Air Fryer Cooks Your Food in Heat-Proof Glass—It’s on Sale Right Now

Want to be the hero of your next Super Bowl party? Check out the Ninja Crispi Portable Glass Air Fryer, an intriguing twist on the air fryer that’s a perfect option for air frying your favorite frozen snacks at your next potluck—it’ll help you win the day by frying up a batch of wings at your buddy’s house. It’s currently marked down as low as $150 on Amazon, depending on your color preference.

Basically, every other air fryer works by circulating hot air through a purpose-built, egg-like basket. But the genius of the Crispi is that its glass fryer tray doubles as a serving tray and a sealable fryer dish. The heating element and fan live in a hat-like unit with clamps that attach to heat-shock-resistant borosilicate glass frying baskets with ceramic-coated trays inside. While the actual “fry” setting can be a little intense, the “bake” setting works perfectly for softer foods like veggies. Either way, the results spoke for themselves in our testing, with crispy fries and nuggets even outside in freezing temperatures.

Our reviewer Matthew Korfhage found the “recrisp” setting particularly useful for bringing leftover pizza slices and noodle dishes back to life. That makes it a great choice for office lunchtimes, where you can leave the Crispi itself in a drawer at your desk and ferry leftovers from home. You only have to wash a normal glass dish, rather than the entire fry basket like most options, so this would also work well for dorms or even camping, if there’s an outlet nearby.

Of course, you’ll have to make a few compromises in order to take your precious air-fried snacks with you on the go. While the glass container does a surprisingly good job of insulating the food during cooking, the temperature range isn’t quite as exact as some of our other favorite air fryers.

You’ll also have to be a little bit flexible on your aesthetic choices. While the pastel-hued Cherry Crush, Frosted Lilac, and Ginger Snap colors are marked down to the lower $150 price, the less pronounced Sage, Stone, and Cyberspace Gray are slightly higher at $160, but still under the usual $180 price tag. There’s also a Racing Green Bundle that includes all three sizes of glass crisping tray for $190, if you think you’ll end up buying them anyway.

Tech

All-Clad Is the Expensive Gold Standard. The Factory Seconds Sale Makes It More Affordable

All-Clad deals used to be difficult to find, but thankfully, the Factory Seconds Sale has come back around for a little while. These sales tend to last only a few days (this one expires at midnight tomorrow, January 21), though they are sometimes extended. In any case, these sales offer a reliable way to score a solid deal on All-Clad kitchenware, which is normally very expensive. We love and swear by All-Clad, as do many professional chefs.

Factory Seconds are products with minor imperfections that still perform as intended. Sometimes an item is “second quality,” meaning it might have some blemishes or dents. Sometimes an item just has packaging damage. Every product page lists the exact reason for the “Factory Seconds” designation, as well as its warranty; most items are backed by All-Clad’s lifetime warranty. Note that you’ll need to enter your email to access the sale, and flat-rate shipping adds $10. Orders ship in 10 to 15 business days. We’ve highlighted our favorite deals below.

Make sure to check out our related buying guides, including the Best Chef’s Knives, Best Meal Kit Subscriptions, and Best Coffee Makers.

Best All-Clad Factory Seconds Deals

We include this pan at every possible opportunity when it’s on sale because it’s such a solid kitchen companion. Many WIRED Reviews team members have it in their kitchens. The shape allows you to make a pan sauce or sear up some steaks. The high walls prevent grease splatter, and you can use it like a wok or Dutch oven in addition to a regular ol’ pan. It’s dishwasher-safe for easy cleanup.

This roaster is a staple in my kitchen during the colder months of the year. It’s safe to use in the oven and under the broiler at up to 600 degrees Fahrenheit, and it has enough room for roasting meats or vegetables in large portions (it can hold up to a 20-pound turkey). You can also transfer it to the stovetop to whip up a quick sauce with the roasted drippings. The manufacturer recommends hand-washing.

This hard-anodized nonstick pan is versatile enough to make just about anything. Eggs, vegetables, a pan sauce, and stir-fries are all contenders. It’s made with a PTFE coating. Make sure not to get it too hot, and use nonstick-safe tools and hand-wash it to preserve that coating for as long as you can.

This nonstick pot has a PTFE coating and therefore should be hand-washed. It can be used to simmer, stew, or steam thanks to its tall sides and included lid. It also comes with a steamer basket for all of your vegetable and/or dumpling needs. The pieces nest together for easier storage.

So technically, this thing isn’t a spatula, but in my house that’s what we’d call it. Whether you’re Team Turner or not, nonstick-safe tools can be difficult to come by and they’re crucial to keep around if you’re cooking on nonstick cookware. I like having backups so I don’t have to constantly do dishes. This turner is heat-safe up to 425 degrees Fahrenheit and will come in handy for everything from eggs to grilled cheese sandwiches.

The exact reasoning for this being a Factory Seconds item isn’t listed, but a good cast-iron skillet is indispensable for every home chef. It has two pour spouts for easier siphoning or serving, and the finish is resistant to scratches and stains. The skillet is oven-safe up to 650 degrees Fahrenheit.

What Are All-Clad Factory Seconds?

The Factory Seconds Event is run by Home and Cook Sales, an authorized reseller for All-Clad and several other cookware brands. The items featured in the sale (usually) have minor imperfections, like a scuff on the pan, a misaligned name stamp, or simply a dented box. Every product on the website lists the nature of the imperfection in the title (e.g., packaging damage). You’ll need to enter an email address to access the sale.

While the blemishes vary, the merchant says all of the cookware will perform as intended. Should any issue arise, nearly every All-Clad Factory Seconds product is backed by All-Clad’s limited lifetime warranty. (Electric items have a slightly different warranty; check individual product pages for details.) We’ve used more than a dozen Factory Seconds pots, pans, and accessories, and they’ve all worked exactly as advertised. Just remember that all sales are final, and note that you’ll have to pay $10 for shipping. It’s also worth noting that the “before” prices are based on buying the items new, but we still think they offer a good indication of how much you’re saving versus the value you’re getting.


Tech

‘Veronika’ Is the First Cow Known to Use a Tool

Justice for Far Side cartoonist Gary Larson: A team of scientists has observed, for the first time, a cow using a tool in a flexible manner. The ingenuity of “Veronika,” as the animal is called, shows that cattle possess enough intelligence to manipulate elements of their environment and solve challenges they would otherwise be unable to overcome.

Veronika is a pet cow in Austria. Her owners don’t use her for meat or milk production. Nor was she trained to do tricks; on the contrary, over the past 10 years she has developed the ability to find branches in the grass, choose one, hold it with her mouth, and scratch herself with it to relieve skin irritation.

Until now, only chimpanzees had convincingly demonstrated the ability to employ tools to improve their living conditions. Recent studies also point to whales as the only marine animals capable of using complex tools. This European cow is about to join that exclusive group of ingenious animals.

Videos of Veronika circulating online caught the attention of veterinary researchers in Vienna. They visited the farm, conducted behavioral tests, and carried out controlled trials. “In repeated sessions, they verified that her decisions were consistent and functionally appropriate,” a press release stated.

Veronika’s abilities go beyond simply using a point to scratch herself, explain the authors of the study published in Current Biology. In the tests, the cow was offered different textures and objects, and she adapted according to her needs. Sometimes she chose soft bristles and other times a stiffer point. The researchers say she used different parts of the same tool for specific purposes and even modified her technique depending on the type of object or the area of her body she wanted to scratch.

Although they consider using a tool to relieve irritation “less complex” compared to, for example, using a sharp rock to access seeds, the specialists greatly value Veronika’s ability. For now, she demonstrates that she can decide which part of the tool is most useful to her. The finding suggests that we have underestimated the cognitive capacity of cattle, according to the authors.

Why Is Veronika So Skilled?

The team acknowledges that it’s still too early to say that all cows can use tools with the same skill as Veronika. For now, the researchers are trying to determine how this cow developed an awareness of her surroundings.

Researchers believe her particular circumstances played a role. Veronika has lived for 10 years in a complex, open environment filled with manipulable objects—a very different experience from that of cattle raised for milk and meat production. These conditions fostered exploratory and innovative behavior, they say. They are now searching for more videos of cattle using tools to gather further evidence about their cognitive abilities.

“Until now, tool use was considered a select club, almost exclusively for primates (especially great apes, but also macaques and capuchins), some birds like corvids and parrots, and marine mammals like dolphins. Finding it in a cow is a fascinating example of convergent evolution: intelligence arises as a response to similar problems, regardless of how different the animal’s ‘design’ may be,” said Miquel Llorente, director of the Department of Psychology at the University of Girona, who was not involved in the study, in a statement to the Science Media Centre Spain.
