Tech

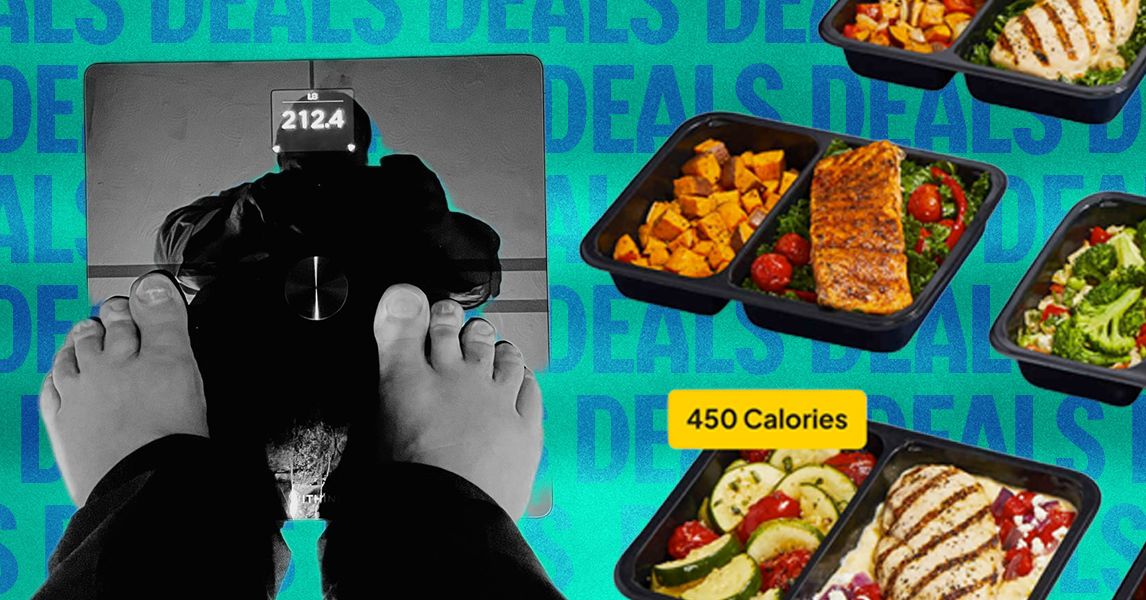

Oh No! A Free Scale That Tells Me My Stress Levels and Body Fat

I will admit to being afraid of scales—the kind that weigh you, not the ones on a snake. And so my first reaction to the idea I’d be getting a free body-scanning scale with a Factor prepared meal kit subscription was something akin to “Oh no!”

It’s always bad or shameful news, I figured, and maybe nothing I don’t already know. Though, as it turned out, I was wrong on both points.

Factor is, of course, the prepared meal brand from meal kit giant HelloFresh, which I’ve tested while reviewing dozens of meal kits this past year. Think delivery TV dinners, but actually fresh and never frozen. Factor meals are meant to be microwaved, but I found when I reviewed Factor last year that the meals actually tasted much better if you air-fry them (ideally using a Ninja Crispi, the best reheating device I know).

Factor especially excels at the low-carb, protein-rich diet that has become equally fashionable among people who want to lose weight and people who like to lift it. Hence, this scale. Factor would like you to be able to track your progress in gaining muscle mass, losing fat, or both, and then presumably keep using Factor to meet your fitness or wellness goals.

While your first week of Factor comes at a discount right now, regular-price meals will be $14 to $15 a serving, plus $11 shipping per box. That’s less than most restaurant delivery, but certainly more than if you were whipping up these meals yourself.

If you subscribe between now and the end of March, the third Factor meal box will come with a free Withings Body Comp scale, which generally retails north of $200. The Withings doesn’t just weigh you. It scans your proportions of fat and bone and muscle, and indirectly measures stress levels and the elasticity of your blood vessels. It is, in fact, WIRED’s favorite smart scale, something like a fitness watch for your feet.

Anyway, to get the deal, use the code CONWITHINGS on Factor’s website, or follow the promo code link below.

Is It My Body

The scale that comes with the Factor subscription is about as fancy as it gets: a $200 Body Comp scale from high-tech fitness monitoring company Withings. The scale uses bioelectrical impedance analysis and some other proprietary methods to measure not just your weight but your body fat percentage, lean muscle mass, visceral fat, bone and water mass, pulse rate, and even the stiffness of your arteries.

To get all this information, all you really need to do is stand on the scale for a few minutes. The scale will recognize you based on your weight (you’ll need to be accurate in describing yourself when you set up your profile for this to work), and then cycle through a series of measurements before giving you a cheery weather report for the day.

Your electrodermal activity (the “skin response via sweat gland stimulation in your feet”) provides a gauge of stress, or at least excitation. The Withings also purports to measure your arterial age, or stiffness, via pulse wave velocity: how fast the pressure wave from each heartbeat travels through your arteries. This sounds esoteric, but it has some scientific backing.

Note that many physicians caution against taking indirect measurements of body composition as gospel. Other physicians counter that previous “gold standard” measurements aren’t perfectly accurate, either. It’s a big ol’ debate. For myself, I tend to take smart-scale measurements as a convenient way to track progress, and also a good home indicator for when there’s a problem that may require attention from a physician.

And so, of course, I was petrified. So much bad news to get all at once, I figured!


You May Think You Have Enough Headphones, but I Keep Finding Reasons for More

You might think you’ve got enough headphones. Most of us have accrued at least two or three pairs over the years, and might have a few more half-working pairs stuck in a drawer somewhere. But I’m here to tell you, if you haven’t fully explored the fabulous world of specialized headphones that has exploded in the past decade, from open earbuds to sports and travel cans, you’re not maximizing your sonic potential. The best headphones are now so varied that they often depend entirely on what you intend to use them for. That means the best headphones for you probably come in many shapes and sizes, and you might actually want more than one pair.

Balling out on lots of headphones for every one of life’s situations doesn’t necessarily mean spending a lot of money. Unless you’re successfully riding the “boomcession,” there’s a fair chance spare cash is tight right now. Luckily, there’s a veritable explosion of impressive budget brands making great stuff, alongside household brands pitching to the cheap seats.

As an audio reviewer for well over a decade, I’ve tried hundreds of models across every color in the headphone rainbow. Here’s how to shop right so you can get the most out of any and all of them, as well as the best headphones I can think of in every category right now.

Noise-Canceling Earbuds: The 2026 Baseline

Let’s start with the modern one-and-done choice: Noise-canceling earbuds are the ultimate jack-of-all-trades. If you only want to own one pair, this is it. The best noise-canceling earbuds sound great, fit neatly in your pocket, and are equally adept at letting in or blocking out environmental sounds to adapt to any situation.

It’s probably no surprise that the wildly popular AirPods Pro are the best headphones for iPhone owners, thanks to impressive performance, loads of features, and seamless integration with all things Apple. They’re a massive step up from the standard AirPods, which offer similar Apple-friendly features and a touch of noise canceling, but don’t perform nearly as well as even most budget earbuds.

For more phone-agnostic options, Bose’s QuietComfort Ultra 2 are the ultimate noise killers, while Sony’s new WF-1000XM6 offer great sound and an utterly natural transparency mode. Technics’ EAH-AZ100 are among my favorites for sound quality.

If those are too pricey, fear not! Budget earbud options are varied and plentiful, from Android-optimized midrangers like the Google Pixel Buds 2a to the stylish Nothing Ear (a) or the highly affordable Soundcore Space A40. If you’re keeping to one pair, I’d put all your chips on better performance, but there’s no shortage of great affordable options, and new pairs at all prices keep rolling in.

Noise-Canceling Over-Ears: Comfort Meets Performance

Anyone who’s taken a long flight can probably relate to the fact that sticking something in your ears for five hours-plus isn’t an exercise in comfort. Enter travel headphones like the best noise-canceling over-ears, which have evolved from early models like Bose’s stalwart QC 25 to become some of the most advanced and downright luxurious audio products for your money. They’re great for other tasks too, from working in a busy office to commuting or simply chilling at home in tranquil reverie.

The latest and greatest, like Sony’s WH-1000XM6 and Bose’s QuietComfort Ultra, offer uncompromising noise cancellation, plush comfort for long listening, and a pile of advanced features like pausing when you speak or automatic sleeping and waking. The Ultra are my favorite for sheer comfort, but there are plenty of rivals like Sonos’ equally comfy Ace, the iconic (and heavy) AirPods Max, or the utterly immersive B&W Px7 S3. Cheaper options abound, and some of my favorites include Sony’s high-punching WH-CH720, the crazy-affordable Soundcore Life Q30, or its newer cousin, the Space One. These won’t bring the same level of performance or tranquility, but they still work great for long flights and beyond.

Open Earbuds: For Keeping Alert

Here’s where things get really fun: Open earbuds have exploded faster than any other audio segment in recent memory, with a kaleidoscope of options from virtually every audio brand. Designed to keep your ears open while delivering satisfying sound, the best open earbuds aren’t ideal for everything you do, but they’re fantastic for specialized activities like ebike riding, where wind resistance renders artificial transparency modes useless. Over time, I’ve found tons of other cool use cases, from walking the dog to sneaking in some Olympics at the bar.

Open earbuds come in various design types, from wacky bone-conductors to wraparound models and (my personal favorite) clip-ons. Their light and ergonomic housings aim to essentially disappear on your ears for all-day listening, and it works better than you might think.

You could spend a lot on pairs like Bose’s excellent Open Ultra, but you really don’t have to, since even the best models are limited in performance. Soundcore’s Aeroclip are my favorite value-to-performance pair, but plenty of cheaper options get the job done, like Acefast’s nearly free Acefit Air or Soundpeat’s Pearclip Pro. Open earbuds are easily my favorite new audio trend.

Workout Headphones: Keep Moving

There’s an obvious Venn diagram overlap between open earbuds and workout headphones, but if you don’t like the idea of keeping your ears open, traditional sports models are a great alternative. My favorite is the revamped Beats Powerbeats Pro 2, which offer tons of features in a wraparound design that’s nearly unshakeable. I like that they’re optimized for Apple devices but still work well with Android, and they come with great noise canceling and a transparency mode. The catch is a high price.

As with open earbuds, there are plenty of cheap options, including the similarly unshakeable (but much more basic) JLab Go Air Sport, which run a mere $30 or less on sale. If you’re not into the minimalist thing, WIRED editor Adrienne So swears by the BlueAnt Pump X over-ear headphones for weightlifting, in large part due to their cooling-gel earpads that go in the fridge overnight to keep sweat at bay. They’ve also got noise canceling and plenty of battery life at over 50 hours per charge. For jogging or cycling, open earbuds are likely a better fit, and some folks simply use their AirPods Pro. Still, it can be nice to have a dedicated pair in your gym bag.

Wired Headphones: Plug It In

The youth delight in resurrecting old tech that the more seasoned among us have long left for dead (cassette tapes?!). In the case of wired headphones, there’s good reason to plug in, starting with better performance for your dollar. If you’re a content creator, musician, or simply a cash-savvy sound connoisseur, you can get an impressive return from wired options, which avoid the sound degradation of many wireless pairs.

Some of our favorite affordable options include classic-looking earbuds like Shure’s iconic SE-112 and Sennheiser’s impressive IE200, and studio-friendly over-ears like Audio-Technica’s ATH-M20x. If you’re willing to spend a bit more, the fantastic Sennheiser HD6XX offer the best sound for the money I’ve heard in any headphone segment. Based on the much pricier HD 650, these are open-back headphones that let in exterior sounds, but the performance is incredible. If you want even better sound and design, there are tons of options, but they’ll cost you.

Fancy Headphones: The Audiophile Angle

If you really want to optimize the wired connection, there’s a whole segment of audiophile headphones made with high-quality materials, innovative speaker technologies, elevated designs, and accordingly elevated pricing. There are a whole bunch of varieties, but for the sake of brevity I’ll break them down into two categories: in-ear monitors (IEMs), the fancy version of in-ear headphones, and over-ears.

IEMs generally use dynamic drivers (the traditional driver type in most headphones and speakers), balanced armatures (much smaller and more accurate drivers), or a mix of both. My favorite pairs come from Ultimate Ears, like the UE 18+ Pro, which are customized for your ears using 3D printing and other techniques. (You’ve likely seen these on TV on musicians and broadcasters.) Other IEMs we like include Sennheiser’s IE 900 and models from Campfire Audio.

For over-ear headphones, Audeze’s planar magnetic headphones are among my favorites, starting as low as $500 (yeah, I know) with the excellent LCD-S20 closed-back headphones. Another incredible pair I recently tested is Meze’s Poet, which are not only the most gorgeous-looking pair I’ve reviewed but also offer some of the clearest and most articulate sound I’ve laid ears on. We also recently reviewed the Grado Signature S750, which have an effortlessly expansive sound that feels like it can’t possibly be coming from mere centimeters away from your eardrums. There are dozens more to try, as audiophilia is its own journey, but this is a good starting point.

Other Headphone Types and Upcoming Features

As I’m sure some of you have already noted, there are still more types of specialty headphones, including gaming headsets, which are another animal altogether, and even TV headphones, which let you switch from the screen’s internal speakers to private, latency-free listening without disturbing anyone else. I’m currently testing a new TV headphones bundle from Sennheiser, the RS 275, which includes a dedicated pair of headphones in the HDR 275 and Sennheiser’s new BTA1 Auracast transmitter (verdict to come).

Speaking of Auracast, it’s an increasingly cool new Bluetooth broadcast protocol that lets a single transmitter stream to an unlimited number of devices at up to 100 meters, like an FM radio signal. Adoption is still in its early stages, but it’s a good feature to look for in new headphones. Other features to consider include an app with an EQ and presets (which the majority of my recommendations include), multipoint pairing to connect to two or more devices at once (again, pretty ubiquitous), and spatial audio features for video formats like Dolby Atmos.


Factor Offers High Protein Meal Delivery Options

I should probably add the disclaimer that I like to cook, was a professional chef for many years, and my family of five rarely eats anything other than home-cooked meals. But I get it. Many people are looking for a way to eat healthier in the midst of busy schedules, and maybe have never learned how to cook, or want to follow a specific diet like keto that requires a lot of research, planning, and effort.

In those situations I can see the appeal of a solution like Factor. Dial in what you want, it shows up, you microwave it, eat, and you’re on your way without caving and ordering pizza for the third time this week.

While Factor’s meals are generally enjoyable and reasonably tasty (for whatever reason, the dishes tending toward Mexican food seemed to be better than the rest), there’s just no denying that eating food out of a segmented plastic tray is, um, uninspiring. At the very least, put your heated results on a real plate. It’ll taste better that way. Trust me, there’s a reason your plate is carefully arranged when it reaches your table at a fancy restaurant. Aesthetics matter.

Photograph: Scott Gilbertson

Factor’s proteins, especially the meats, were the highlight of most of the meals. Options I tried included a meatball and pasta dish with green beans, a bunless burger, shrimp pasta with some zucchini, a faux grits meal (cauliflower grits), and a chicken taco bowl. In every case, the protein was quite tasty and the sauces were a mixed bag, while the vegetables fared less well in the whole cook-it, pack-it, ship-it, reheat-it process. The green beans in particular were what I would call “grim,” rather than the “vibrant and fresh” that I suspect Factor was going for.

But you need to step back from the aesthetic experience and remember the context in which these meals exist. This is not fine dining or even a home-cooked meal, but a healthy alternative to frozen microwavable meals that are high in artificial ingredients and often loaded with unnecessary added sugars. When you remember that, Factor starts to look not only better, but downright appealing.


Everyone Speaks Incel Now

At the beginning of the year, The Cut kicked off a brief discourse cycle by declaring a new lifestyle trend: “friction-maxxing.”

The idea, in a nutshell, is that people have overconvenienced themselves with apps, AI, and other means of near-instant gratification—and would be better off with increased friction in their daily lives, which is to say those mundane challenges that ask some minor effort of them.

Whatever your feelings on that philosophy, the use of “maxxing” as a suffix assumed to be familiar or at least intelligible to most readers of a mainstream news outlet is evidence of another trend: the assimilation of incel terminology across the broader internet. The online ecosystem of incels, or “involuntarily celibate” men, is saturated with this sort of clinical jargon; its aggrieved participants insulate, isolate, and identify themselves through in-group codespeak that is meant to baffle and repel outsiders. So how did non-incels (“normies,” as incels would label them) end up adopting and recontextualizing these loaded words?

Slang, no matter its origins, has a viral nature. It tends to break containment and mutate. The buzzword “woke,” as it pertains to our current politics, comes from African American Vernacular English and once referred to an awareness of racial and social injustice—this usage dates to the middle of the 20th century, preceding even the civil rights movement. But the culture wars of this century have turned “woke” into a favorite pejorative of right-wingers, who wield it as a catchall term for anything that threatens their ideology, such as Black pilots or gender-neutral pronouns.

Back in 2014, the eruption of the Gamergate harassment campaign set the stage for a different linguistic realignment. An organized backlash to women working in the video game industry, and eventually any sort of diversity or progressivism within the medium, it exposed a vein of reactionary anger that would gain a fuller voice during Donald Trump’s 2016 presidential campaign. This was a period when many in the digital mainstream got their first taste of the trollish nihilism and invective that fuels toxic message boards such as 4chan and gave rise to a network of anti-feminist manosphere sites collectively known as the “PSL” community: PUAHate (a board for venting about pickup artists, it was shut down soon after the 2014 Isla Vista killing spree carried out by Elliot Rodger, who frequented the forum), SlutHate (a straightforward misogyny hub), and Lookism (where incels viciously critique each other’s appearance).

Lookism, named for the idea that prejudice against the less attractive is as common and pernicious as sexism or racism, is the only forum of the PSL trifecta that survives today, and while we don’t know who coined the “maxxing” idiom, it’s the likeliest source for the first verb with this construction. “Looksmaxxing,” which borrows from the role-playing game concept of “min-maxing,” or elevating a character’s strengths while limiting weaknesses, became the preferred expression for attempts to improve one’s appearance in pursuit of sex. This could mean something as simple as a style makeover or as extreme as “bonesmashing,” a supposed technique of achieving a more defined jaw by tapping it with a hammer.

If the 2000s introduced people to pickup lingo like “game” and “negging,” the 2010s ushered in language that extended the Darwinian vision of the dating pool as a cutthroat and strictly hierarchical marketplace. “AMOG,” an initialism for “alpha male of the group,” gave us “mogging,” a display where one man flexes his physical superiority over a rival. An ideally masculine specimen might also be recognized as a “Chad,” who allegedly enjoys his pick of attractive partners, while a Chad among Chads is, of course, a “Gigachad.” Women were disparaged as “female humanoids,” then “femoids,” and finally just “foids.”
