Tech

Generative and agentic AI in security: What CISOs need to know | Computer Weekly

Artificial intelligence (AI) is now embedded across almost every layer of the modern cyber security stack. From threat detection and identity analytics to incident response and automated remediation, AI-backed capabilities are no longer emerging features but baseline expectations. For many organisations, AI has become inseparable from how security tools operate.

Yet as adoption accelerates, many chief information security officers (CISOs) are discovering an uncomfortable reality. While AI is transforming cyber security, it is also introducing new risks that existing evaluation and governance approaches were never designed to manage. This has created a widening gap between what AI-backed security tools promise and what organisations can realistically control.

When “AI-powered” becomes a liability

Security leaders are under pressure to move quickly. Vendors are racing to embed generative and agentic AI into their platforms, often promoting automation as a solution to skills shortages, alert fatigue, and response latency. In principle, these benefits are real, but many AI-backed tools are being deployed faster than the controls needed to govern them safely.

Once AI is embedded in security platforms, oversight becomes harder to enforce. Decision logic can be opaque, model behaviour may shift over time, and automated actions can occur without sufficient human validation. When failures occur, accountability is often unclear, and tools designed to reduce cyber risk can, if poorly governed, amplify it.

Gartner’s 2025 Generative and Agentic AI survey highlights this risk, with many companies deploying AI tools reporting gaps in oversight and accountability. The challenge grows with agentic AI – systems capable of making multi-step decisions and acting autonomously. In security contexts, this can include dynamically blocking users, changing configurations, or triggering remediation workflows at machine speed. Without enforceable guardrails, small errors can cascade quickly, increasing operational and business risk.

Why traditional buying criteria fall short

Despite this shift, most security procurement processes still rely on familiar criteria such as detection accuracy, feature breadth and cost. These remain important, but they are no longer sufficient. What is often missing is a rigorous assessment of trust, risk and accountability in AI-driven systems. Buyers frequently lack clear answers about how AI decisions are made, how training and operational data are protected, how AI model, application and agent behaviour is monitored over time, and how automated actions can be constrained or overridden when risk thresholds are exceeded. In the absence of these controls, organisations are effectively accepting black-box risk.

This is why a Trust, Risk and Security Management (TRiSM) framework for AI becomes increasingly relevant for CISOs. AI TRiSM shifts governance away from static policies and towards enforceable technical controls that operate continuously across AI systems. It recognises that governance cannot rely on intent alone when AI systems are dynamic, adaptive and increasingly autonomous.

From policy to enforceable control

One of the most persistent misconceptions about AI governance is that policies, training and ethics committees are sufficient. While these elements remain important, they do not scale in environments where AI systems make decisions in real time. Effective governance requires controls that are embedded directly into workflows. These controls must validate data before it is used, monitor AI model, application and agent behaviour as it evolves, enforce policies contextually rather than retrospectively, and provide transparent reporting for audit, compliance and incident response.
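To make the idea of controls "embedded directly into workflows" more concrete, here is a minimal Python sketch of a pre-execution gate for actions proposed by an AI agent. It validates the proposal, applies a contextual policy before anything runs, escalates high-risk or sensitive actions to a human, and writes an audit record for every decision. The action names, protected targets and the 0.7 risk threshold are hypothetical placeholders. The risk score is assumed to come from a separate scoring step. This is an illustration only, not a Gartner AI TRiSM reference design or any vendor's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REQUIRE_APPROVAL = "require_approval"
    DENY = "deny"


@dataclass
class ProposedAction:
    # Hypothetical shape of an action an AI agent wants to take.
    agent_id: str
    action: str        # e.g. "block_user"
    target: str
    risk_score: float  # assumed to come from a separate scoring step, 0.0-1.0


# Hypothetical contextual policy: actions an agent may take at all, and
# targets too sensitive to touch without a human.
ALLOWED_ACTIONS = {"block_user", "isolate_host", "open_ticket"}
PROTECTED_TARGETS = {"domain-admin", "ceo-laptop"}
APPROVAL_THRESHOLD = 0.7  # assumed risk threshold


@dataclass
class PolicyGate:
    audit_log: list = field(default_factory=list)

    def evaluate(self, proposal: ProposedAction) -> Decision:
        # 1. Validate input before it is used.
        if not proposal.target or not 0.0 <= proposal.risk_score <= 1.0:
            decision = Decision.DENY
        # 2. Enforce policy before the action runs, not after the fact.
        elif proposal.action not in ALLOWED_ACTIONS:
            decision = Decision.DENY
        elif (proposal.target in PROTECTED_TARGETS
              or proposal.risk_score >= APPROVAL_THRESHOLD):
            decision = Decision.REQUIRE_APPROVAL
        else:
            decision = Decision.ALLOW
        # 3. Record every decision for audit, compliance and incident response.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": proposal.agent_id,
            "action": proposal.action,
            "target": proposal.target,
            "risk": proposal.risk_score,
            "decision": decision.value,
        })
        return decision
```

Returning `REQUIRE_APPROVAL` rather than silently allowing or denying keeps a human in the loop for exactly the cases the policy flags. Routine low-risk actions still run at machine speed.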

The rise of “guardian” capabilities

Independent guardian capabilities are a notable step forward in AI governance. Operating separately from AI systems, they continuously monitor, enforce, and constrain AI behaviour, helping organisations maintain control as AI systems become more autonomous and complex.
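In its simplest form, a guardian capability can be pictured as a circuit breaker that runs separately from the agent it watches. The hypothetical Python sketch below applies a sliding-window limit to high-impact actions. Once that limit is hit, it halts all further automated actions until a person reviews and resets it. This is one way to stop a small error from cascading at machine speed. The limits and action names are illustrative assumptions. A real guardian would also need its own trustworthy telemetry rather than relying on the agent to report its actions honestly.

```python
from collections import deque


class GuardianCircuitBreaker:
    """Independent monitor that halts an agent after too many high-impact actions.

    Hypothetical sketch: the limits and action names are illustrative assumptions.
    """

    HIGH_IMPACT = {"block_user", "change_config", "isolate_host"}

    def __init__(self, max_high_impact: int = 5, window_seconds: float = 60.0):
        self.max_high_impact = max_high_impact
        self.window_seconds = window_seconds
        self._recent = deque()  # timestamps of recent high-impact actions
        self.tripped = False

    def permit(self, action: str, now: float) -> bool:
        """Return True if the agent may execute `action` at time `now` (seconds)."""
        if self.tripped:
            return False
        # Drop events that have slid out of the time window.
        while self._recent and now - self._recent[0] > self.window_seconds:
            self._recent.popleft()
        if action in self.HIGH_IMPACT:
            if len(self._recent) >= self.max_high_impact:
                self.tripped = True  # stop all automation until a human resets
                return False
            self._recent.append(now)
        return True

    def reset(self) -> None:
        """Manual override, intended to be called only after human review."""
        self._recent.clear()
        self.tripped = False
```

The asymmetry is deliberate: the breaker trips automatically, but only a person can reset it.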

AI is already delivering value by improving pattern recognition, behavioural analytics and the prioritisation of security signals. But speed without oversight introduces risk. Even the most advanced AI cannot fully replace human judgement, particularly in automated response.

The true competitive advantage will go to organisations that govern AI effectively, not just adopt it quickly. CISOs should prioritise enforceable controls, operational transparency, and independent oversight. In environments where AI is both a defensive asset and a new attack surface, disciplined governance is essential for sustainable cyber security.

Gartner analysts will further explore how AI-backed security tools and governance strategies are reshaping cyber risk management at the Gartner Security & Risk Management Summit in London, from 22 to 24 September 2026.

Avivah Litan is distinguished vice president analyst at Gartner


This Defense Company Made AI Agents That Blow Things Up

Like many Silicon Valley companies today, Scout AI is training large AI models and agents to automate chores. The big difference is that instead of writing code, answering emails, or buying stuff online, Scout AI’s agents are designed to seek and destroy things in the physical world with exploding drones.

In a recent demonstration, held at an undisclosed military base in central California, Scout AI’s technology was put in charge of a self-driving off-road vehicle and a pair of lethal drones. The agents used these systems to find a truck hiding in the area, and then blew it to bits using an explosive charge.

“We need to bring next-generation AI to the military,” Colby Adcock, Scout AI’s CEO, told me in a recent interview. (Adcock’s brother, Brett Adcock, is the CEO of Figure AI, a startup working on humanoid robots.) “We take a hyperscaler foundation model and we train it to go from being a generalized chatbot or agentic assistant to being a warfighter.”

Adcock’s company is part of a new generation of startups racing to adapt technology from big AI labs for the battlefield. Many policymakers believe that harnessing AI will be the key to future military dominance. The combat potential of AI is one reason why the US government has sought to limit the sale of advanced AI chips and chipmaking equipment to China, although the Trump administration recently chose to loosen those controls.

“It’s good for defense tech startups to push the envelope with AI integration,” says Michael Horowitz, a professor at the University of Pennsylvania who previously served in the Pentagon as deputy assistant secretary of defense for force development and emerging capabilities. “That’s exactly what they should be doing if the US is going to lead in military adoption of AI.”

Horowitz also notes, though, that harnessing the latest AI advances can prove particularly difficult in practice.

Large language models are inherently unpredictable, and AI agents, like the ones that control the popular AI assistant OpenClaw, can misbehave when given even relatively benign tasks such as ordering goods online. Horowitz says it may be especially hard to demonstrate that such systems are robust from a cybersecurity standpoint, something that would be required for widespread military use.

Scout AI’s recent demo involved several steps where AI had free rein over combat systems.

At the outset of the mission the following command was fed into a Scout AI system known as Fury Orchestrator:

A relatively large AI model with more than 100 billion parameters, which can run either on a secure cloud platform or an air-gapped computer on-site, interprets the initial command. Scout AI uses an undisclosed open source model with its restrictions removed. This model then acts as an agent, issuing commands to smaller, 10-billion-parameter models running on the ground vehicles and the drones involved in the exercise. The smaller models also act as agents themselves, issuing their own commands to lower-level AI systems that control the vehicles’ movements.

Seconds after receiving marching orders, the ground vehicle zipped off along a dirt road that winds between brush and trees. A few minutes later, the vehicle came to a stop and dispatched the pair of drones, which flew into the area where, according to their instructions, the target was waiting. After spotting the truck, an AI agent running on one of the drones issued an order to fly toward it and detonate an explosive charge just before impact.

Tech

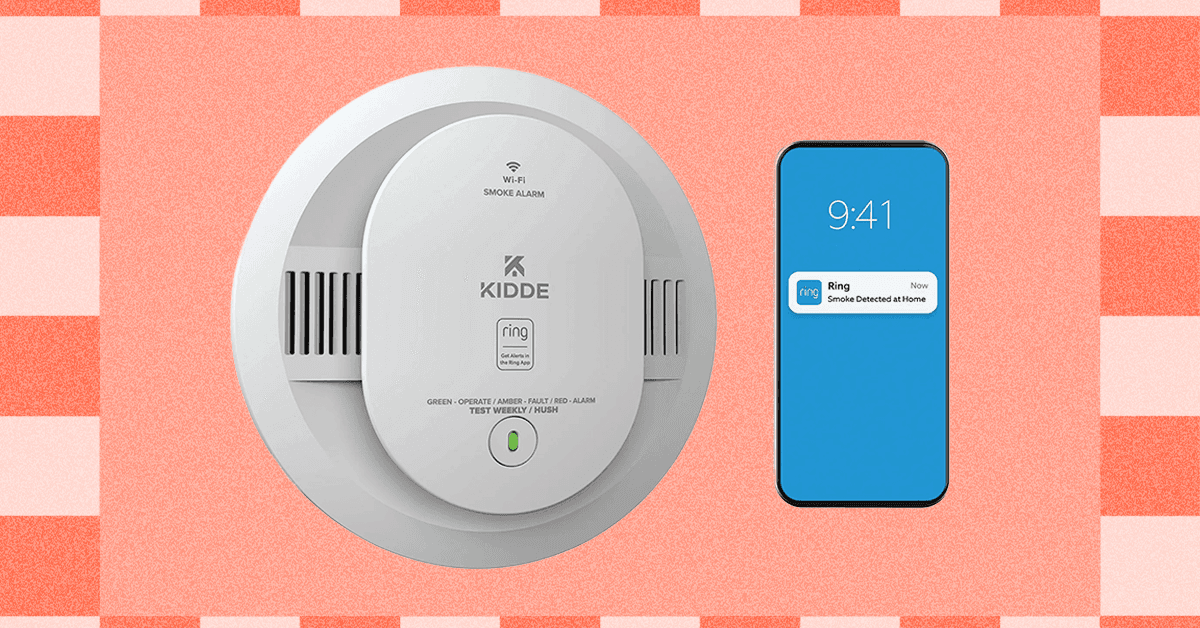

Shopping for a Smart Smoke Detector? Check Out the New Kidde Model

Kidde has become the best-known name in the world of smart smoke detectors—a relatively low bar given how few people know the brand of their smoke detector. Still, you’ll find Kidde recommended by reviewers and customers across the internet with surprising enthusiasm, which has only increased since the brand started collaborating with Ring and Amazon, making it an easy add-on to Alexa-powered smart homes.

Until now, if you wanted a Kidde smart smoke alarm connected to your other devices, one that would send you alerts through the Ring app, you could only choose a hardwired model. Anyone who wanted something battery-powered from Kidde along the lines of the now-discontinued Google Nest Protect had to skip the smarts.

At the CES trade show in January, Kidde announced its first battery-only smart smoke alarm, once again in collaboration with Ring. The Kidde Ring Smart Smoke + CO Alarm has been available for preorder since the announcement, but as of today is fully available to buy.

How It’ll Work

Kidde’s Ring Smart Smoke + CO Alarm will use two AA batteries (included in the box). It’ll come with a mounting bracket for installation, and you can choose to mount it wherever you see fit, thanks to the battery flexibility. Kidde recommends not installing a smoke detector within six feet of heating appliances, less than four inches from an A-frame type ceiling, or in areas like garages or near things like lights, fans, vents, windows, and anything that could directly expose it to the weather.

Once it’s installed, you can connect it to your Wi-Fi and the Ring app. You won’t need any additional Ring hardware to have it work with the Ring app: no hub is required, even though you’ll find one in most Alexa speakers these days. Ideally, you’d already be a Ring user with the app installed if you’ve chosen this smoke detector, but if you aren’t, make sure to get the app and set up your account.

Third-Party Smoke

Both Amazon and Google have chosen to partner with other brands instead of making their own smoke detectors in-house. After discontinuing the Google Nest Protect, Google now partners with First Alert for a replacement smart smoke and carbon monoxide alarm, while Ring, in addition to its Kidde partnership, can also work with Z-Wave models from First Alert.

It’s not a surprising move from Google, which has been making less of its own hardware and instead placing its smarts in other brands’ products. Amazon usually likes to make its own massive range of hardware, so it’s worth noting that if Amazon isn’t making this device itself, there’s a reason. Maintaining such a specialized line of products may be a poor investment, or the category may be hard enough to do well that the company would rather leverage someone else’s tech. Whatever the reason (maybe both), if you’re shopping for a new smart smoke alarm, Kidde’s newest model is one to consider, especially if you have an Alexa household.


Flaws in Google, Microsoft products added to Cisa catalogue | Computer Weekly

Flaws in the Google Chromium web browser engine and Microsoft Windows Video ActiveX Control are among six issues added to the Cybersecurity and Infrastructure Security Agency’s (Cisa’s) Known Exploited Vulnerabilities (Kev) catalogue this week.

Their inclusion on the regularly updated Kev list requires US government agencies to take remedial action by a set date (10 March 2026 in this instance). More broadly, for private sector organisations all over the world, it serves as a timely guide to which vulnerabilities are being actively exploited in the wild and warrant urgent attention.
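For teams using the Kev catalogue as a prioritisation aid, CISA also publishes it as a machine-readable JSON feed. The sketch below filters an already downloaded and parsed copy for entries whose remediation due date falls on or before a chosen cutoff, optionally restricted to the vendors an organisation actually runs. The field names used here (`vulnerabilities`, `cveID`, `vendorProject`, `dueDate`) are assumed to match the feed's schema and should be checked against the current version before being relied on.

```python
from __future__ import annotations

from datetime import date


def due_before(catalog: dict, cutoff: date, vendors: set[str] | None = None) -> list[dict]:
    """Return Kev entries whose remediation due date is on or before `cutoff`.

    Assumes the catalogue was parsed from CISA's JSON feed, with a top-level
    "vulnerabilities" list whose entries carry "cveID", "vendorProject" and
    an ISO 8601 "dueDate" field.
    """
    hits = []
    for entry in catalog.get("vulnerabilities", []):
        # Optionally keep only vendors present in our own estate.
        if vendors is not None and entry.get("vendorProject") not in vendors:
            continue
        if date.fromisoformat(entry["dueDate"]) <= cutoff:
            hits.append(entry)
    # ISO dates sort correctly as strings, so this puts the most urgent first.
    return sorted(hits, key=lambda e: e["dueDate"])
```

A call such as `due_before(json.load(f), date(2026, 3, 10), vendors={"Google", "Microsoft"})` would then list the matching entries due by this week's deadline, assuming those are the vendor names the feed uses.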

The Google Chromium issue, tracked as CVE-2026-2441, is a remote code execution (RCE) flaw arising from a use-after-free condition, in which the application continues to use a pointer to a memory location after that memory has been freed. It is classed as a zero-day.

Google said it was “aware” that an exploit for the flaw exists in the wild and has updated the Stable channel to 145.0.7632.75/76 for Windows and Macintosh, and 144.0.7559.75 for Linux.
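Checking whether endpoints have picked up the fixed Stable builds is a version comparison, and it has to be done numerically, component by component. Compared as plain text, "145.0.7632.9" would wrongly sort after "145.0.7632.75". The minimal sketch below encodes the minimum builds Google quoted. The platform keys are illustrative, and collecting installed versions from endpoints is assumed to be handled by existing inventory tooling.

```python
from __future__ import annotations


def parse_version(v: str) -> tuple[int, ...]:
    """Turn a dotted version like '145.0.7632.75' into a comparable tuple of ints."""
    return tuple(int(part) for part in v.split("."))


# Minimum fixed Stable builds as quoted by Google (Windows/Mac ship .75 or .76).
MIN_FIXED = {
    "windows": "145.0.7632.75",
    "mac": "145.0.7632.75",
    "linux": "144.0.7559.75",
}


def is_patched(platform: str, installed: str) -> bool:
    """True if `installed` is at or above the minimum fixed build for `platform`."""
    return parse_version(installed) >= parse_version(MIN_FIXED[platform])
```

Tuples compare element by element, so `(145, 0, 7632, 9) < (145, 0, 7632, 75)` holds, which is the behaviour a patch check needs.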

The Microsoft flaw dates back almost 20 years and carries the identifier CVE-2008-0015. It is also an RCE vulnerability, but it arises from a stack-based buffer overflow in the ActiveX component of Windows Video and can be triggered if a user on a vulnerable system is convinced to visit a malicious web page.

Its re-emergence suggests threat actors are using it to target organisations that failed or forgot to patch years ago and are still running legacy systems and discontinued software.

Two other vulnerabilities on Cisa’s radar are CVE-2020-7796, a server-side request forgery (SSRF) vulnerability in Synacor Zimbra Collaboration Suite, and CVE-2024-7694 in Team T5 ThreatSonar Anti-Ransomware, in which a failure to properly validate the content of uploaded files enables a remote attacker with admin rights to upload malicious files and execute arbitrary system commands.
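SSRF flaws such as the Zimbra issue let an attacker make a server send requests on their behalf, typically to internal services or cloud metadata endpoints that are unreachable from outside. A common defensive pattern for applications that must fetch user-supplied URLs is to allow only web schemes, resolve the hostname and refuse any address that is not globally routable. The Python sketch below follows that pattern. It is a generic mitigation, not the fix Synacor or GitLab shipped, and on its own it does not stop DNS rebinding or malicious redirects.

```python
from __future__ import annotations

import ipaddress
import socket
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"http", "https"}


def _resolve(host: str) -> list[str]:
    """Resolve a hostname to all of its IP addresses (IPv4 and IPv6)."""
    return [info[4][0] for info in socket.getaddrinfo(host, None)]


def is_safe_outbound_url(url: str, resolve=_resolve) -> bool:
    """Reject URLs that would make the server call internal or reserved addresses.

    Sketch only: it checks every address the host resolves to, but a real
    defence must also connect to the validated address (to stop DNS
    rebinding) and re-check any redirects.
    """
    try:
        parts = urlsplit(url)
        host = parts.hostname
    except ValueError:  # e.g. a malformed IPv6 literal
        return False
    if parts.scheme not in ALLOWED_SCHEMES or not host:
        return False
    try:
        addresses = resolve(host)
    except (OSError, UnicodeError):  # resolution failed or invalid hostname
        return False
    if not addresses:
        return False
    for addr in addresses:
        ip = ipaddress.ip_address(addr.split("%")[0])  # strip any IPv6 zone id
        # Loopback, private, link-local (e.g. 169.254.169.254) etc. are not global.
        if not ip.is_global:
            return False
    return True
```

The optional `resolve` parameter exists so the check can be tested without real DNS lookups; in production the default resolver is used.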

Also added to the Kev catalogue this week are CVE-2026-22769, a hardcoded credential vulnerability in Dell RecoverPoint for Virtual Machines that enables an unauthenticated, remote attacker to gain access to the operating system, and CVE-2021-22175, another SSRF issue in GitLab.

Gunter Ollmann, chief technology officer (CTO) at Cobalt, a supplier of penetration-testing services, said that Cisa’s latest Kev additions highlighted a persistent reality for cyber security pros: attackers are pragmatic, not fashionable.

“They will exploit a brand-new Chrome heap corruption vulnerability just as readily as a 2008-era ActiveX buffer overflow if it gives them reliable access,” said Ollmann. “What stands out here is the diversity of attack surface, from browsers and collaboration platforms to endpoint software that is supposed to defend against ransomware.”

Ollmann said this reinforced a clear need for continuous, adversary-driven testing that reflects how threat actors chain exploits, SSRF flaws and legacy weaknesses into practical intrusion paths.

He added: “Organisations cannot treat patching as a quarterly hygiene exercise. They need ongoing validation that exposed services, client-side software, and defensive tooling are resilient under real-world attack conditions. The Kev catalog is not just a list of bugs, it is a blueprint of what adversaries are successfully monetising today.”
