Tech

OpenAI reaches new agreement with Microsoft to change its corporate structure

OpenAI has reached a new tentative agreement with Microsoft and said its nonprofit, which technically controls its business, will now be given a $100 billion equity stake in its for-profit corporation.

The maker of ChatGPT said it had reached a new nonbinding agreement with Microsoft, its longtime partner, “for the next phase of our partnership.”

The announcements on Thursday include few details about these new arrangements. OpenAI’s proposed changes to its corporate structure have drawn the scrutiny of regulators, competitors and advocates concerned about the impacts of artificial intelligence.

OpenAI was founded as a nonprofit in 2015 and its nonprofit board has continued to control the for-profit subsidiary that now develops and sells its AI products. It’s not clear whether the $100 billion equity stake the nonprofit will get as part of this announcement represents a controlling stake in the business.

California Attorney General Rob Bonta said last week that his office was investigating OpenAI’s proposed restructuring of its finances and governance. His office said it could not comment on the new announcements but said it is “committed to protecting charitable assets for their intended purpose.”

Bonta and Delaware Attorney General Kathy Jennings also sent the company a letter expressing concerns about the safety of ChatGPT after meeting with OpenAI’s legal team earlier last week in Delaware, where OpenAI is incorporated.

“Together, we are particularly concerned with ensuring that the stated safety mission of OpenAI as a non-profit remains front and center,” Bonta said in a statement last week.

Microsoft invested its first $1 billion in OpenAI in 2019 and the two companies later formed an agreement that made Microsoft the exclusive provider of the computing power needed to build OpenAI’s technology. In turn, Microsoft heavily used the technology behind ChatGPT to enhance its own AI products.

The two companies announced on Jan. 21 that they were altering that agreement, enabling the smaller company to build its own computing capacity, “primarily for research and training of models.” That coincided with OpenAI’s announcements of a partnership with Oracle to build a massive new data center in Abilene, Texas.

But other parts of its agreements with Microsoft remained up in the air as the two companies appeared to veer further apart. Their Thursday joint statement said they were still “actively working to finalize contractual terms in a definitive agreement.” Both companies declined further comment.

OpenAI had given its nonprofit board of directors—whose members now include a former U.S. Treasury secretary—the responsibility of deciding when its AI systems have reached the point at which they “outperform humans at most economically valuable work,” a concept known as artificial general intelligence, or AGI.

Such an achievement, per its earlier agreements, would cut off Microsoft from the rights to commercialize such a system, since the terms “only apply to pre-AGI technology.”

OpenAI’s corporate structure and nonprofit mission are also the subject of a lawsuit brought by Elon Musk, who helped found the nonprofit research lab and provided initial funding. Musk’s suit seeks to stop OpenAI from taking control of the company away from its nonprofit and alleges it has betrayed its promise to develop AI for the benefit of humanity.

© 2025 The Associated Press. All rights reserved. This material may not be published, broadcast, rewritten or redistributed without permission.

Citation: OpenAI reaches new agreement with Microsoft to change its corporate structure (2025, September 13) retrieved 13 September 2025 from https://techxplore.com/news/2025-09-openai-agreement-microsoft-corporate.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.

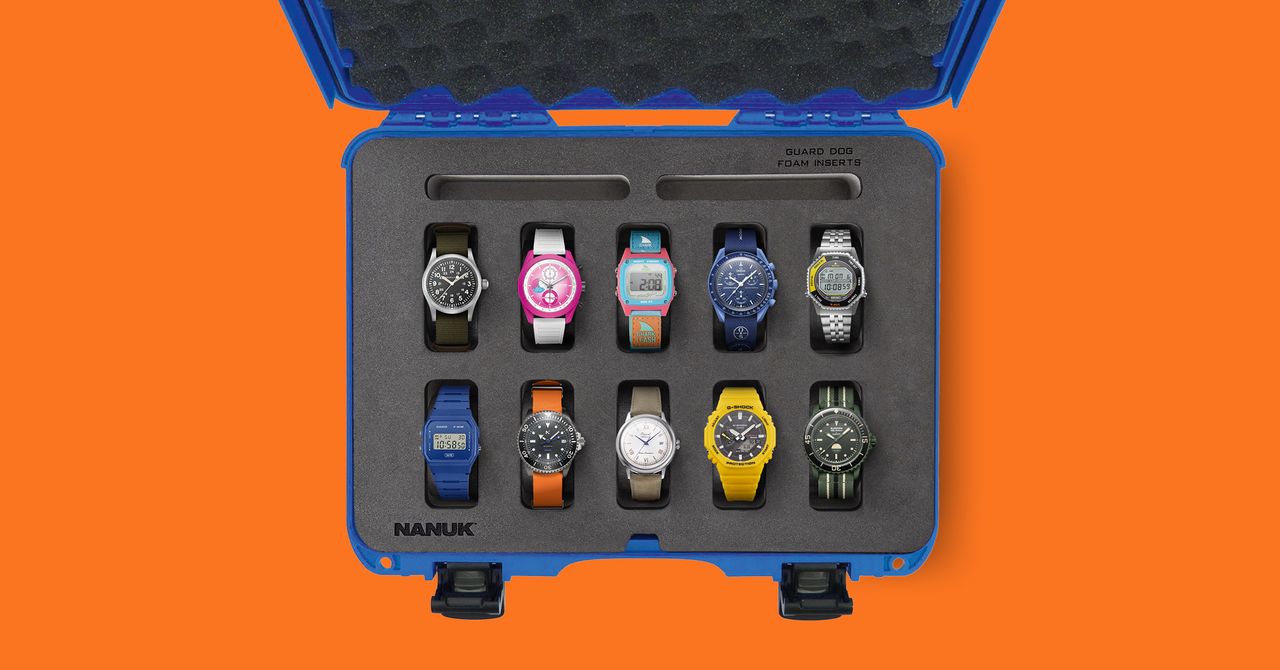

Building a Watch Collection on a Budget? Here’s Where to Start

You don’t need a four-figure Swiss movement to know what time it is—or look good doing it. One of the most wonderful things about “budget” watches today (although it’s kinder, or more appropriate, to say “affordable”) is that brands have learned to take design cues from luxury timepieces while quietly getting very good at the fundamentals: reliable movements, thoughtful materials, and proportions that don’t scream “cheap.” Take a look at the Orient in WIRED’s selection below as a prime example.

It could easily be argued that we’re in a golden age of affordable horology (see our full guide here for definitive proof), where, if you choose wisely, $350 or less can buy everything from a desirable dress watch to a high-end collaboration, or even a supremely capable and classically chic diver: pieces that will see you right from sunken wreck to boardroom table. And let’s not forget the retro allure of digital watches right now, either, with the Shark Classic not only one of our favorites here but also, at $70, the most affordable.

Moreover, should you decide to bag more than a few (and who could blame you at these prices?), we’ve even got the perfect carry case picked out: Nanuk’s $175 910 Watch Case (pictured above). Made from NK-7 resin, rated IP67 waterproof and dustproof, and fitted with Nanuk’s patented PowerClaw latching system, it’s ideal for securing any timepiece collection, be it bargain or big budget.

Be sure to check out our other wearable coverage, including the Best Budget Watches Under $1,000, Best Smartwatches, Best Fitness Trackers, and Best Smart Rings.

I Tested 10 Popular Date-Night Boxes With My Hinge Dates

Like the Five Senses deck above, this scratch-off card set is played in sequence, with optional “level up” cards to really push intimacy, and separate cards for each partner with secret directions. For this date, you’ll both bring a red item that you show at certain points to signify that you’re open to physical touch. Then you’ll go out to dinner and have intentional conversation, and every time a partner pulls out the red item, you’ll follow the prompts to initiate increasingly intimate physical acts, ranging from hand holding to neck kisses. So there we were, at Illegal Taqueria, edging each other over al pastor tacos (I kid).

Many of the cards urged a partner not to interrupt or solve problems, but to ask questions and talk dirty. My date said, “I think this may be for couples who hate each other.” I had to agree. The second part of the date involved driving and stoplights, but since we were in Brooklyn, we walked down the trash-filled sidewalk and pretended to be a suburban couple on the fritz instead.

The rest of the date included buying things for sexy time, like whipped cream and blindfolds. I’m vegan and had no desire to lick cream from chest hair, so we came home, stripped, and did our best to keep our eyes closed (in lieu of a blindfold). It was overall a strange experience for us both, I think. If you and your partner need a lot of prompting to connect, compliment, and be physical, this set is for you.

Date: Greg, 10/10 (Note: I didn’t find this man on Hinge; I met him the old-fashioned way, in a bar at 2 am.)

Box: 6/10

WIRED’s Guide to Actually Fun Valentine’s Day Gifts

Valentine’s Day is a sneaky one. It’s easy to let grabbing fun and unique Valentine’s Day gifts fall by the wayside while you recover from the Christmas holidays, but it’s not one to miss if you have a partner you want to shower with a little extra love.

If you’re feeling too wiped to shop, good news: I’ve got you covered. I’ve rounded up some of our favorite ideas for the year’s most romantic holiday, from Lego sets you can build as a date and date boxes filled with ideas to last you all year long, to gorgeous flowers you can get delivered in a snap and cozy robes you’ll want to lounge in together. This guide covers all the Valentine’s Day gifts we’re excited to give this year.

Curious about what else we recommend? Don’t miss our Gifts for Lovers, Gifts for Moms, Gifts for Plant Lovers, Gifts for People Who Work from Home, and Best Blind Boxes for more gifts and shopping ideas.

For a Gift That’s a Date

My husband and I are planning our fourth or fifth year of our favorite Valentine’s Day date: building Lego sets together. Afterward, we get to enjoy the fruits (well, flowers) of our labor around the home forevermore. These sets serve as both the gift and the activity. Building the dried-flower centerpiece together was probably my all-time favorite, since you can each work on one half simultaneously and then click the halves together at the end; a close second was each building a different-color bonsai tree.

For a Daytime Adventure

Building on the idea of date activities that involve gifts, this multi-person paddleboard is a fun way to spend time outdoors while staying together the entire time. It’s massive, almost raftlike, so that it can support the weight of up to three adults, but once we got the hang of the size, it wasn’t hard to maneuver. Sometimes we’d both row together, sometimes I’d let my husband do all the work. It made for a lovely daytime adventure together, and I can’t wait for the next warm day for my husband and me to take this out on our local harbor again. It’s big enough that we could bring our son, though it’s much more peaceful as a date activity. It’s inflatable, and I’d recommend grabbing an electric filler since it takes a lot of manual pumping otherwise.

For Flowers on Demand

The classic go-to for Valentine’s Day is, of course, flowers. WIRED reviewer Boutayna Chokrane tested several flower delivery services to find the best one to get sent to your home, and her favorite is Ode à la Rose, specifically its Edith arrangements. The business was created by two former French bankers, and the arrangements’ design choices feel distinctly chic in a way only French romance can. The Edith bouquet consists entirely of Columbus double tulips from Holland, which come hand-tied in a travel vase inside a fun pink box. The flowers ship nationwide, and there’s same-day delivery in New York, Chicago, Los Angeles, Austin, Miami, and Washington, DC.

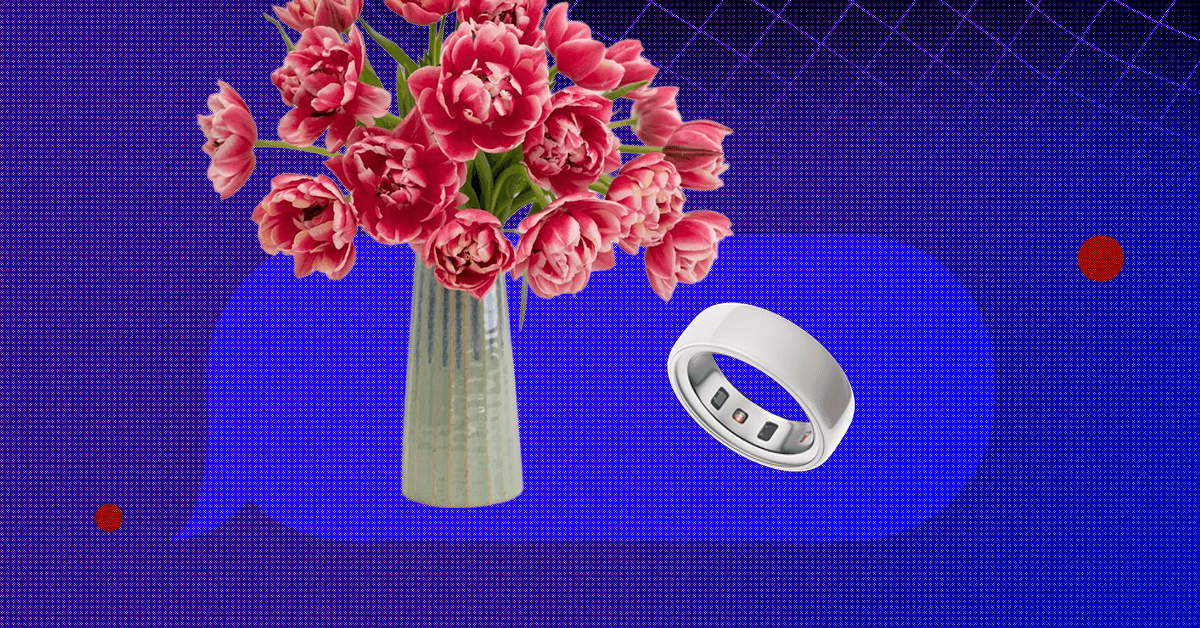

For a Jewelry Upgrade

Maybe you’ve already exchanged rings, or maybe you’re looking for your first set without committing to “I do.” Either way, the most popular fitness tracker to get these days is a smart ring, and Oura is the ruler of the space. The latest model is the Ring 4, and it comes in both metallic and ceramic finishes. Many of my friends love theirs. I wish I had one, but they don’t make sense for my husband and me since we’re an aerialist and rock climber duo. Live my dreams for me and get this for your valentine (and yourself)!

For Your Fave Photographer

If your romantic partner loves to capture photos, a digital photo frame is the perfect gift (and you’ll benefit, too, since you’re likely the number one fan of their photography!). I’m the photographer of our house, and our Aura frame is my husband’s favorite gadget because it showcases photos I’ve captured of our son and our life together over the years. Our wedding photos can be found on there too, as well as the occasional good photo of me that he’s captured. It’s a monthly ritual for me to go through my camera roll and add my latest favorites. Aura’s my favorite because the range of frames is beautiful, and the storage is unlimited with no fees or subscriptions.

For the Cozy Couple

One of my favorite souvenirs around the house is a matching robe set that my husband and I bought on our honeymoon. Our all-cotton robes are from the Ten Thousand Waves Japanese spa in New Mexico (the final destination of a Southwestern US road trip) and are great for taking to the pool or using after a shower on a hot day. But I still love a good fluffy robe during the colder season, especially since it can double as a towel. Get your partner one of these cozy robes to give them something luxurious to use after their next everything shower or quick rinse-off. Cozy Earth’s robe is crazy-soft thanks to its blend of cotton and bamboo viscose, while this flannel robe from L.L.Bean is one of our favorites for anyone who works from home.

For Your Inner Theater Kids

If your partner loves to sing along to the Wicked soundtrack and is regularly suggesting karaoke as a group activity, then give them the gift of making karaoke happen anywhere with these gadgets. The Bonaok Karaoke Microphone is one of our favorite karaoke microphones, letting you sing anywhere without lugging bulky equipment. The Ikarao Shell S2 is a portable device with two mics, a built-in screen, and support for streaming services, so you can sing along to your favorite songs on Spotify.

For the Fitness Couple

After the Christmas season, I saw a video on my For You page that roasted how every mom had clearly gotten a matching workout outfit set for Christmas and was out wearing it on Boxing Day. As a mom myself, all I could think of was how much I would love another matching workout set. I’m serious. They’re great for workouts, quick errands, and day care or school drop-off. My latest favorite set is from Bombshell Sportswear. The set is both super soft and fits securely without any annoying squeezing, and it’s getting the most compliments of all my sets. I wish I’d sized up in the bolero, though: as an aerialist, I have slightly bulkier lat muscles than the average person.

Have a partner who doesn’t need a matching set? Try a great pair of workout shoes instead, which are even more useful for both workouts and daily life. WIRED reviewer Adrienne So says these R.A.D. shoes suit a range of uses: they’re designed for gym, HIIT, CrossFit, and hybrid workouts, yet are soft enough for treadmill running. They look fantastic, too.

For the Beloved Bookworms

A Kindle is always a great gift for anyone who reads in any format. Funnily enough, my siblings and I are about to buy one for my dad for his birthday (two weeks before Valentine’s Day), and I recommended my favorite pick, the Kindle Paperwhite, since the standard Kindle is a little too small for his 6-foot-4 frame to hunch down over, and he doesn’t read enough illustrated books to make the Colorsoft the right jump for him. If your partner already has a Kindle, consider a PopSockets Kindle case and grip: I’m still in love with my matching set, and PopSockets has since launched a new Bookish collection with beautiful designs.

For Some Bedroom Spice

Looking to spice things up? These adventure boxes can add more fun to the bedroom without creating additional mental work for you and your partner. An offshoot from the Adventure Challenge, “The Adventure Challenge … In Bed” scratch-off date book has 50 date ideas designed specifically to help facilitate fun and connection in the bedroom. The dates are categorized by activity type in sections like food, dancing, “sexploration,” and more. Each date is covered by a black box, with only icons indicating details such as duration, cost, and more. Meanwhile, the Fantasy Box is a date-night box service offering a range of themes, from sexy wine tasting to a kinky poker night, all designed to help couples communicate and connect more intimately. Before opening the box, each partner fills out a questionnaire of potential intimate acts, and the box comes with everything needed for a truly kinky night in: a satin blindfold, pleather paddle, lingerie, lube, massage gel, feather wand, mini vibrator, and silky wrist restraints. —Molly Higgins
