Tech

Europe’s fastest supercomputer to boost AI drive

Europe’s fastest supercomputer Jupiter is set to be inaugurated Friday in Germany with its operators hoping it can help the continent in everything from climate research to catching up in the artificial intelligence race.

Here is all you need to know about the system, which boasts the power of around one million smartphones.

What is the Jupiter supercomputer?

Based at Juelich Supercomputing Center in western Germany, it is Europe’s first “exascale” supercomputer—meaning it will be able to perform at least one quintillion (or one billion billion) calculations per second.
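For a sense of scale, the "exascale" figure can be checked with a few lines of arithmetic. The sketch below is illustrative only: the workload size is a made-up number, not something reported about Jupiter.

```python
# "Exascale" means at least one quintillion (10**18) calculations per second.
EXA = 10**18
BILLION = 10**9

# One quintillion is the same as "one billion billion".
assert EXA == BILLION * BILLION

# Hypothetical workload, chosen only for illustration (not from the article).
total_operations = 10**21

# Time needed at exactly one quintillion calculations per second.
seconds_at_exascale = total_operations / EXA
print(seconds_at_exascale)  # 1000.0
```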

The United States already has three such computers, all operated by the Department of Energy.

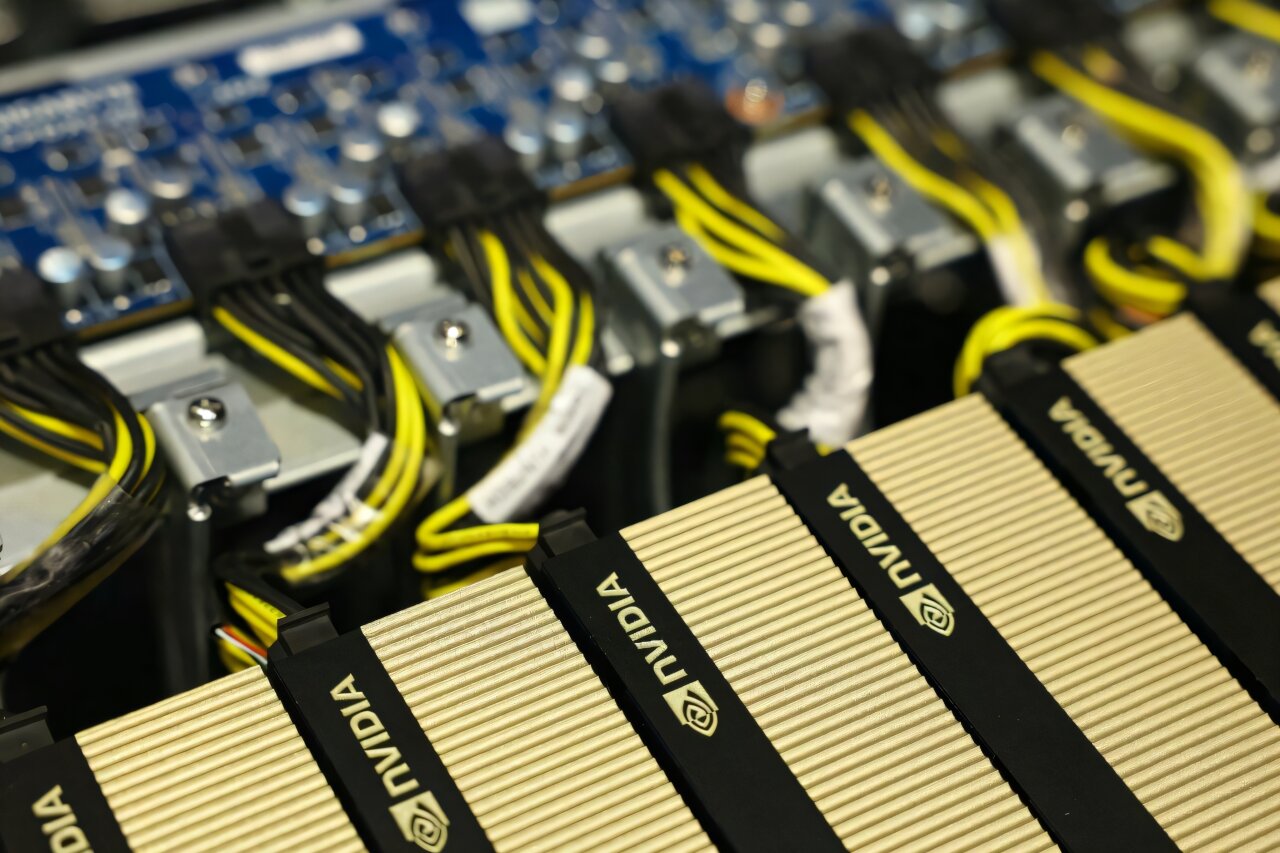

Jupiter is housed in a center covering some 3,600 square meters (38,000 square feet), about half the size of a football pitch, containing racks of processors and packed with about 24,000 Nvidia chips, which are favored by the AI industry.

Half the 500 million euros ($580 million) to develop and run the system over the next few years comes from the European Union and the rest from Germany.

Its vast computing power can be accessed by researchers across numerous fields as well as companies for purposes such as training AI models.

“Jupiter is a leap forward in the performance of computing in Europe,” Thomas Lippert, head of the Juelich center, told AFP, adding that it was 20 times more powerful than any other computer in Germany.

How can it help Europe in the AI race?

Lippert said Jupiter is the first supercomputer that could be considered internationally competitive for training AI models in Europe, which has lagged behind the US and China in the sector.

According to a Stanford University report released earlier this year, US-based institutions produced 40 “notable” AI models—meaning those regarded as particularly influential—in 2024, compared to 15 for China and just three for Europe.

“It is the biggest artificial intelligence machine in Europe,” Emmanuel Le Roux, head of advanced computing at Eviden, a subsidiary of French tech giant Atos, told AFP.

A consortium consisting of Eviden and German group ParTec built Jupiter.

Jose Maria Cela, senior researcher at the Barcelona Supercomputing Center, said the new system was “very significant” for efforts to train AI models in Europe.

“The larger the computer, the better the model that you develop with artificial intelligence,” he told AFP.

Large language models (LLMs) are trained on vast amounts of text and used in generative AI chatbots such as OpenAI’s ChatGPT and Google’s Gemini.

Nevertheless, packed full of Nvidia chips, Jupiter is still heavily reliant on US tech.

The dominance of the US tech sector has become a source of growing concern as US-Europe relations have soured.

What else can the computer be used for?

Jupiter has a wide range of other potential uses beyond training AI models.

Researchers want to use it to create more detailed, long-term climate forecasts that they hope can more accurately predict the likelihood of extreme weather events such as heat waves.

Le Roux said that current models can simulate climate change over the next decade.

“With Jupiter, scientists believe they will be able to forecast up to at least 30 years, and in some models, perhaps even up to 100 years,” he added.

Others hope to simulate processes in the brain more realistically, research that could be useful in areas such as developing drugs to combat diseases like Alzheimer’s.

It can also be used for research related to the energy transition, for instance by simulating air flows around wind turbines to optimize their design.

Does Jupiter consume a lot of energy?

Yes, Jupiter will require on average around 11 megawatts of power, according to estimates—equivalent to the energy used to power thousands of homes or a small industrial plant.
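The comparison with homes can be roughly reproduced from the 11-megawatt estimate. This is a back-of-the-envelope sketch: the per-household consumption figure is an assumption chosen for illustration and does not come from the article or the Juelich center.

```python
# Average power draw quoted in the article (an estimate).
AVERAGE_POWER_MW = 11
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

# Energy used over one year of continuous operation, in megawatt-hours.
annual_energy_mwh = AVERAGE_POWER_MW * HOURS_PER_YEAR

# ASSUMPTION (not from the article): a household uses about 3,500 kWh per year.
ASSUMED_HOUSEHOLD_KWH_PER_YEAR = 3_500

# Convert MWh to kWh, then divide by the assumed household consumption.
equivalent_homes = annual_energy_mwh * 1_000 / ASSUMED_HOUSEHOLD_KWH_PER_YEAR

print(annual_energy_mwh)        # 96360
print(round(equivalent_homes))  # 27531
```

Under that assumed household figure the result lands in the tens of thousands of homes, which is consistent with the article's "thousands of homes"; a different assumption would shift the number proportionally.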

But its operators insist that Jupiter is the most energy-efficient among the fastest computer systems in the world.

It uses the latest, most energy-efficient hardware, has water-cooling systems and the waste heat that it generates will be used to heat nearby buildings, according to the Juelich center.

© 2025 AFP

Citation: Europe’s fastest supercomputer to boost AI drive (2025, September 5), retrieved 5 September 2025 from https://techxplore.com/news/2025-09-europe-fastest-supercomputer-boost-ai.html



What It’s Like to Have a Brain Implant for 5 Years

Initially, Gorham used his brain-computer interface for single clicks, Oxley says. Then he moved on to multi-clicks and eventually sliding control, which is akin to turning up a volume knob. Now he can move a computer cursor, an example of 2D control—horizontal and vertical movements within a two-dimensional plane.

Over the years, Gorham has gotten to try out different devices using his implant. Zafar Faraz, a field clinical engineer for Synchron, says Gorham directly contributed to the development of Switch Control, a new accessibility feature Apple announced last year that allows brain-computer interface users to control iPhones, iPads, and the Vision Pro with their thoughts.

In a video demonstration shown at an Nvidia conference last year in San Jose, California, Gorham demonstrates using his implant to play music from a smart speaker, turn on a fan, adjust his lights, activate an automatic pet feeder, and run a robotic vacuum in his home in Melbourne, Australia.

“Rodney has been pushing the boundaries of what is possible,” Faraz says.

As a field clinical engineer, Faraz visits Gorham in his home twice a week to lead sessions on his brain-computer interface. It’s Faraz’s job to monitor the performance of the device, troubleshoot problems, and also learn the range of things that Gorham can and can’t do with it. Synchron relies on this data to improve the reliability and user-friendliness of its system.

In the years he’s been working with Gorham, the two have done a lot of experimenting to see what’s possible with the implant. Once, Faraz says, he had Gorham using two iPads side by side, switching between playing a game on one and listening to music on the other. Another time, Gorham played a computer game in which he had to grab blocks on a shelf. The game was tied to an actual robotic arm at the University of Melbourne, about six miles from Gorham’s home, that remotely moved real blocks in a lab.

Gorham, who was an IBM software salesman before he was diagnosed with ALS in 2016, has relished being such a key part of the development of the technology, his wife Caroline says.

“It fits Rodney’s set of life skills,” she says. “He spent 30 years in IT, talking to customers, finding out what they needed from their software, and then going back to the techos to actually develop what the customer needed. Now it’s sort of flipped around the other way.” After a session with Faraz, Gorham will often be smiling ear to ear.

Through field visits, the Synchron team realized it needed to change the setup of its system. Currently, a wire cable with a paddle on one end needs to sit on top of the user’s chest. The paddle collects the brain signals that are beamed through the chest and transmits them via the wire to an external unit that translates those signals into commands. In its second generation system, Synchron is removing that wire.

“If you have a wearable component where there’s a delicate communication layer, we learned that that’s a problem,” Oxley says. “With a paralyzed population, you have to depend on someone to come and modify the wearable components and make sure the link is working. That was a huge learning piece for us.”


Barkbox Offers: Themed Dog Toys, All-Natural Treats, and Subscription Deals

As my fellow pet parents will know, it’s amazing how quickly even the tiniest of dogs can demolish their toys and treat stash. We love and spoil them nonetheless. When you subscribe to BarkBox, a fresh batch of cleverly themed treats and toys arrives at your doorstep. The costs of pet ownership can stack up quickly, especially if you’re buying your pooch a random gift box that goes well beyond the essentials. That’s why we have Barkbox promo codes and discount options ready to go for you.

Barkbox Promo: Enjoy a Free Toy for a Year at Barkbox

When your monthly Barkbox arrives, it’s like Christmas morning for your dogs. I watch as my two dogs, Rosi and Randy, shake their little Chihuahua mix bodies with barely restrained excitement. They’re never gentle on their toys but the stimulation that comes from textures and chewing is good for their little brains. With Barkbox you get a steady supply of two unique toys and two bags of all-natural treats every month. If you want to see how your dogs react, this Barkbox coupon is good for new Barkbox subscription customers and adds an additional toy in your box every month for a year.

Save 50% on Your First Barkbox Food Subscription With a Barkbox Coupon Code

Another reason why Barkbox is the best dog subscription box is how easy the company makes it to keep your pantry stocked with your dog’s food. Use this Barkbox coupon to save 50% on your first Barkbox food subscription, so you won’t end up running out to the grocery store in the middle of the night when your scooper scrapes across the bottom of an empty kibble bin.

Travel Stress-Free With Your Dog and Get $300 Off BARK Air Flights

If you live in a Barkbox flight hub destination, please know I am insanely jealous of you. It’s no secret that flying is stressful and can be very dangerous for pets, especially if they have to ride in a cargo hold. Barkbox makes them the VIP with BARK Air, letting them ride in the cabin with you and get doted on, so things are a lot less scary. This is another perk of having a BarkBox subscription: the opportunity to save $300 on BARK Air flights.

Support Your Dog’s Dental Health and Get $10 Off With a Barkbox Coupon

Dental health is crucial for dogs, as it can prevent disease not just in their mouths but also in their vital organs. Don’t forget to schedule your yearly cleaning with your vet, but in the meantime, use this BarkBox discount code to get $10 off a special BarkBox Dental kit.

Get an Extra Premium Toy in Every BarkBox With the Extra Toy Club

For having such tiny mouths, my dogs can gnaw through toys with surprising speed. If you’re also buried in a pile of shredded fluff and squeakers from disemboweled toys, the Extra Toy Club can help. This subscription includes dog toys for aggressive chewers of all ages, breeds, and sizes, offering extra durable toys meant to last longer. So far, so good at my house. To upgrade to this subscription box, it’s an extra $9 per month.

Get Exclusive BarkBox Discounts: Join the Email List

If you assume that the punchy branding and witty lingo extend to Barkbox’s email subscribers and not just the box subscription, you’d be correct. As a bonus, you can get exclusive BarkBox discount codes when you sign up to receive these emails. And who doesn’t love a furry face and a reminder of their pet in between work subject lines and bill payment reminders?


Here’s Why Trump Posted About Iran ‘Stealing’ the 2020 Election Hours After the US Attacked

At 2:30 am Eastern time on Saturday, President Donald Trump posted a video to his Truth Social account announcing that the US had joined Israel in launching attacks on Iran.

His next post, just two hours later, appeared to suggest that the attacks were, at least in part, motivated by a wild claim that Iran had helped rig the 2020 US elections. “Iran tried to interfere in 2020, 2024 elections to stop Trump, and now faces renewed war with United States,” the president wrote on Truth Social.

The post linked to an article on Just the News, a conspiracy-filled, pro-Trump outlet that offered no explanation for its claim beyond the vague assertion that Iran operated “a sophisticated election influence effort” in 2020.

The White House did not respond to a request for comment on whether the alleged interference factored into the decision to attack Iran or what exactly the so-called interference amounted to.

Trump has spent the years since 2020 boosting numerous baseless conspiracy theories about the 2020 election being rigged. Since his return to the White House last year, he has empowered his administration to use those debunked conspiracy theories to inform decisionmaking, from election office raids in Fulton County, Georgia, to lawsuits over unredacted voter rolls.

It’s not exactly clear what supposed Iranian interference Trump was alluding to in his Truth Social post, but Patrick Byrne, a prominent conspiracy theorist who urged Trump to seize voting machines in the wake of the 2020 election, claims to WIRED that it is related to a broader conspiracy theory that also involves Venezuela and China.

Like most election-related conspiracy theories, this one is convoluted and based on no concrete evidence. In broad terms, the conspiracy theory, which first emerged in the weeks and months after the 2020 election and has grown more complex in the years since, claims that the Venezuelan government has been rigging elections across the globe for decades by creating the voting software company Smartmatic as a vehicle to remotely rig elections. (Smartmatic has repeatedly denied all allegations against it and successfully sued right-wing outlet Newsmax for promoting conspiracy theories and defaming the company.)

Byrne laid out the entire conspiracy theory in a 45-minute-long presentation posted to X in 2024. His claims have been widely shared within the election-denial community since it was posted.

Iran’s role in all of this, claims Byrne, was to hide the money trail. “They act as paymasters. They keep certain payments that would reveal this [operation] out of the banking system, out of the Swift system so you can’t see it,” claimed Byrne during this presentation. “It’s done through a transfer pricing mechanism run through Iran in oil.”

When asked for evidence of Iran’s role in this conspiracy theory, Byrne did not respond. In fact, none of Byrne’s claims have ever been verified, and most have been repeatedly debunked. Smartmatic did not immediately respond to a request to comment.

There have been two actual documented instances of Iranian election interference, however: In 2021, the Justice Department charged two Iranians with conducting an influence operation designed to target and threaten US voters. And in 2024, three Iranian hackers working for the government were charged with compromising the Trump campaign as part of an effort to disrupt the 2024 election.

Byrne’s allegations, however, have been wholly different. And while Byrne’s claims have been circulating among online conspiracy groups for years, they have been emailed directly to Trump in recent months by Peter Ticktin, a lawyer who has known Trump since they attended the New York Military Academy together. Ticktin also represents former Colorado election official turned election denial superstar Tina Peters.
