Tech

Interrupting encoder training in diffusion models enables more efficient generative AI

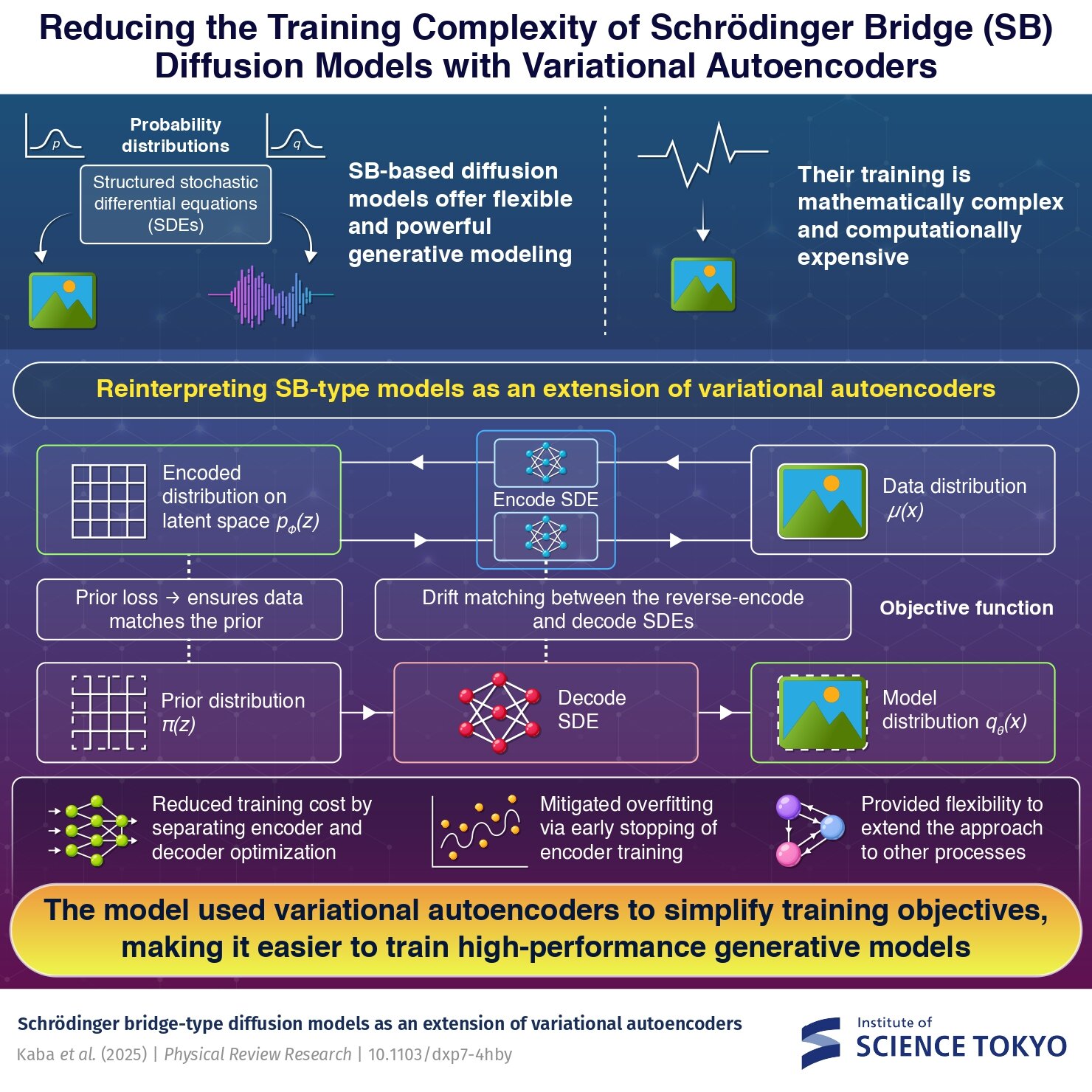

Researchers at Science Tokyo have developed a new framework for generative diffusion models that makes them more efficient to train. The method reinterprets Schrödinger bridge models as variational autoencoders with infinitely many latent variables, reducing computational costs and mitigating overfitting. By interrupting the training of the encoder at an appropriate point, the approach enables more efficient generative AI, with broad applicability beyond standard diffusion models.

Diffusion models are among the most widely used approaches in generative AI for creating images and audio. These models generate new data by gradually adding noise (noising) to real samples and then learning how to reverse that process (denoising) back into realistic data. A widely used variant, the score-based model, achieves this with a diffusion process that connects the prior distribution to the data over a sufficiently long time interval. This method has a limitation, however: when the data differ strongly from the prior, the noising and denoising processes must run over longer time intervals, which slows down sample generation.
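To make the noising idea concrete, here is a minimal sketch in Python. It assumes a one-dimensional Ornstein–Uhlenbeck SDE, dx = −x dt + √2 dW, as the forward (noising) process; its long-time distribution is a standard normal, which stands in for the prior here. This is a common textbook choice, not necessarily the process used by the Science Tokyo team. The SDE is simulated with the Euler–Maruyama method, with every sample starting at x = 5, far from the prior's mean of 0.

```python
import math
import random
from statistics import fmean, pvariance


def noise_samples(x0: float, n_samples: int, t_end: float, dt: float = 0.01,
                  seed: int = 0) -> list[float]:
    """Simulate the forward (noising) SDE dx = -x dt + sqrt(2) dW with Euler-Maruyama.

    The stationary distribution of this SDE is the standard normal N(0, 1), which
    plays the role of the prior in this illustration (an assumption).
    """
    rng = random.Random(seed)
    n_steps = int(round(t_end / dt))
    xs = [x0] * n_samples
    for _ in range(n_steps):
        # The drift term pulls every sample toward 0; the Gaussian increment spreads them out.
        xs = [x - x * dt + math.sqrt(2 * dt) * rng.gauss(0.0, 1.0) for x in xs]
    return xs


if __name__ == "__main__":
    # "Data" concentrated at x = 5, far from the prior's mean of 0.
    for t_end in (1.0, 5.0):
        xs = noise_samples(5.0, n_samples=2000, t_end=t_end)
        print(f"T={t_end}: mean={fmean(xs):.2f}, variance={pvariance(xs):.2f}")
```

With the short horizon (T = 1) the samples are still centered well away from 0; only with the longer horizon (T = 5) do their mean and variance come close to the prior's. That extra time is the slowdown the paragraph describes when data and prior differ strongly.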

Now, a research team from the Institute of Science Tokyo (Science Tokyo), Japan, has proposed a new framework for diffusion models that is faster and computationally less demanding. They achieved this by reinterpreting Schrödinger bridge (SB) models, a type of diffusion model, as variational autoencoders (VAEs).

The study was led by graduate student Mr. Kentaro Kaba and Professor Masayuki Ohzeki from the Department of Physics at Science Tokyo, in collaboration with Mr. Reo Shimizu (then a graduate student) and Associate Professor Yuki Sugiyama from the Graduate School of Information Sciences at Tohoku University, Japan. Their findings were published in Physical Review Research on September 3, 2025.

SB models offer greater flexibility than standard score-based models because they can connect any two probability distributions over a finite time using a stochastic differential equation (SDE). This supports more complex noising processes and higher-quality sample generation. The trade-off, however, is that SB models are mathematically complex and expensive to train.

The proposed method addresses this by reformulating SB models as VAEs with multiple latent variables. “The key insight lies in extending the number of latent variables from one to infinity, leveraging the data-processing inequality. This perspective enables us to interpret SB-type models within the framework of VAEs,” says Kaba.

In this setup, the encoder represents the forward process that maps real data onto a noisy latent space, while the decoder reverses the process to reconstruct realistic samples. Both processes are modeled as SDEs learned by neural networks.

The model employs a training objective with two components. The first is the prior loss, which ensures that the encoder correctly maps the data distribution to the prior distribution. The second is drift matching, which trains the decoder to mimic the dynamics of the reverse encoder process. Moreover, once the prior loss stabilizes, encoder training can be stopped early. This lets training finish faster, reducing the risk of overfitting while preserving the high accuracy of SB models.

“The objective function is composed of the prior loss and drift matching parts, which characterize the training of neural networks in the encoder and the decoder, respectively. Together, they reduce the computational cost of training SB-type models. It was demonstrated that interrupting the training of the encoder mitigated the challenge of overfitting,” explains Ohzeki.
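The interruption idea can be sketched as a training loop that keeps updating the decoder with drift matching but stops updating the encoder once the prior loss stops improving. This is a minimal sketch under stated assumptions: `encoder_step` and `decoder_step` are hypothetical callables standing in for one optimizer update of each network, and the plateau test (comparing the mean prior loss over two consecutive windows) is an illustrative stopping rule, not necessarily the criterion used in the paper.

```python
import math
from typing import Callable, List, Optional


def prior_loss_plateaued(history: List[float], window: int = 5,
                         rel_tol: float = 1e-2) -> bool:
    """Hypothetical plateau test: the mean prior loss over the latest `window`
    steps differs from the mean over the preceding `window` steps by at most
    `rel_tol` (relative to the earlier mean)."""
    if len(history) < 2 * window:
        return False
    previous = sum(history[-2 * window:-window]) / window
    latest = sum(history[-window:]) / window
    return abs(previous - latest) <= rel_tol * max(abs(previous), 1e-12)


def train_with_interrupted_encoder(
    num_steps: int,
    encoder_step: Callable[[], float],  # hypothetical: one encoder update, returns prior loss
    decoder_step: Callable[[], float],  # hypothetical: one decoder update, returns drift-matching loss
    window: int = 5,
    rel_tol: float = 1e-2,
) -> Optional[int]:
    """Train both networks, then freeze the encoder once the prior loss plateaus.

    Returns the step at which encoder training was interrupted, or None if it never was.
    """
    prior_history: List[float] = []
    frozen_at: Optional[int] = None
    for step in range(num_steps):
        if frozen_at is None:
            prior_history.append(encoder_step())
            if prior_loss_plateaued(prior_history, window, rel_tol):
                frozen_at = step  # the encoder receives no further updates after this step
        decoder_step()  # drift matching continues against the (possibly frozen) encoder
    return frozen_at


if __name__ == "__main__":
    calls = {"encoder": 0, "decoder": 0}

    def fake_encoder_step() -> float:
        # Simulated prior loss that decays toward a floor of 0.1.
        loss = 0.1 + math.exp(-0.2 * calls["encoder"])
        calls["encoder"] += 1
        return loss

    def fake_decoder_step() -> float:
        calls["decoder"] += 1
        return 0.0

    stop = train_with_interrupted_encoder(200, fake_encoder_step, fake_decoder_step)
    print("encoder frozen at step", stop, "after", calls["encoder"], "updates")
```

In this sketch only the encoder is frozen: the decoder keeps training for the full run, while the encoder stops consuming compute (and stops having the chance to overfit) once its loss has settled.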

This approach is flexible and can be applied to other probabilistic rule sets, even non-Markov processes, making it a broadly applicable training scheme.

More information:

Kentaro Kaba et al, Schrödinger bridge-type diffusion models as an extension of variational autoencoders, Physical Review Research (2025). DOI: 10.1103/dxp7-4hby

Citation:

Interrupting encoder training in diffusion models enables more efficient generative AI (2025, September 29) retrieved 29 September 2025 from https://techxplore.com/news/2025-09-encoder-diffusion-enables-efficient-generative.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.


DHS Wants a Single Search Engine to Flag Faces and Fingerprints Across Agencies

The Department of Homeland Security is moving to consolidate its face recognition and other biometric technologies into a single system capable of comparing faces, fingerprints, iris scans, and other identifiers collected across its enforcement agencies, according to records reviewed by WIRED.

The agency is asking private biometric contractors how to build a unified platform that would let employees search faces and fingerprints across large government databases already filled with biometrics gathered in different contexts. The goal is to connect components including Customs and Border Protection, Immigration and Customs Enforcement, the Transportation Security Administration, US Citizenship and Immigration Services, the Secret Service, and DHS headquarters, replacing a patchwork of tools that do not share data easily.

The system would support watchlisting, detention, or removal operations and comes as DHS is pushing biometric surveillance far beyond ports of entry and into the hands of intelligence units and masked agents operating hundreds of miles from the border.

The records show DHS is trying to buy a single “matching engine” that can take different kinds of biometrics—faces, fingerprints, iris scans, and more—and run them through the same backend, giving multiple DHS agencies one shared system. In theory, that means the platform would handle both identity checks and investigative searches.

For face recognition specifically, identity verification means the system compares one photo to a single stored record and returns a yes-or-no answer based on similarity. For investigations, it searches a large database and returns a ranked list of the closest-looking faces for a human to review instead of independently making a call.

Both types of searches come with real technical limits. In identity checks, the match threshold is stricter, so the system is less likely to wrongly flag an innocent person. It is, however, more likely to miss a true match when the submitted photo is slightly blurry, angled, or outdated. For investigative searches, the cutoff is considerably lower, and while the system is more likely to include the right person somewhere in the results, it also produces many more false positives that require human review.

The documents make clear that DHS wants control over how strict or permissive a match should be—depending on the context.
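The two search modes can be illustrated with a short Python sketch. It assumes each face has already been converted into a numeric template and uses cosine similarity as the match score; the thresholds (0.8 for one-to-one verification, 0.5 for investigative search) and the `top_k` cutoff are hypothetical values chosen for illustration, since real matchers use vendor-specific scores and calibrated thresholds. The adjustable `threshold` parameters stand in for the control over strictness described above.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Similarity score in [-1, 1]; higher means the templates are more alike."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def verify(probe: Sequence[float], enrolled: Sequence[float],
           threshold: float = 0.8) -> bool:
    """One-to-one identity check: a strict (hypothetical) threshold, yes-or-no answer."""
    return cosine_similarity(probe, enrolled) >= threshold


def search(probe: Sequence[float], gallery: Dict[str, Sequence[float]],
           threshold: float = 0.5, top_k: int = 5) -> List[Tuple[str, float]]:
    """One-to-many investigative search: a looser (hypothetical) threshold and a
    ranked candidate list meant for human review, not an automatic decision."""
    scored = [(record_id, cosine_similarity(probe, template))
              for record_id, template in gallery.items()]
    candidates = [item for item in scored if item[1] >= threshold]
    candidates.sort(key=lambda item: item[1], reverse=True)
    return candidates[:top_k]
```

Raising `threshold` in `verify` cuts false matches at the cost of more missed matches; lowering it in `search` does the reverse, which is why the ranked list still needs a human reviewer.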

The department also wants the system wired directly into its existing infrastructure. Contractors would be expected to connect the matcher to current biometric sensors, enrollment systems, and data repositories so information collected in one DHS component can be searched against records held by another.

It’s unclear how workable this is. Different DHS agencies have bought their biometric systems from different companies over many years. Each system turns a face or fingerprint into a string of numbers, but many of those numeric records work only with the specific software that created them.

In practice, this means a new department-wide search tool cannot simply “flip a switch” and make everything compatible. DHS would likely have to convert old records into a common format, rebuild them using a new algorithm, or create software bridges that translate between systems. All of these approaches take time and money, and each can affect speed and accuracy.

At the scale DHS is proposing—potentially billions of records—even small compatibility gaps can spiral into large problems.

The documents also contain a placeholder indicating DHS wants to incorporate voiceprint analysis, but they offer no detailed plans for how voiceprints would be collected, stored, or searched. The agency previously used voiceprints in its “Alternative to Detention” program, which allowed immigrants to remain in their communities but required them to submit to intensive monitoring, including GPS ankle trackers and routine check-ins that confirmed their identity using biometric voiceprints.


Metadata Exposes Authors of ICE’s ‘Mega’ Detention Center Plans

A PDF that Department of Homeland Security officials provided to New Hampshire governor Kelly Ayotte’s office about a new effort to build “mega” detention and processing centers across the United States contains embedded comments and metadata identifying the people who worked on it.

The seemingly accidental exposure of the identities of DHS personnel who crafted Immigration and Customs Enforcement’s mega detention center plan lands amid widespread public pushback against the expansion of ICE detention centers and the department’s brutal immigration enforcement tactics.

Metadata in the document, which concerns ICE’s “Detention Reengineering Initiative” (DRI), lists as its author Jonathan Florentino, the director of ICE’s Newark, New Jersey, Field Office of Enforcement and Removal Operations.

In a note embedded on top of an FAQ question, “What is the average length of stay for the aliens?” Tim Kaiser, the deputy chief of staff for US Citizenship and Immigration Services, asked David Venturella, a former GEO Group executive whom The Washington Post described as an adviser overseeing an ICE division that manages detention center contracts, to “Please confirm” that the average stay for the new mega detention centers would be 60 days.

Venturella replied in a note that remained visible on the published document, “Ideally, I’d like to see a 30-day average for the Mega Center but 60 is fine.”

DHS did not respond to a request for comment about what roles the three men play in the DRI project, nor did it answer questions about whether Florentino had access to a PDF processor subscription that might have enabled him to scrub metadata and comments from the PDF before sending it to the New Hampshire governor. (The so-called Department of Government Efficiency spent last year slashing the number of software licenses across the federal government.)

The document itself says that ICE intends to update a new detention model by the end of September of this year. ICE says it will create “an efficient detention network by reducing the total number of contracted detention facilities in use while increasing total bed capacity, enhancing custody management, and streamlining removal operations.”

“ICE’s surge hiring effort has resulted in the addition of 12,000 new law enforcement officers,” the DHS document says. “For ICE to sustain the anticipated increase in enforcement operations and arrests in 2026, an increase in detention capacity will be a necessary downstream requirement.”

ICE plans on having two types of facilities: regional processing centers that will hold between 1,000 and 1,500 detainees for an average stay of three to seven days, and the mega detention facilities, which will hold an average of 7,000 to 10,000 people for an average of 60 days. It’s been referred to as a “hub and spoke model,” where the smaller facilities will feed into the mega ones.

“ICE plans to activate all facilities by November 30, 2026, ensuring the timely expansion of detention capacity,” the document says.

Beyond detention centers, ICE plans to buy or lease offices and other facilities in more than 150 locations, in nearly every state in the US, according to documents first reported by WIRED.

The errant comment in the PDF sent to New Hampshire’s governor is not the only issue the set of documents apparently had; according to the New Hampshire Bulletin, a previous version of an accompanying document, an economic impact analysis of a processing site in Merrimack, New Hampshire, referenced “the Oklahoma economy” in the opening lines. The errant document remains on the governor’s website, as of publication.

Across the country, ICE’s mega detention center projects have sparked controversy. ICE’s purchase of a warehouse in Surprise, Arizona, spurred hundreds to attend a city council meeting on the topic, according to KJZZ in Phoenix. In Social Circle, Georgia, city officials have pushed back against DHS’s proposal to build a mega center there, because officials say the city’s water and sewage treatment infrastructure would not be able to handle the influx of people.


We Used Particle Analysis (And Lots of Sipping) to Find the Best Coffee Grinders


Best Budget Coffee Grinders

As mentioned above, the best bang for your buck will always be a hand grinder like my favorite, the Kingrinder K6 manual coffee grinder ($100). A precisely machined manual coffee grinder can rival coffee grinders many hundreds of dollars more expensive, both in precision and durability. And so the best manual coffee grinder will also be the budget option that’ll lead to the best coffee. I’ve personally come to love the routine, and the control.

But I get it. You’ll happily grind your pepper with the best pepper grinder, but you draw the line at grinding coffee. Mornings are hard. Electricity helps. These are the budgetiest of budget electric coffee grinder options for each style of brew, all blessedly hands-off. None of these will lead to the clarity of flavors or sweetness or delicacy of our top picks. But they’re the absolute lowest-cost devices we recommend for each category of brew.

Best Budget Coffee Grinder for Drip Coffee

Just when you thought Oxo had already cornered the market on affordable conical burr coffee grinders, they came in at an even lower price with this compact model. This lower-cost compact Oxo Brew is stacked like a wee layer cake. And so the grind cup is housed within the column of the device itself, and can be pulled out when you’re done grinding. But while this is quite clever, neither consistency of grind nor ease of use is quite on par with Oxo’s $110 basic conical burr, which remains my pick for an entry-level coffee grinder. But it’s also very easy to move from the cabinet to the counter, and $30 less is $30 less. This is the lowest-price electric grinder I could actually recommend for Aeropress, drip, pour-over, French press, or cold brew. I wouldn’t attempt espresso, though.

Best Budget Coffee Grinder for Espresso

I’m continuing to test this, but for the moment, the lowest-cost electric espresso-capable grinder I can recommend with a clean conscience is the Wirsh Geimori T38 Plus for $130. This portable conical-burr grinder is about the size of a Christmas nutcracker, and looks alarmingly like Pinocchio’s left leg. But it offers surprisingly low coffee retention, stepless grind adjustments, and far better precision than expected for a grinder of its price. It achieves this by grinding at low rpms, meaning it grinds quite slowly and carefully for an electric grinder. This also means the T38 Plus takes more than 30 seconds to grind enough beans for a double shot of espresso. This is disqualifyingly slow for batches large enough for drip or French press, and the T38 doesn’t really have the clarity you want for pour-over. Still, it might be the only electric grinder I’ve tested south of $150 that can make decent espresso on non-pressurized baskets. It’s also wee, and great for small kitchens or as a travel coffee grinder. It’s the grinder I’d definitely take with me to a hotel room if I didn’t feel like grinding coffee by hand.

Best Coffee Grinder for $50 or Less

Look, blade grinders like this KitchenAid won’t offer the powdery fineness and full-bodied coffee pleasures of a great conical burr, nor the precision of WIRED’s top flat burr pick. Blade grinders chop the heck out of beans, offering an uneven grind. But this is a very affordable coffee grinder, it’s simple as pancakes to use, and blade-ground fresh beans are still a little better than the stuff in the supermarket. That said, they’re probably still worse than getting beans fresh-ground at a cafe and using them within a week. When non-bean-geek friends ask for a grinder that costs less than dinner for two at Arby’s, this is the one I offer up, especially if they’re using darker roasts and favor French press or a less expressive drip coffee maker. At the very least, it’s enough so you’re not crippled when you get whole-bean coffee as a gift. But let’s be clear: The $75 Oxo Compact burr grinder above is about five times as good for $25 more.

Results of Particle Size Analysis of Coffee Grinders


I of course assessed coffee grinders by tasting the resulting coffee, across a number of brew styles and beans. But I also backed up my taste buds with scientific instruments, analyzing the grind consistency of each of WIRED’s top burr coffee grinder picks with a particle size analyzer called the DiFluid Omni to help explain the flavor profile each one produced.

Specifically, I tested each of WIRED’s top burr grinders on multiple grind settings using the same medium-grind coffee beans, at both espresso-fine grinds and medium grinds more suitable for drip or pour-over coffee. Since September 2025, I also test every grinder I review or consider as a top pick, including the Moccamaster KM5, whose results are included here. I tested each grind sample at least five times, collating the results into a characteristic curve for that bean and grinder.


Particle Size Analysis of Top Coffee Grinder Picks

Particle size analysis of coffee grinds is not a cut-and-dried test: It’s more a clue as to the probable character of a brew. Patterns begin to emerge that correlate with the experiences I’ve had tasting coffee from each grinder. Taste is the ultimate test, alongside consistency of finicky espresso pulls. But quantitative analysis helps me (and you) actually trust and maybe understand those sensory test results.

When looking at the bar graph curves below, keep a few rules of thumb in mind. Big boulders north of a thousand microns will often lead to muddier character. Too many fines below 100 microns might lead to bitterness. A tight particle size distribution is associated with greater clarity of flavor. Look at the standard deviation (SD) for a clue as to overall precision: Smaller numbers likely indicate greater clarity. That said, a broad distribution of coffee ground sizes can also lead to better body and more perceived sweetness.
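These rules of thumb translate into a few summary numbers. The Python sketch below assumes the analyzer's output can be exported as a list of particle diameters in microns (a hypothetical input format; the DiFluid Omni's actual export is not described here) and computes the share of fines under 100 microns, the share of boulders over 1,000 microns, the standard deviation, and the share of particles in the most populated size bin. The 50-micron bin width is an arbitrary illustrative choice, and every particle is weighted equally by count, whereas real analyzers may report distributions by volume or mass.

```python
from collections import Counter
from statistics import pstdev
from typing import Dict, Sequence

FINES_UM = 100.0      # fines: particles smaller than 100 microns
BOULDERS_UM = 1000.0  # boulders: particles larger than 1,000 microns


def grind_summary(diameters_um: Sequence[float],
                  bin_width_um: float = 50.0) -> Dict[str, float]:
    """Summarize a grind from per-particle diameters in microns (count-weighted)."""
    n = len(diameters_um)
    if n == 0:
        raise ValueError("need at least one particle")
    # Group particles into fixed-width size bins (hypothetical 50-micron default).
    bins = Counter(int(d // bin_width_um) for d in diameters_um)
    return {
        "fines_share": sum(d < FINES_UM for d in diameters_um) / n,
        "boulders_share": sum(d > BOULDERS_UM for d in diameters_um) / n,
        "sd_um": pstdev(diameters_um),
        # Share of particles in the single most populated bin: a taller peak
        # means a tighter, more uniform grind.
        "peak_bin_share": max(bins.values()) / n,
    }
```

Read this way, a lower `sd_um` and a higher `peak_bin_share` point toward a tighter grind and more clarity, while larger `fines_share` and `boulders_share` values flag the bitterness and muddiness risks in the rules of thumb.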

Our top pick for most people, the Baratza Encore ESP, proved itself to have quite precise results at very fine grinds—with standard deviation below 200 microns on espresso grinds, and 30 percent of particles concentrated within a single range. At its price range, this is admirable precision matched by very few grinders.


The same wasn’t as true at pour-over coffee settings for the Baratza Encore ESP (seen here at setting 22), which showed a broader and more heterogeneous particle size distribution—with both small and large particle sizes. In practice, this led to a full-bodied and rounder cup, but with a little bit less of the precise aromatics one can get from our favorite grinder for drip, the Fellow Ode Gen 2.


The Ode showed a characteristic bell-curve shape, centered on a single high peak, which corresponded with the precise aromatics I taste when brewing drip or pour-over coffee using the Ode.

Even greater precision was on display with the Technivorm Moccamaster KM5, a flat burr grinder that showed precise results across the board—rivaling the Encore ESP at fine grinds and the Ode Gen 2 at grinding for drip. It’s not as user-friendly as some of the top-pick devices, and the resulting brews can sometimes feel clinically clean, with a thinner body. But my lord it does offer clarity.


The Kingrinder K6 hand grinder, our top manual grinder pick, also showed strong peaks at grind sizes appropriate for pour-over coffee. Shown here are analyses of two medium-fine grinds, at 60 and 70 clicks from zero, respectively. Hand grinders have a secret weapon: They make you grind slowly, which works very well at coaxing more clarity from conical burrs. For pour-over grinds, the Kingrinder showed a higher peak than basically any grinder I tested, meaning grinds are very concentrated in a tight range of sizes: as many as 40 percent of coffee grounds were functionally the same size, and about 70 percent were grouped tightly around this. This leads to quite pronounced, intense flavor notes.


At grind sizes suitable for espresso, the Mazzer Philos bests this precision, with more than 90 percent of coffee grounds huddled in a tight grouping while using the i200D burr set. Nonetheless, while boulders are all but nonexistent, enough coffee fines exist to give each shot an almost syrupy consistency. The result is both body and perceived sweetness, with a surprisingly delicate clarity. While I haven’t tested the i189D burrs also available as an option, other reports suggest that the 189Ds lean even harder into clarity of flavors. But note that at bigger grind sizes more suitable for drip coffee, you’ll get a quite broad distribution. This will lead to a well-rounded cup, but may not offer the clarity of flavor of the Fellow Ode Gen 2 or the Kingrinder K6 for drip and pour-over brews.


Frequently Asked Questions

How We Test Coffee Grinders

WIRED tests coffee grinders by grinding a lot of beans, and making a lot of coffee—testing each grinder to see if it can serve well for espresso, Aeropress, drip or pour-over coffee, and coarse-ground cold brew and French Press. I tend to always grind a drip Stumptown Homestead or Single-Origin Colombia as a baseline, because each is readily available at my local supermarket with stamped roast dates, and because I know the flavor well enough I can detect variations. But I’ll also try out a number of flavors and roasts on each grinder, for different brewing methods.


We assess each grinder for decibel level while grinding, ease of cleaning and operation, hopper design, the presence or absence of “popcorning” (where the beans pop around inside the hopper, often leading to more uneven results), messiness and static electrical buildup, grind retention, ease of use, value, and simple aesthetics.

Previous WIRED reviewers assessed grind uniformity visually with the aid of macro lenses, or filtered coffee grounds with sieves. In the most recent round of testing, I re-assessed each top coffee grinder pick using particle grind size analysis, with the help of the DiFluid Omni roast color and particle size analyzer, as well as a data analysis app that’s still in beta testing.

I tested both fine and medium grinds on each grinder, using the same beans for each grinder, roasted within a month of testing. I repeated the particle analysis at least five times for each grinder and setting. I assessed the uniformity of the grind and the overall distribution of particle sizes—paying particular attention to the share of coffee fines (the tiniest particles smaller than 100 microns) and boulders (big coffee bits larger than 1000 microns).
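Repeating each measurement and averaging smooths out run-to-run noise from sampling a small pinch of grounds. The minimal Python sketch below shows one way several runs might be collated into a characteristic curve. It assumes each run is exported as a percentage of particles per size bin, with every run sharing the same bin labels (a hypothetical format), averages each bin across runs, and also reports the spread between runs so that unstable bins stand out.

```python
from statistics import fmean, pstdev
from typing import Dict, List, Tuple

Histogram = Dict[str, float]  # hypothetical format: size-bin label -> percent of particles


def characteristic_curve(runs: List[Histogram]) -> Dict[str, Tuple[float, float]]:
    """Average repeated particle-size runs bin by bin.

    Returns, for each bin, (mean percent across runs, population SD across runs).
    All runs must share the same bin labels.
    """
    if not runs:
        raise ValueError("need at least one run")
    bins = list(runs[0])
    for run in runs[1:]:
        if set(run) != set(bins):
            raise ValueError("all runs must use the same size bins")
    curve: Dict[str, Tuple[float, float]] = {}
    for b in bins:
        values = [run[b] for run in runs]
        curve[b] = (fmean(values), pstdev(values))
    return curve
```

A bin whose between-run SD is large relative to its mean is one where a single measurement would have been misleading, which is the point of repeating each test at least five times.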

Why Grind Whole Beans Instead of Buying Pre-Ground?

The reasons are simple: Flavor. Freshness. Aroma.

Whenever you open a vacuum-sealed bag of beans, a little invisible clock starts. Oxidation begins to erode the character of your beans, breaking down organic compounds and degrading them, turning your lovely beans to cardboard. Aromatic flavor compounds also escape from the bean, gassing out into the air where they do no particular good.

When you grind your beans, these processes go into overdrive. Freshness for whole beans can be measured in weeks. For ground beans, freshness in the open air is a matter of hours or even minutes. That bag of pre-ground beans you got from the supermarket? It’s still coffee, of course, and it’ll taste like coffee. But the vibrancy is gone. As far as true freshness is concerned, that coffee’s been dead for weeks. (Pre-ground beans can be kept airtight for a week or so and maintain their flavor, if you get them ground fresh at a coffee roaster.)


The only reliable way to get truly excellent flavor from your coffee beans, the way you experience it at a café, is to use fresh, whole beans. This is also how you can exercise some control over extraction, and dial in your brewer or espresso maker to get the perfect results for each bean.

Espresso requires a fine grind, pour-over a little coarser, electric drip coffee a little coarser than this. Each grinder should have a guide to the best adjustments for each brewing method. Lighter-roast beans will want a finer grind than dark-roast, to aid in extraction: porous dark-roast beans give up their secrets a lot easier.

It’s all kinda fun to figure out, if you let it be fun. But certainly, when you strike paydirt, you’ll know it: Finding the right marriage of grind and bean, on a good grinder, can turn into the best cup you’ve ever had. It’s like the magical first time you seared a perfect steak, or baked a perfect layer cake. Effort meets reward. It’s marvelous. The grinders in this guide will help you find that moment more often.

What Is a Conical, Flat, or Blade Grinder?


Most coffee grinders fall into three main types: Conical-burr, flat-burr, and blade grinders. Burr grinders are generally higher quality, and higher cost.

Conical-burr grinders are the category occupied by our top pick, the Baratza Encore ESP, and pretty much all of the most affordable grinders that still make good coffee. And there’s a reason for this: Conical tends to offer the sweet spot at the intersection of high performance, cost, and flexibility. In a conical grinder, coffee beans are crushed and ground between two rings of burrs. They deliver a finer, much more consistent grind than you’d get with a traditional blade grinder, even the nicest blade grinder you ever met. Conicals do tend to throw off more fines than a flat burr, but many feel this leads to more body and a more rounded flavor character.

Flat-burr grinders are more precise than conical grinders, but they’re also typically more finicky and also more expensive. Burrs are laid on top of each other, and the beans pass through them as they grind. The grinder action pushes the grounds out of one end, instead of relying on gravity like a conical-burr grinder, which means the beans spend more time in contact with the burrs. This results in a more consistent grind, down to the micron in some cases, which leads to very precise flavors. For this reason, flat-burr grinders are often preferred as a way to elicit very precise flavors from single-origin beans for pour-over, drip, and Aeropress.

Blade grinders have a chopping blade that spins around like a food processor. But blades don’t produce even results. Some of your coffee will be fine powder at the bottom, and at the top you’ll have bits too large for even French press. The result is an inconsistent, unpredictable brew. These grinders are generally quite cheap.

But in case you’re wondering, using fresh beans in a blade grinder is still way better than buying ground coffee. (You can learn how to shake the beans to even your grind just a little. Pulsing the machine often also works. See world barista champion James Hoffmann’s video for some more blade grinder hacks.) Still, if you can afford it, the conical or flat-burr grinders on this list will lead to far better coffee than any blade.

What’s the Difference Between a Cheap and Expensive Burr Grinder?

The machinery in a high-quality burr grinder is a bit more complicated, and it’s built to withstand greater wear and tear. In cheap burr grinders, the burrs can get blunt from regular use, and flimsier motors may burn out in a matter of months.

But also, coffee grinders have undergone a revolution in technology and consideration in the past decade. Manufacturers have been experimenting with different shapes of burr even on conical burr grinders—pentagonal, hexagonal, heptagonal. And grinders with more precision cuts will cost more money.

Flat burrs also cost more money to manufacture, and are seen as having more precision. The true geeks are swapping out to new generations of flat burr that offer greater precision in machining, and multistage grinds. Grinder makers are experimenting with larger and smaller burrs, and different materials. It’s a hive of invention out there. And these precision parts cost money: Some burr sets might cost hundreds all by themselves.

The end result of all this attention is a greater range or finer adjustment of grind sizes, better and more reliable calibration, and often more precision in the resulting coffee grinds—and thus more precision in the flavor of your coffee or the brew of your espresso.

Can I Run Pre-Ground Beans Through My Burr Grinder to Get Better Coffee?

No, please don’t do this.

First off, if you’re trying to improve the flavor of store-bought beans, the game’s already lost. One of the main reasons to use fresh-ground whole beans is to avoid oxidation, and pre-ground beans have already been cardboarded up by evil, stale air.

But also, you’ll mostly just muck up your machine. Logically, it might make some sense. Your grind is too coarse, so let’s just run them through again at a finer setting, and perfect coffee results! Alas, on burr grinders, pre-ground coffee will get stuck inside the burrs, gum them up, and cause you to have to take the whole thing apart and clean it with your little brush and put it back together.

What Are the Best Coffee Grinders for Espresso?

Quite simply, the best coffee grinders for espresso are the ones that offer the finest calibration at the “fine” end of the spectrum. If you want to get super specific, look for grinders that offer many small adjustment steps within that espresso range.

Dialing in individual espresso beans can require quite fine adjustments—and so even if a grinder is technically able to grind fine enough for espresso, it should also be able to make precise enough adjustments within that range to account for different beans, roasts, and machines. (For a real-world counterexample, witness the wall WIRED reviewer Joe Ray ran into when trying to get the (excellent) Wilfa Uniform Coffee Grinder to work for espresso. Without fine adjustments, chances are you’ll fail.)

Otherwise, what you’re looking for is excellent build, a motor that can withstand the higher torque you’ll need to grind finely even on lighter roasts, and a machine that deals well with static electricity: Finer espresso grinds can turn static into a terrible enemy, sending coffee grounds spraying wildly.

The most vaunted espresso grinders can travel upwards into the high hundreds of dollars (see the Timemore Sculptor 064S flat-burr) or the thousands of dollars (see the Zerno Z1).

The Mazzer Philos Coffee Grinder ($1,500) offered maybe the best shots of espresso I’ve pulled at home in the past year. This offers delicate flavor and syrupy shots like the ones you’ll get from a cafe, even on lower-cost espresso machines. (See my full review of the Mazzer Philos.)

But in this guide, we focused mostly on the best espresso grinders for the 90-some percent of people who are trying to gain access to good coffee without spending four figures. For most people and most budgets, our top pick, the Baratza Encore ESP ($200), will be the best choice, with sturdy construction and 30 grind adjustments for espresso alone. If you don’t mind a little elbow grease, you can tune your grinds even finer by using a manual coffee grinder like the Kingrinder K6 ($99).

And then there’s the true budget electric option. The tiny, slow-grinding Wirsh Geimori T38 Plus ($130) is the lowest-cost electric espresso grinder I’ve tried that can actually make good espresso on non-pressurized baskets, though I’d probably limit it to medium roasts or darker, lest you strain the machine. Torque is not its strong suit.

Honorable Mentions and Runners-Up

More Excellent Conical-burr All-rounders:

Fellow Opus for $200: The Fellow Opus is our previous top grinder pick. And it’s forever bound to be compared with our current top pick, the Baratza Encore ESP—a yang and yin among excellent $200 grinders that has caused oddly intense arguments on the WIRED Reviews team about which one’s better. The Opus comes out ahead in simple beauty, a mid-century stylishness that keeps it welcome on your counter. The Opus is among the quietest grinders I’ve tested, about half as loud as most picks on our list. But it’s not as easy to adjust and tune for espresso as our top pick all-rounder, the Encore ESP, and it retains more coffee grounds. And for truly excellent drip, I’d upgrade to the flat-burr Fellow Ode Gen 2 or the Moccamaster KM5 (below).

Baratza Encore for $150: Baratza’s original Encore is the Honda of the conical burr grinder world: easy to maintain, runs great, easy to use, lasts forever, replacement parts easy to find. It’s been on the market largely unchanged for more than a decade. For not much more money, though, our top-pick Encore ESP offers beautiful adjustment on espresso settings, so I tend to recommend paying an extra $50 for the added versatility. But the original Encore remains a solid entry-level choice.

Baratza Virtuoso+ for $250: The Virtuoso+ uses the same burr set as the ESP but is not quite as optimized for espresso. The biggest upgrade over the Encore ESP is a timer. Both have similar rock-solid but compact builds (although the Virtuoso is a little more stylish with its fitted grounds bin), 40 grind settings, and burrs that produce consistent grounds. The Virtuoso’s digital timer, however, is great for those wanting a consistent dose of grounds each morning. You’ll have to dial in your grind time against the amount of coffee it puts out, but once you figure that out, you can walk away from the grinder and multitask if you please. —Tyler Shane

Oxo Brew Conical Burr Grinder With Scale for $299: Making great coffee consistently is all about measuring your variables, and this Oxo model comes with a built-in scale. Set your grind size, select the weight you want, hit Start, and walk away; it shuts itself off when it’s done. This is a great way to streamline your morning ritual, but the device does spray a few grounds, and in its price range we tend to prefer the Fellow Opus or Baratza ESP as an all-rounder, or the bare-bones Oxo as a budget pick.

KitchenAid Burr Grinder for $200: This KitchenAid is stylish and easy to clean, and former WIRED reviewer Jaina Grey likes that the burrs are accessible thanks to their placement directly beneath the hopper. It also features precise dose control, with grind size controlled by a dial. For espresso lovers, one excellent feature is that you can swap the little container that catches the grounds with a holder for a portafilter.

Excellent flat burr coffee grinders for drip and pour-over:

Photograph: Matthew Korfhage

Technivorm Moccamaster KM5 Flat Burr Grinder for $329: OK, so this two-year-old Moccamaster sneaked up on me, in part because reviewers for other outlets have assumed that this Moccamaster is a rebuild of the Eureka Filtro (below), based on somewhat similar looks. Moccamaster reps assure me this is not the case. And it turns out the performance on this stepless (read: infinite adjustment) grinder is somewhere between good and damn good. The razor-thin grind size distribution in early testing makes the KM5 a credible rival to the similarly priced Fellow Ode, in fact. And like the Ode, this Moccamaster is made especially for bringing out precise flavors on drip and pour-over. Particle analysis suggests this Moccamaster may offer even more precise grinds, leading to an almost clinically clean brew with very light body. The KM5 is not overly user-friendly, mind you: It cranks at 90 decibels, you have to hold down its analog switch to grind, and its aesthetics are the same sturdy industrial chic as all Moccamasters. Indeed, it’s designed to sit alongside the classic drip coffee maker that’s been on our buy-it-for-life guide since we’ve had one. If you prefer clarity to ease of use, this gives the Ode a run for its money, for less money.

Eureka Mignon Filtro for $269: The precision on flat burrs is terrific. Usually, so is the price. But this no-frills Filtro from beloved Italian coffee brand Eureka costs $80 less than our top-pick flat-burr, and it’s an absolute metal-clad tank of a machine, says former WIRED reviewer Jaina Grey. It’s as robust as the higher-end models and offers excellent consistency of grind size. Sure, it’s a little loud, and you have to hold the button down when you grind. But life is full of trade-offs.

Wilfa Uniform for $349: This Wilfa has long been on our list as a great flat-burr grinder for pour-overs and drip. It remains one, though the Ode leapfrogged it to become the top pick with its Gen 2 burr update, at about the same price. As its name suggests, the Wilfa offers a beautifully consistent grind size and will make you a lovely pour-over. That said, it’s fussier to adjust and louder than the Ode.

Courtesy of Breville

Breville Smart Grinder Pro for $200: WIRED has recommended this Breville in the past for its accessible burrs that make it easy to clean. But it’s not really optimized for lighter-roast espresso, and ever since Breville bought Baratza, it has slowly been swapping out the grinders in its top-line semi-automatic espresso machines for those excellent Baratza burrs. If you’re buying a stand-alone grinder at the same price, we’d suggest you do the same.

Baratza Vario W+ for $600: The Encore has a bigger, beefier, flat-burr cousin, the Baratza Vario W+ (7/10, WIRED Recommends), with a built-in scale and ridiculously granular adjustment (230 settings!). But like a lot of flat burrs, it struggles on finer grinds, according to WIRED contributor Joe Ray. And static is an issue. With price in play, the Ode Gen 2 comes out on top, but Ray was still a big fan of the Vario.

Best coffee grinder for travel and camping:

Courtesy of VSSL

VSSL Java manual grinder for $170: VSSL specializes in ultra-durable camping tools, and it applied that same construction to this hardy, campsite-ready hand grinder, which WIRED reviewer Scott Gilbertson attests is rugged enough to survive the zombie apocalypse. The handle folds out to provide a lot of leverage while you grind, and you can use it as a hook to hang the device up when you’re done.

Also Tested

Photograph: Matthew Korfhage

Wirsh Geimori GU38 for $200: The GU38 grinder from Wirsh/Geimori uses an identical burr set to the T38 Plus model I recommend as a budget espresso grinder. It’s also bulkier, and built a little sturdier. But the angled hopper causes more coffee retention, including some coffee beans that just refuse to feed into the grinder. Performance also seems slightly less reliable than the T38 Plus, perhaps because the GU38 grinds faster. Either way, I’d opt for the lower-cost T38 Plus over this quite similar model.

Aarke Flat-Burr Grinder for $400: This pretty, shiny, stainless steel Aarke grinder has a unique feature when paired with Aarke’s coffee brewer: It detects the water in the brewer’s tank and grinds the appropriate amount of beans. But this feature wasn’t as well calibrated as we’d like, and there have been a lot of online reports of grinder jams. I didn’t have the same problem, but at more than $300 for a grinder that hasn’t been on the market long, prudence is often rewarded.

Hario Skerton Pro for $55: The Hario Skerton was the gateway hand grinder for many a coffee nerd, but it has since given ground to newer entrants. It’s fast and cheap, but it’ll give you a heck of a workout and isn’t as consistent for coarse grinds, plus the silicone handle has a habit of falling off.

Courtesy of Amazon

Hario Mini-Slim Plus for $39: This smaller Hario manual grinder is slower than the Skerton, but its plastic construction makes it good to throw in a travel bag. The low price is its main advertisement.

Cuisinart Burr Grinder for $75: At first, it seems like a good deal. It’s Cuisinart, a known brand, and a conical burr grinder for less than $100! But former WIRED reviewer Jaina Grey found that the low price came with a cost: These things apparently burn out faster than a rock star in the late ’60s.

Bodum Bistro Electric Blade Grinder for $20: This little blade grinder is quite cheap, and the model has served WIRED contributing reviewer Tyler Shane for years. That said, after some inconsistent reports on reliability, we favor the KitchenAid as our ultra-budget pick.

DmofwHi Cordless Grinder for $40: We used to recommend this cordless blade grinder for camping, largely because it can make 15 pots of French press without need of a recharge. It’s out of stock as of February 2026, and we’re monitoring to see whether it returns.
