Tech

Tiny explosions and soft materials make onscreen braille more robust

From texting on a smart phone to ordering train tickets at a kiosk, touch screens are ubiquitous and, in most cases, relatively reliable. But for people who are blind or visually impaired and use electronic braille devices, the technology can be vulnerable to the elements, easily broken or clogged by dirt, and difficult to repair.

By combining the design principles and materials of soft robotics with microscale combustions, Cornell researchers have now created a high-resolution electronic tactile display that is more robust than other haptic braille systems and can operate in messy, unpredictable environments.

The technology also has potential applications in teleoperation and automation, and could bring more tactile experiences to virtual reality.

The research is published in Science Robotics. The paper’s co-first authors are Ronald Heisser, Ph.D. ’23, and postdoctoral researcher Khoi Ly.

“The central premise of this work is two-fold: using energy stored in fluid to reduce the complexity of mass transport, and then thermal control of pressure to remove the requirements of complex valving,” said Rob Shepherd, the John F. Carr Professor of Mechanical Engineering in Cornell Engineering and the paper’s senior author.

“Very small amounts of combustible fuel allow us to create high-pressure actuation for tactile feedback wherever we like using small fluid channels, and cooling the gas during the reaction means this pressure stays localized and does not create pressure where we do not want it,” he said. “This chemical and thermal approach to tactile feedback solves the long-standing ‘Holy Braille’ challenge.”
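
The thermal half of Shepherd’s premise rests on a textbook relation. As a simplified illustration (an ideal-gas idealization chosen here, not the authors’ model of the combustion), when the volume V and the amount of gas n are held fixed, absolute pressure is proportional to absolute temperature, so gas that is cooled after the reaction loses pressure in the same ratio:

```latex
PV = nRT \quad\Longrightarrow\quad \frac{P_2}{P_1} = \frac{T_2}{T_1} \qquad (V,\ n\ \text{held fixed})
```

With purely illustrative temperatures, gas cooled from 1,500 K to 300 K at constant volume would retain one-fifth of its pressure.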

The majority of refreshable electronic tactile displays contain dozens of tiny, intricate components in a single braille cell, which has six raised dots. Considering that a page of braille can hold upwards of 6,000 dots, that adds up to a lot of moving parts, all at risk of being jostled or damaged. Also, most refreshable displays only have a single line of braille, with a maximum of roughly 40 characters, which can be extremely limiting for readers, according to Heisser.

“Now people want to have multi-line displays so you can show pictures, or if you want to edit a spreadsheet or write computer code and read it back in braille,” he said.
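
The figures above translate directly into actuator counts: upwards of 6,000 dots at six dots per cell is roughly 1,000 cells, whereas a 40-character single-line display drives only 240 dots. To make the multi-line idea concrete, here is a minimal sketch that renders lowercase text as uncontracted (Grade 1) braille onto a grid of on/off dots. The function names and the gap-free layout (cells packed edge to edge, lines stacked directly) are hypothetical choices for illustration, not the layout of the Cornell display.

```python
# Hypothetical sketch: render lowercase text as uncontracted (Grade 1) braille
# onto a multi-line grid of on/off dot actuators. The gap-free layout is an
# illustrative assumption, not the Cornell display's actual geometry.

# Dots 1-2-3 run down the left column of a cell, dots 4-5-6 down the right.
DOT_POSITION = {1: (0, 0), 2: (1, 0), 3: (2, 0), 4: (0, 1), 5: (1, 1), 6: (2, 1)}

# Letters a-j use only the top four dots (1, 2, 4, 5).
A_TO_J = {
    "a": {1}, "b": {1, 2}, "c": {1, 4}, "d": {1, 4, 5}, "e": {1, 5},
    "f": {1, 2, 4}, "g": {1, 2, 4, 5}, "h": {1, 2, 5}, "i": {2, 4}, "j": {2, 4, 5},
}
ORDER = "abcdefghij"


def letter_dots(ch: str) -> set[int]:
    """Return the raised dots (1-6) for a lowercase letter or a space."""
    if ch == " ":
        return set()
    if ch == "w":  # w is irregular: dots 2-4-5-6
        return {2, 4, 5, 6}
    if ch in A_TO_J:
        return set(A_TO_J[ch])
    if "k" <= ch <= "t":  # k-t repeat a-j with dot 3 added
        return A_TO_J[ORDER[ord(ch) - ord("k")]] | {3}
    if ch in "uvxyz":  # u, v, x, y, z repeat a-e with dots 3 and 6 added
        return A_TO_J[ORDER["uvxyz".index(ch)]] | {3, 6}
    raise ValueError(f"unsupported character: {ch!r}")


def render(lines: list[str]) -> list[list[int]]:
    """Lay out each text line as a row of cells; 1 = raised dot, 0 = flat."""
    width = 2 * max(len(line) for line in lines)
    grid = [[0] * width for _ in range(3 * len(lines))]
    for row, line in enumerate(lines):
        for col, ch in enumerate(line):
            for dot in letter_dots(ch):
                r, c = DOT_POSITION[dot]
                grid[3 * row + r][2 * col + c] = 1
    return grid


if __name__ == "__main__":
    for dot_row in render(["hi", "ok"]):
        print("".join("o" if d else "." for d in dot_row))
```

Each text line costs three rows of dots and each character two columns, so the actuator count of a multi-line display grows with both line length and line count, which is why keeping the part count per dot low matters.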

Rather than relying on electromechanical systems such as motors, hydraulics or tethered pumps to power their tactile displays, Shepherd’s Organic Robotics Lab has taken a more explosive approach: microcombustion. In 2021, the lab unveiled a system in which liquid metal electrodes produced a spark that ignited a microscale volume of premixed methane and oxygen. The rapid combustion drove a haptic array of densely packed, 3-millimeter-wide actuators, popping up molded silicone membrane dots whose form was determined by a magnetic latching system.

For the new iteration, the researchers created a 10-by-10-dot array of 2-millimeter-wide soft actuators, which are eversible (able to be turned inside out). When triggered by a miniature combustion of oxygen and butane, the dots pop up in 0.24 milliseconds and remain fixed in place by virtue of their domed shape until a vacuum sucks them down. The untethered system maintains the elegance of soft robotics, Heisser said, resulting in something that is less bulky, less expensive and more resilient, “far beyond what typical braille displays are like.”
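
Because each dot latches in its domed shape after it pops up, a refresh only needs to act on dots whose state changes. The sketch below computes which dots to fire and which to pull down when moving from one frame to the next on a 10-by-10 array. It assumes, hypothetically, that every dot can be ignited and vacuumed individually; the article does not describe the addressing scheme, and the names `plan_refresh` and `apply_plan` are illustrative.

```python
# Hypothetical refresh planner for a latching (bistable) dot array.
# Assumption: each dot can be ignited (pop up) and vacuumed (pull down)
# individually; the article does not describe the addressing scheme.
from dataclasses import dataclass

Dot = tuple[int, int]  # (row, col)


@dataclass(frozen=True)
class RefreshPlan:
    ignite: frozenset[Dot]  # dots that are down now and must pop up
    vacuum: frozenset[Dot]  # dots that are up now and must be pulled down


def plan_refresh(current: set[Dot], target: set[Dot]) -> RefreshPlan:
    """Latched dots keep their state, so only changed dots need an action."""
    return RefreshPlan(ignite=frozenset(target - current),
                       vacuum=frozenset(current - target))


def apply_plan(current: set[Dot], plan: RefreshPlan) -> set[Dot]:
    """Simulate the array state after the plan has run."""
    return (set(current) - plan.vacuum) | plan.ignite


if __name__ == "__main__":
    rows = cols = 10  # the 10-by-10 prototype array
    all_dots = {(r, c) for r in range(rows) for c in range(cols)}
    diagonal = {(i, i) for i in range(rows)}
    # Going from a blank array to the diagonal fires 10 of the 100 dots.
    plan = plan_refresh(set(), diagonal)
    print(len(all_dots), len(plan.ignite), len(plan.vacuum))  # -> 100 10 0
    # Moving on to the full array fires only the 90 dots not already up.
    plan = plan_refresh(diagonal, all_dots)
    print(len(plan.ignite), len(plan.vacuum))  # -> 90 0
```

If the vacuum instead acts on the whole sheet at once, the plan collapses to a reset (vacuum everything) followed by igniting every dot in the target frame; the diff version simply avoids re-firing dots that are already up.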

“We opted to have this rubber format where we’re molding separate components together, but because we’re kind of molding it all in one go and adhering everything, you have sheets of rubber,” said Heisser, currently a postdoctoral researcher at the Massachusetts Institute of Technology. “So now, instead of having 1,000 moving parts, we just have a few parts, and these parts aren’t sliding against each other. They’re integrated in this way that makes it simpler from a manufacturing and use standpoint.”

The silicone sheets would be replaceable, extending the lifespan of the device, and could be scaled up to include a larger number of braille characters while still being relatively portable. The hermetically sealed design also keeps out dirt and troublesome liquids.

“From a maintenance standpoint, if you want to give someone the ability to read braille in a public setting, like a museum or restaurant or sports game, we think this sort of display would be much more appropriate, more reliable,” Heisser said. “So someone spills beer on the braille display, is it going to survive? We think, in our case, yes, you can just wipe it down.”

This type of technology has numerous medical and industrial applications in which the sense of touch is important, from mimicking muscle to providing high-resolution haptic feedback during surgery or from automated machines, in addition to increasing accessibility and literacy for people who are blind or visually impaired.

“As technologies become more and more digitized, as we rely more and more on computer access, human-computer interaction becomes essential,” Heisser said. “Reading braille is equivalent to literacy. The workaround has been screen-reading technologies that allow you to interact with the computer, but don’t encourage your cognitive fluency.”

More information:

Ronald H. Heisser et al, Explosion-powered eversible tactile displays, Science Robotics (2025). DOI: 10.1126/scirobotics.adu2381

Citation:

Tiny explosions and soft materials make onscreen braille more robust (2025, September 30) retrieved 30 September 2025 from https://techxplore.com/news/2025-09-tiny-explosions-soft-materials-onscreen.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.

Tech

Donald Trump Jr.’s Private DC Club Has Mysterious Ties to an Ex-Cop With a Controversial Past

When the Executive Branch soft-launched in Washington, DC, last spring, the private club’s initial buzz centered on its starry roster of backers and founding members. The president’s eldest son, Donald Trump Jr., is one of the club’s several co-owners, according to previous reporting. Founding members reportedly include Trump administration AI czar David Sacks and his All-In podcast cohost Chamath Palihapitiya, as well as crypto bigwigs Tyler and Cameron Winklevoss.

“We wanted to create something new, hipper, and Trump-aligned,” Sacks said at the time. Proximity to Trumpworld didn’t come cheap; though the club headquarters is located in a basement space behind a shopping complex, fees to join are reportedly as high as $500,000.

The initial wave of press for the MAGA hot spot identified Trump Jr. and his business associates Omeed Malik, Chris Buskirk, and Zach and Alex Witkoff as the club’s co-owners. A Mother Jones report later revealed the involvement of David Sacks’ frequent business associate Glenn Gilmore, a San Francisco Bay Area real estate developer who is given a variety of titles on official documents, including co-owner, managing member, director, and president.

But according to corporate filings reviewed by WIRED, there’s another key figure whose involvement has not been previously reported and whose connection to the club’s more famous founders remains unclear: Sean LoJacono, a former Metropolitan Police Department cop in Washington, DC, who gained local notoriety for his role in a stop-and-frisk that resulted in a lawsuit.

According to the legal complaint, in 2017, after questioning a man named M.B. Cottingham for a suspected open-container-law violation, LoJacono conducted a body search. A recording of the incident went viral on YouTube, sparking intense debate over aggressive policing tactics. “He stuck his finger in my crack,” Cottingham says in the video. “Stop fingering me, though, bro.” The next year, the American Civil Liberties Union of the District of Columbia sued LoJacono on behalf of Cottingham, alleging that LoJacono had “jammed his fingers between Mr. Cottingham’s buttocks and grabbed his genitals.” Cottingham agreed to settle his lawsuit with LoJacono and was paid an undisclosed amount by the District of Columbia (which admitted no wrongdoing) in 2018.

The MPD announced its intention to dismiss LoJacono following an internal affairs investigation, which concluded that the Cottingham search was not a fireable offense but that another search he had conducted the same day was. By early 2019, LoJacono had appealed his dismissal, arguing in well-publicized hearings that he had conducted searches according to how he had been taught by fellow officers in the field. Initially, the dismissal was upheld. However, the police union’s collective bargaining agreement enabled LoJacono to further appeal to a third-party arbitrator, who in November 2023 ruled in LoJacono’s favor.

Instead of returning to the police force, though, LoJacono has gone down a different path. A LinkedIn account featuring LoJacono’s name, likeness, and employment history lists his profession as “Director of Security and Facilities Management” at an unnamed private club in Washington, DC, from June 2025 to the present. Official incorporation paperwork for the Executive Branch Limited Liability Company filed to the Government of the District of Columbia’s corporations division in March 2025, shortly before the club launched, lists LoJacono as the “beneficial owner” of the business. The address listed on the paperwork matches the Executive Branch’s location. Donald Trump Jr. and other reported owners are not listed on the paperwork; Gilmore is listed on this document as the company’s “organizer.”

The paperwork indicates that LoJacono is considered a beneficial owner of a legal entity associated with the Executive Branch. But what does that mean, exactly?

Tech

A neural blueprint for human-like intelligence in soft robots

A new artificial intelligence control system enables soft robotic arms to learn a wide repertoire of motions and tasks once, then adjust to new scenarios on the fly, without needing retraining or sacrificing functionality.

This breakthrough brings soft robotics closer to human-like adaptability for real-world applications, such as in assistive robotics, rehabilitation robots, and wearable or medical soft robots, by making them more intelligent, versatile, and safe.

The work was led by the Mens, Manus and Machina (M3S) interdisciplinary research group within the Singapore-MIT Alliance for Research and Technology (SMART). The group’s name is a play on the Latin MIT motto “mens et manus,” or “mind and hand,” with the addition of “machina” for “machine.” Co-leading the project are researchers from the National University of Singapore (NUS), alongside collaborators from MIT and Nanyang Technological University in Singapore (NTU Singapore).

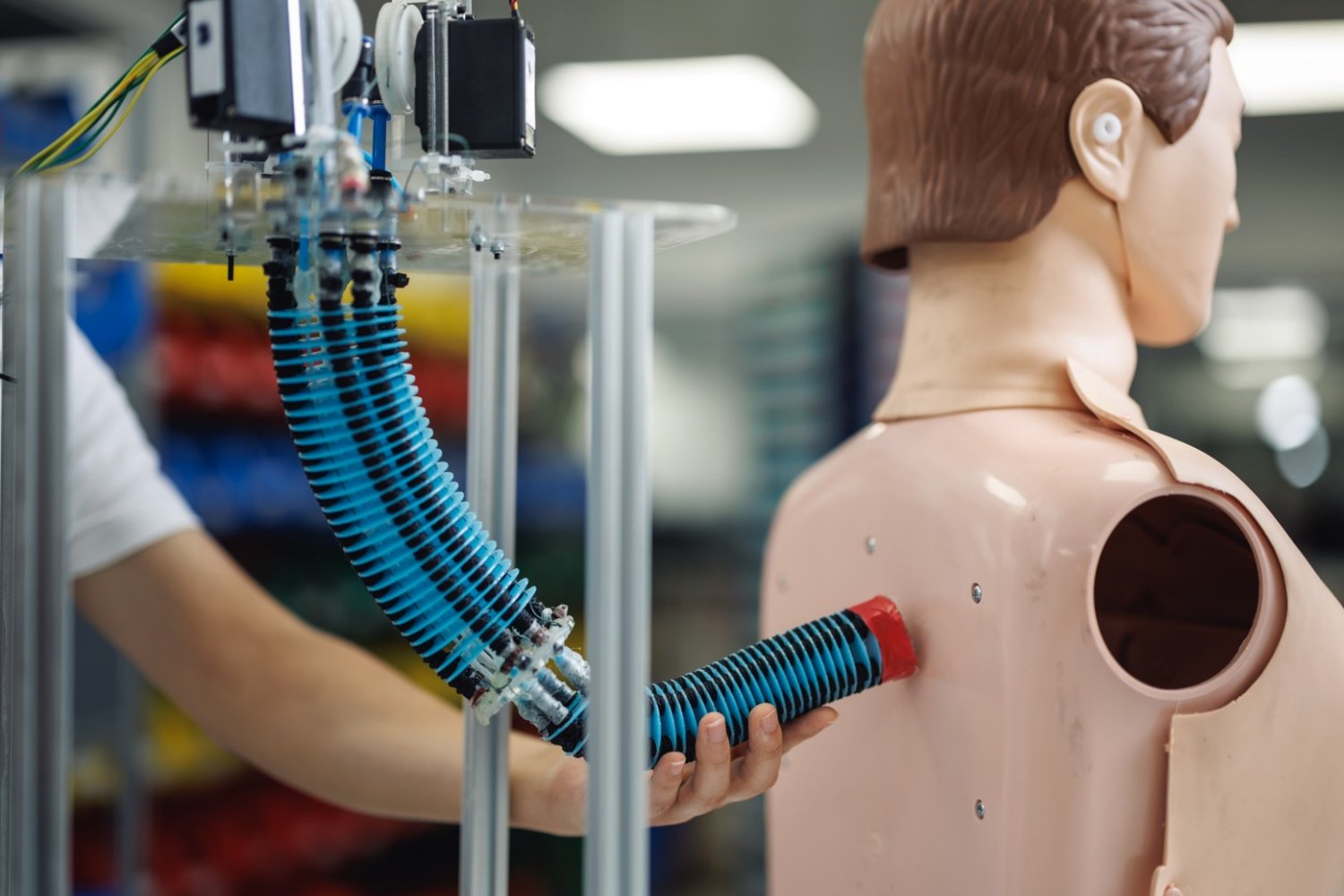

Unlike regular robots that move using rigid motors and joints, soft robots are made from flexible materials such as soft rubber and move using special actuators — components that act like artificial muscles to produce physical motion. While their flexibility makes them ideal for delicate or adaptive tasks, controlling soft robots has always been a challenge because their shape changes in unpredictable ways. Real-world environments are often complicated and full of unexpected disturbances, and even small changes in conditions — like a shift in weight, a gust of wind, or a minor hardware fault — can throw off their movements.

Despite substantial progress in soft robotics, existing approaches often can only achieve one or two of the three capabilities needed for soft robots to operate intelligently in real-world environments: using what they’ve learned from one task to perform a different task, adapting quickly when the situation changes, and guaranteeing that the robot will stay stable and safe while adapting its movements. This lack of adaptability and reliability has been a major barrier to deploying soft robots in real-world applications until now.

In an open-access study titled “A general soft robotic controller inspired by neuronal structural and plastic synapses that adapts to diverse arms, tasks, and perturbations,” published Jan. 6 in Science Advances, the researchers describe how they developed a new AI control system that allows soft robots to adapt across diverse tasks and disturbances. The study takes inspiration from the way the human brain learns and adapts, and was built on extensive research in learning-based robotic control, embodied intelligence, soft robotics, and meta-learning.

The system uses two complementary sets of “synapses” (connections that adjust how the robot moves) working in tandem. The first set, known as “structural synapses,” is trained offline on a variety of foundational movements, such as bending or extending a soft arm smoothly. These form the robot’s built-in skills and provide a strong, stable foundation. The second set, called “plastic synapses,” continually updates online as the robot operates, fine-tuning the arm’s behavior to respond to what is happening in the moment. A built-in stability measure acts as a safeguard, so even as the robot adjusts during online adaptation, its behavior remains smooth and controlled.
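
The division of labor between the two synapse types can be illustrated with a toy controller. In this minimal sketch, which is not the paper’s architecture, a frozen pair of “structural” weights stands in for skills learned offline, a pair of “plastic” weights is nudged online by a simple error-driven (delta-rule) update, and clipping the plastic weights to a bound is a crude stand-in for the stability safeguard. The first-order plant, the constant disturbance, the learning rate `eta`, and `bound` are all hypothetical values chosen for illustration.

```python
# Minimal sketch of a two-part controller: frozen "structural" weights
# (standing in for offline training) plus "plastic" weights adapted online.
# The plant, features, update rule, and clipping bound are illustrative
# assumptions, not the method from the Science Advances paper.


def features(error: float) -> list[float]:
    """Controller inputs: the tracking error and a constant bias term."""
    return [error, 1.0]


def run(steps: int, adapt: bool, eta: float = 0.2, bound: float = 1.0) -> float:
    """Track a setpoint of 1.0 on a toy first-order plant with a constant
    disturbance and return the final absolute tracking error."""
    structural = [1.0, 0.0]  # pretend these came from offline training; never updated
    plastic = [0.0, 0.0]     # start neutral; updated online only when adapt=True
    x, target, disturbance = 0.0, 1.0, -0.3
    for _ in range(steps):
        error = target - x
        phi = features(error)
        u = sum((s + p) * f for s, p, f in zip(structural, plastic, phi))
        if adapt:
            # Delta-rule update: move each plastic weight along error * input,
            # then clip it so the plastic part stays bounded.
            plastic = [max(-bound, min(bound, p + eta * error * f))
                       for p, f in zip(plastic, phi)]
        x = 0.9 * x + 0.5 * u + disturbance  # toy plant dynamics
    return abs(target - x)


if __name__ == "__main__":
    print(f"structural only: {run(200, adapt=False):.3f}")
    print(f"with plastic:    {run(200, adapt=True):.6f}")
```

With adaptation switched off, the fixed weights leave a persistent offset under the disturbance; with it on, the plastic bias weight learns to cancel the disturbance. Clipping only bounds the weights and does not by itself guarantee stability in general, which is why the researchers’ built-in stability measure matters.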

“Soft robots hold immense potential to take on tasks that conventional machines simply cannot, but true adoption requires control systems that are both highly capable and reliably safe. By combining structural learning with real-time adaptiveness, we’ve created a system that can handle the complexity of soft materials in unpredictable environments,” says MIT Professor Daniela Rus, co-lead principal investigator at M3S, director of the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL), and co-corresponding author of the paper. “It’s a step closer to a future where versatile soft robots can operate safely and intelligently alongside people — in clinics, factories, or everyday lives.”

“This new AI control system is one of the first general soft-robot controllers that can achieve all three key aspects needed for soft robots to be used in society and various industries. It can apply what it learned offline across different tasks, adapt instantly to new conditions, and remain stable throughout, all within one control framework,” says Associate Professor Zhiqiang Tang, first author and co-corresponding author of the paper, who was a postdoc at M3S and at NUS when he carried out the research and is now an associate professor at Southeast University in China (SEU China).

The system supports multiple task types, enabling soft robotic arms to execute trajectory tracking, object placement, and whole-body shape regulation within one unified approach. The method also generalizes across different soft-arm platforms, demonstrating cross-platform applicability.

The system was tested and validated on two physical platforms, a cable-driven soft arm and a shape-memory-alloy–actuated soft arm. It achieved a 44–55 percent reduction in tracking error under heavy disturbances; over 92 percent shape accuracy under payload changes, airflow disturbances, and actuator failures; and stable performance even when up to half of the actuators failed.

“This work redefines what’s possible in soft robotics. We’ve shifted the paradigm from task-specific tuning and capabilities toward a truly generalizable framework with human-like intelligence. It is a breakthrough that opens the door to scalable, intelligent soft machines capable of operating in real-world environments,” says Professor Cecilia Laschi, co-corresponding author and principal investigator at M3S, Provost’s Chair Professor in the NUS Department of Mechanical Engineering at the College of Design and Engineering, and director of the NUS Advanced Robotics Centre.

This breakthrough opens the door to more robust soft robotic systems in manufacturing, logistics, inspection, and medical robotics that work without constant reprogramming, reducing downtime and costs. In health care, assistive and rehabilitation devices can automatically tailor their movements to a patient’s changing strength or posture, while wearable or medical soft robots can respond more sensitively to individual needs, improving safety and patient outcomes.

The researchers plan to extend this technology to robotic systems or components that can operate at higher speeds and in more complex environments, with potential applications in assistive robotics, medical devices, and industrial soft manipulators, as well as integration into real-world autonomous systems.

The research conducted at SMART was supported by the National Research Foundation Singapore under its Campus for Research Excellence and Technological Enterprise program.

Tech

DHS Opens a Billion-Dollar Tab With Palantir

The Department of Homeland Security struck a $1 billion purchasing agreement with Palantir last week, further reinforcing the software company’s role in the federal agency that oversees the nation’s immigration enforcement.

According to contracting documents published last week, the blanket purchase agreement (BPA) awarded “is to provide Palantir commercial software licenses, maintenance, and implementation services department wide.” The agreement simplifies how DHS buys software from Palantir, allowing DHS agencies like Customs and Border Protection (CBP) and Immigration and Customs Enforcement (ICE) to essentially skip the competitive bidding process for new purchases of up to $1 billion in products and services from the company.

Palantir did not immediately respond to a request for comment.

Palantir announced the agreement internally on Friday. It comes as the company is struggling to address growing tensions among staff over its relationship with DHS and ICE. After Minneapolis nurse Alex Pretti was shot and killed in January, Palantir staffers flooded company Slack channels demanding information on how the tech they build empowers US immigration enforcement. Since then, the company has updated its internal wiki, offering few unreported details about its work with ICE, and Palantir CEO Alex Karp recorded a video for employees where he attempted to justify the company’s immigration work, as WIRED reported last week. Throughout a nearly hourlong conversation with Courtney Bowman, Palantir’s global director of privacy and civil liberties engineering, Karp failed to address direct questions about how the company’s tech powers ICE. Instead, he said workers could sign nondisclosure agreements for more detailed information.

Akash Jain, Palantir’s chief technology officer and president of Palantir US Government Partners, which works with US government agencies, acknowledged these concerns in the email announcing the company’s new agreement with DHS. “I recognize that this comes at a time of increased concern, both externally and internally, around our existing work with ICE,” Jain wrote. “While we don’t normally send out updates on new contract vehicles, in this moment it felt especially important to provide context to help inform your understanding of what this means—and what it doesn’t. There will be opportunities we run toward, and others we decline—that discipline is part of what has earned us DHS’s trust.”

In the Friday email, Jain suggests that the five-year agreement could allow the company to expand its reach across DHS into agencies like the US Secret Service (USSS), Federal Emergency Management Agency (FEMA), Transportation Security Administration (TSA), and the Cybersecurity and Infrastructure Security Agency (CISA).

Jain also argued that Palantir’s software could strengthen protections for US citizens. “These protections help enable accountability through strict controls and auditing capabilities, and support adherence to constitutional protections, especially the Fourth Amendment,” Jain wrote. (Palantir’s critics have argued that the company’s tools create a massive surveillance dragnet, which could ultimately harm civil liberties.)

Over the last year, Palantir’s work with ICE has grown tremendously. Last April, WIRED reported that ICE paid Palantir $30 million to build “ImmigrationOS,” which would provide “near real-time visibility” on immigrants self-deporting from the US. Since then, it’s been reported that the company has also developed a new tool called Enhanced Leads Identification & Targeting for Enforcement (ELITE), which creates maps of potential deportation targets, pulling data from DHS and the Department of Health and Human Services (HHS).

Closing his Friday email to staff, Jain suggested that staffers curious about the new DHS agreement come work on it themselves. “As Palantirians, the best way to understand the work is to engage on the work directly. If you are interested in helping shape and deliver the next chapter of Palantir’s work across DHS, please reach out,” Jain wrote to employees, who are sometimes referred to internally as fictional creatures from The Lord of the Rings. “There will be a massive need for committed hobbits to turn this momentum into mission outcomes.”
