Tech

Super-sensitive sensor detects tiny hydrogen leaks in seconds for safer energy use

Researchers at the University of Missouri are working to make hydrogen energy as safe as possible. As more countries and industries invest heavily in cleaner, renewable energy, hydrogen-powered factories and vehicles are gaining in popularity. But hydrogen fuel comes with risks—leaks can lead to explosions, accidents and environmental harm. Most hydrogen-detecting sensors on the market are expensive, can’t operate continuously and aren’t sensitive enough to detect tiny leaks quickly.

That’s why researcher Xiangqun Zeng and her team in the College of Engineering set out to design the ideal hydrogen sensor, focusing on six traits: sensitivity, selectivity, speed, stability, size and cost.

In a recent study published in the journal ACS Sensors, they unveiled a prototype of an affordable, longer-lasting, super-sensitive sensor that can accurately detect even the tiniest hydrogen leaks within seconds. The best part? It’s incredibly small, about the size of a fingernail.

Zeng created her sensor by mixing tiny crystals made of platinum and nickel with ionic liquids. The researchers report that the new sensor outperforms sensors already on the market in both performance and durability.

“Hydrogen can be tricky to detect since you can’t see it, smell it or taste it,” said Zeng, a MizzouForward hire who creates sensors to protect the health of people and the environment. “In general, our goal is to create sensors that are smaller, more affordable, highly sensitive and work continuously in real time.”

While her new hydrogen sensor is still being tested in the lab, Zeng hopes to commercialize it by 2027. Mizzou plans to build on this research: renewable energy will be a cornerstone of the new Energy Innovation Center, which is expected to open on Mizzou’s campus in 2028.

Creating improved sensors with broad applications in health care, energy and the environment has been Zeng’s mission throughout her career.

“My expertise is in developing next-generation measurement technology, and for more than 30 years, I have prioritized projects that can make the biggest impacts on society,” said Zeng, who also has an appointment in the College of Arts and Science. “If we are going to develop sensors that can detect explosive gases, it needs to be done in real time so we can help people stay as safe as possible.”

More information:

Xiaojun Liu et al., PtNi Nanocrystal–Ionic Liquid Interfaces: An Innovative Platform for High-Performance and Reliable H2 Detection, ACS Sensors (2025). DOI: 10.1021/acssensors.4c03564

Citation:

Super-sensitive sensor detects tiny hydrogen leaks in seconds for safer energy use (2025, September 3) retrieved 3 September 2025 from https://techxplore.com/news/2025-09-super-sensitive-sensor-tiny-hydrogen.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.


Security News This Week: Oh Crap, Kohler’s Toilet Cameras Aren’t Really End-to-End Encrypted

An AI image-generation startup left its database unsecured, exposing more than a million images and videos its users had created, the “overwhelming majority” of which depicted nudity, including nude images of children. A US inspector general report officially determined that Defense Secretary Pete Hegseth put military personnel at risk through his negligence in the SignalGate scandal but recommended only a compliance review and consideration of new regulations. Cloudflare CEO Matthew Prince told WIRED onstage at our Big Interview event in San Francisco this week that his company has blocked more than 400 billion AI bot requests for its customers since July 1.

A new New York law will require retailers to disclose if personal data collected about you results in algorithmic changes to their prices. And we profiled a new cellular carrier aiming to offer the closest thing possible to truly anonymous phone service—and its founder, Nicholas Merrill, who famously spent a decade-plus in court fighting an FBI surveillance order targeted at one of the customers of his internet service provider.

Putting a camera-enabled digital device in your toilet that uploads an analysis of your actual bodily waste to a corporation represents such a laughably bad idea that, 11 years ago, it was the subject of a parody infomercial. In 2025, it’s an actual product—and one whose privacy problems, despite the marketing copy of the company behind it, have turned out to be exactly as bad as any normal human might have imagined.

Security researcher Simon Fondrie-Teitler this week published a blog post revealing that the Dekota, a camera-packing smart device sold by Kohler, does not in fact use “end-to-end encryption” as it claimed. That term typically means that data is encrypted so that only user devices on either “end” of a conversation can decrypt the information therein, not the server that sits in between them and hosts that encrypted communication. But Fondrie-Teitler found that the Dekota only encrypts its data from the device to the server. In other words, according to the company’s definition of end-to-end encryption, one end is essentially—forgive us—your rear end, and the other is Kohler’s backend, where the images of its output are “decrypted and processed to provide our service,” as the company wrote in a statement to Fondrie-Teitler.

In response to his post pointing out that this is generally not what end-to-end encryption means, Kohler has removed all instances of that term from its descriptions of the Dekota.

The cyberespionage campaign known as Salt Typhoon represents one of the biggest counterintelligence debacles in modern US history. State-sponsored Chinese hackers infiltrated virtually every US telecom and gained access to the real-time calls and texts of Americans—including then presidential and vice-presidential candidates Donald Trump and J.D. Vance. But according to the Financial Times, the US government has declined to impose sanctions on China in response to that hacking spree amid the White House’s effort to reach a trade deal with China’s government. That decision has led to criticism that the administration is backing off key national security initiatives in an effort to accommodate Trump’s economic goals. But it’s worth noting that imposing sanctions in response to espionage has always been a controversial move, given that the United States no doubt carries out plenty of espionage-oriented hacking of its own across the world.

As 2025 draws to a close, the nation’s leading cyberdefense agency, the Cybersecurity and Infrastructure Security Agency (CISA), still has no director. And the nominee for that position, once considered a shoo-in, now faces congressional hurdles that may have permanently tanked his chances of running the agency. Sean Plankey’s name was left out of a Senate vote Thursday on a slate of nominees, suggesting his nomination may be “over,” according to CyberScoop. Plankey had faced opposition from senators on both sides of the aisle with a broad mix of demands: Florida’s Republican senator Rick Scott placed a hold on his nomination after the Department of Homeland Security (DHS) terminated a Coast Guard contract with a company in his state, while North Carolina’s GOP senators opposed any new DHS nominees until disaster relief funding was allocated to their state. Democratic senator Ron Wyden, meanwhile, demanded that CISA publish a long-awaited report on telecom security before Plankey’s appointment; that report has yet to be released.

The Chinese hacking campaign centered on the malware known as “Brickstorm” first came to light in September, when Google warned that the stealthy spy tool had been infecting dozens of victim organizations since 2022. This week, CISA, the National Security Agency, and the Canadian Centre for Cyber Security added to Google’s warnings with a joint advisory on how to spot the malware. They also cautioned that the hackers behind it appear to be positioned not only for espionage targeting US infrastructure but also for potentially disruptive cyberattacks. Perhaps most disturbing is one data point from Google: the average time before a Brickstorm breach is discovered in a victim’s network is 393 days.


Top Vimeo Promo Codes and Discounts This Month in 2025

Remember Vimeo? You probably don’t use it to browse videos the way you might with some other services. But if you landed on this page, there’s a good chance you use it to host your professional portfolio. Or assets for your business. Or your short films. Vimeo has tools other video hosting services simply don’t have, like AI editing tools, on-demand content selling, customizable embeds, and collaborative editing features. And best of all: There are no ads. WIRED has rotating Vimeo promo codes to help you save.

Get 10% Off Annual Plans With This Vimeo Promo Code

No matter what you need for your business or career, when it comes to video, Vimeo’s got multiple plans to suit. And luckily, right now, you can save with a Vimeo promo code—even on the annual plans, which already include 40% in savings. Just use Vimeo coupon code GETVIMEO10 to save 10% on your membership plan.

The Easiest Way to Save 40% on Your Vimeo Plan

Vimeo has a few different membership plans that you can save on. Whichever you choose, the easiest way to save a lot is with an annual membership, which saves roughly 40% compared with paying monthly. And yes, you can even stack promo codes with the annual billing options.

More on Vimeo Pricing and Membership Plans

So what tier do you need? The Starter plan starts at $12 per month (billed annually) or $20 per month (billed monthly). It comes with 100 gigabytes of storage, plus boosted privacy controls, custom video players, custom URLs, and automatic closed captioning.

Boost your plan to Standard for $25 per month (billed annually) or $41 per month (billed monthly) to upgrade to 2 terabytes of storage, 5 “seats” (which are collaborative team member spots), a brand kit, a teleprompter, text-based video editing, AI script generation, and engagement and social analytics.

Finally, there’s the Advanced plan, which costs $75 per month (billed annually) or $125 per month (billed monthly). You’ll get 10 “seats”, 7 terabytes of storage, AI-generated chapters and text summaries, live chat and poll options, plus streaming and live broadcast capabilities.
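As a rough check on the “40%” figure, here is a small Python sketch that uses only the prices listed above. It assumes that the GETVIMEO10 code takes 10% off the annual-billing price and that there are no other fees or taxes; the names `PLANS`, `annual_savings`, and `price_with_promo` are illustrative, not anything Vimeo publishes.

```python
# Monthly prices in US dollars, as listed above: (billed annually, billed monthly).
PLANS = {
    "Starter": (12, 20),
    "Standard": (25, 41),
    "Advanced": (75, 125),
}

# Assumption: GETVIMEO10 applies a flat 10% to the annual-billing price.
PROMO_DISCOUNT = 0.10


def annual_savings(annual_monthly: float, monthly: float) -> float:
    """Fraction saved by paying annually instead of month to month."""
    return 1 - annual_monthly / monthly


def price_with_promo(annual_monthly: float, discount: float = PROMO_DISCOUNT) -> float:
    """Effective monthly price after stacking the promo code on annual billing."""
    return round(annual_monthly * (1 - discount), 2)


for name, (annual, monthly) in PLANS.items():
    print(
        f"{name}: {annual_savings(annual, monthly):.0%} off monthly billing, "
        f"${price_with_promo(annual):.2f}/month with the code"
    )
```

With these prices, Starter and Advanced come out at exactly 40% off, while Standard ($25 vs. $41) works out to about 39%, so the 40% figure is rounded for that tier. Stacking the code would bring the annual-billing price to $10.80, $22.50, and $67.50 per month, respectively, if the assumption above holds.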

Use a Vimeo Coupon Code to Get Savings on Vimeo on Demand

Vimeo on Demand lets you stream and download movies online. Through it, you can rent, buy, or subscribe to original films, documentaries, and series directly from independent video creators, including The Talent and Wild Magic.

Vimeo Enterprise Solutions 2025

You may not have heard of Vimeo Enterprise, but it is built for content creators, videographers, and teams that work with digital media in the workplace. From meeting recordings and AI-driven video creation to compliance and distribution, Vimeo Enterprise helps centralize and manage video workflows.

Does Vimeo Have a Free Trial?

While Vimeo doesn’t offer a free trial of its paid plans, it does have a free plan with some basic features. Paid plans can be canceled at any time, and you’ll get a full refund if you cancel within 14 days for an annual subscription or within 3 days for a monthly subscription.


WIRED Roundup: DOGE Isn’t Dead, Facebook Dating Is Real, and Amazon’s AI Ambitions

Leah Feiger: So it’s a really good question actually, and it’s one that I’ve thought about for quite some time. I think if it’s not annoying, I want to read this quote from Scott Kupor, the director of OPM and the former managing partner at Andreessen Horowitz, to be clear, just to remind everyone where people are coming from in this current administration. He posted this on X late last month, and this was part of Reuters’ reporting. So he posts, “The truth is, DOGE may not have centralized leadership under USDS anymore, but the principles of DOGE remain alive and well, deregulation, eliminating fraud, waste and abuse, reshaping the federal workforce, et cetera, et cetera, et cetera.” Which is the exact same, the thing that they’ve been saying this entire time, but it’s all smoke and mirrors, right? It’s like, oh no, no, well, DOGE doesn’t exactly exist anymore. There’s no Elon Musk character leading it, which Elon Musk himself said on the podcast with Joe Rogan last month as well. He’s like, “Yeah, once I left, they weren’t able to pick on anyone, but don’t worry, DOGE is still there.” So it feels wild to watch people fall for this and go like, “DOGE is gone now.” And I’m like, they’re literally telling us that it’s not.

Zoë Schiffer: I think one thing that does feel honestly true is that it is harder and harder to differentiate where DOGE stops and the Trump administration begins because they have infiltrated so many different parts of government and the DOGE ethos, what you’re talking about, deregulation, cost cutting, zero-based budgeting, those have really become kind of table stakes for the admin, right?

Leah Feiger: I think that’s such a good point. And honestly, by the end of Elon Musk’s reign, something that kept coming up wasn’t necessarily that the Trump administration didn’t agree with DOGE’s ethos at all. It was that they didn’t really agree with how Musk was going about it. They didn’t like that he was stepping on Treasury Secretary Scott Bessent and having fights outside of the Oval Office. That was bad optics and that also wasn’t helping the Trump administration even look like they were on top of it.

-

Sports5 days ago

Sports5 days agoIndia Triumphs Over South Africa in First ODI Thanks to Kohli’s Heroics – SUCH TV

-

Tech6 days ago

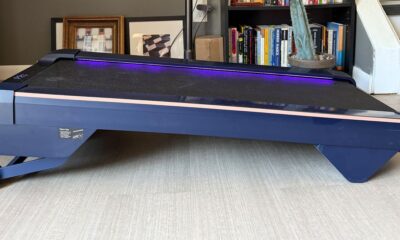

Tech6 days agoGet Your Steps In From Your Home Office With This Walking Pad—On Sale This Week

-

Entertainment5 days ago

Entertainment5 days agoSadie Sink talks about the future of Max in ‘Stranger Things’

-

Fashion5 days ago

Fashion5 days agoResults are in: US Black Friday store visits down, e-visits up, apparel shines

-

Politics5 days ago

Politics5 days agoElon Musk reveals partner’s half-Indian roots, son’s middle name ‘Sekhar’

-

Tech5 days ago

Tech5 days agoPrague’s City Center Sparkles, Buzzes, and Burns at the Signal Festival

-

Sports5 days ago

Sports5 days agoBroncos secure thrilling OT victory over Commanders behind clutch performances

-

Entertainment5 days ago

Entertainment5 days agoNatalia Dyer explains Nancy Wheeler’s key blunder in Stranger Things 5