Tech

Jaguar Land Rover extends production shutdown for another week | Computer Weekly

Jaguar Land Rover (JLR) has extended a pause in vehicle production for at least another week following a cyber attack by the Scattered Lapsus$ Hunters hacking collective comprising members of the Scattered Spider, ShinyHunters, and Lapsus$ gangs.

The incident, which began at the end of August before becoming public on 2 September, forced the suspension of work at JLR’s Merseyside plant and has also affected its retail services.

It has since emerged that data of an undisclosed nature has been compromised by the cyber gang, which has been boasting of its exploits on Telegram but has also now claimed to have retired. JLR has notified the relevant regulators, and its forensic investigation continues.

A JLR spokesperson said: “Today we have informed colleagues, suppliers and partners that we have extended the current pause in our production until Wednesday 24 September 2025.

“We have taken this decision as our forensic investigation of the cyber incident continues, and as we consider the different stages of the controlled restart of our global operations, which will take time.

“We are very sorry for the continued disruption this incident is causing and we will continue to update as the investigation progresses,” they said.

James McQuiggan, CISO advisor at KnowBe4, said the continuing disruption at JLR demonstrated how entwined cyber security and wider business resilience have now become.

“When core systems are taken offline, the impact cascades through employees, suppliers and customers, showing that business continuity and cyber defence should be indivisible,” he said. “Beyond immediate disruption, data theft during such incidents increases the long-term risks, from reputational damage to regulatory consequences.”

“To mitigate these risks, organisations should regularly test and update their business continuity and incident response plans, strengthen supply chain risk assessments, and adopt zero-trust principles to limit attacker movement.”

McQuiggan added: “Just as important is addressing human risk, as social engineering remains the leading entry point for attackers. Ongoing security awareness, phishing simulations, and behavior analysis of users in a human risk management program help users recognise and resist malicious tactics. By combining strong technical controls with a culture of cyber resilience, organisations can reduce their exposure and recover with greater confidence.”

Golden parachutes

Meanwhile, the supposed Scattered Lapsus$ Hunters shutdown – announced via BreachForums and Telegram across a number of frequently crude postings – saw ‘farewell’ messages that included a number of apologies to the families of some gang members scooped up in law enforcement actions, to JLR, and to Google and CrowdStrike.

In the messages, reviewed by CyberNews, one of the supposed gang members even addressed the CIA, saying they were “so very sorry” they leaked classified documents and “had no idea what they were doing”.

“Please forgive me and f*** Iran. I will be going to the rehab center for 60 days,” they added.

The gang’s alleged climbdown has drawn a sceptical eye from cyber community members who, based on years of experience, know that cyber criminals rarely if ever pack up shop and go straight.

Cian Heasley, principal consultant at Acumen Cyber, said that the gang’s talk of activating “contingency plans” and a call for fans not to worry about them as they would be enjoying their “golden parachutes with the millions the group accumulated [sic]”, seemed far-fetched.

“This is a transparent move that suggests its members are buying some breathing time, panicking about the threat of prison, and arguing behind the scenes about how much trouble they are actually in and the need to be cautious,” said Heasley.

“Given the volatile and explosive nature of the group, it’s hard to imagine they carried out this level of due diligence.

“The lure of the money and excitement that comes with cyber crime will inevitably draw them back in eventually,” added Heasley.

Indeed, even amid its farewell messages, Scattered Lapsus$ Hunters hinted at future developments and taunted the likes of the FBI and Mandiant, and various victims including luxury goods house Kering and Air France.

It also named British Airlines, an organisation that does not exist but which may be a reference to British Airways (BA).

BA is not known to have been attacked at the time of writing, suggesting that more victims of the recent hacking spree may yet come to light.

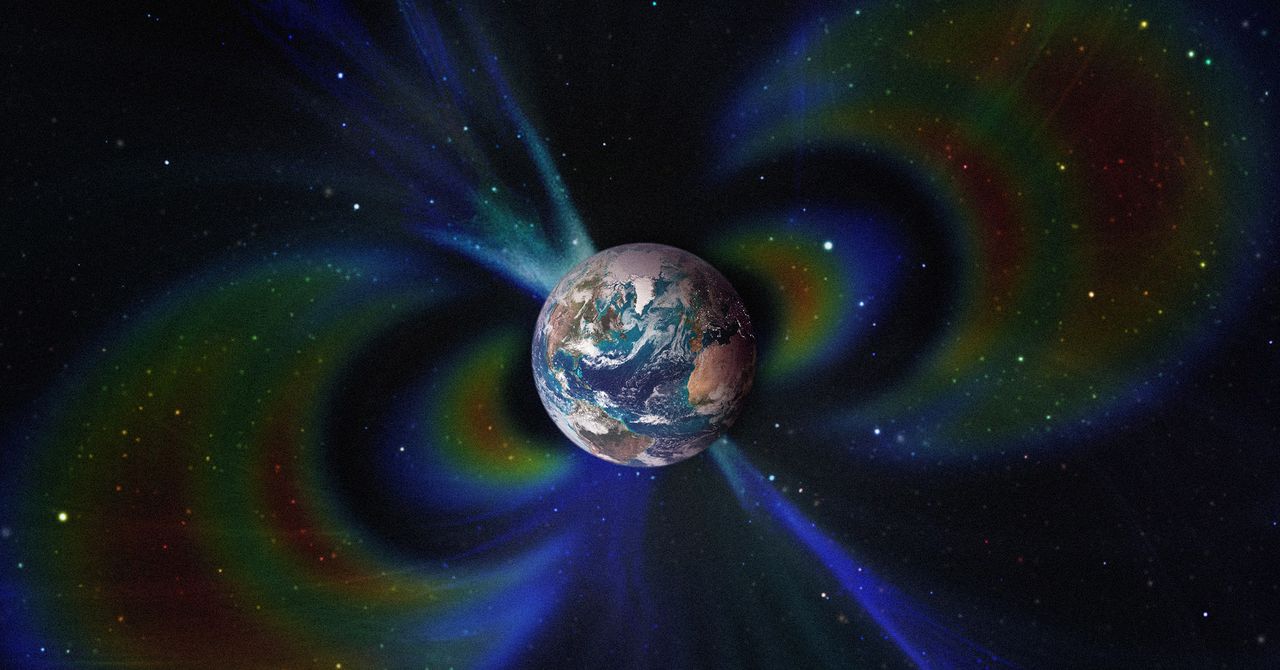

Two Titanic Structures Hidden Deep Within the Earth Have Altered the Magnetic Field for Millions of Years

A team of geologists has found, for the first time, evidence that two ancient, continent-sized, ultrahot structures hidden deep within the Earth have shaped the planet’s magnetic field for the past 265 million years.

These two masses, known as large low-shear-velocity provinces (LLSVPs), are part of the catalog of the planet’s most enormous and enigmatic objects. Current estimates calculate that each one is comparable in size to the African continent, although they remain buried at a depth of 2,900 kilometers.

LLSVPs are irregular regions of the Earth’s mantle, not defined blocks of rock or metal as one might imagine. Within them, the mantle material is hotter, denser, and chemically different from the surrounding material. They are also notable because a “ring” of cooler material surrounds them, where seismic waves travel faster.

Geologists had suspected these anomalies existed since the late 1970s and were able to confirm them two decades later. After another 10 years of research, they now point to them directly as structures capable of modifying Earth’s magnetic field.

LLSVPs Alter the Behavior of the Core

According to a study published this week in Nature Geoscience and led by researchers at the University of Liverpool, temperature differences between LLSVPs and the surrounding mantle material alter the way liquid iron flows in the core. This movement of iron is responsible for generating Earth’s magnetic field.

Taken together, the cold and ultrahot zones of the mantle accelerate or slow the flow of liquid iron depending on the region, creating an asymmetry. This inequality contributes to the magnetic field taking on the irregular shape we observe today.

The team analyzed the available mantle evidence and ran simulations on supercomputers. They compared how the magnetic field should look if the mantle were uniform versus how it behaves when the mantle includes these heterogeneous structures. They then contrasted both scenarios with real magnetic field data. Only the model that incorporated the LLSVPs reproduced the same irregularities, tilts, and patterns that are currently observed.

The geodynamo simulations also revealed that some parts of the magnetic field have remained relatively stable for hundreds of millions of years, while others have changed remarkably.

“These findings also have important implications for questions surrounding ancient continental configurations—such as the formation and breakup of Pangaea—and may help resolve long-standing uncertainties in ancient climate, paleobiology, and the formation of natural resources,” said Andy Biggin, first author of the study and professor of Geomagnetism at the University of Liverpool, in a press release.

“These areas have assumed that Earth’s magnetic field, when averaged over long periods, behaved as a perfect bar magnet aligned with the planet’s rotational axis. Our findings are that this may not quite be true,” he added.

This story originally appeared in WIRED en Español and has been translated from Spanish.

Loyalty Is Dead in Silicon Valley

Since the middle of last year, there have been at least three major AI “acqui-hires” in Silicon Valley. Meta invested more than $14 billion in Scale AI and brought on its CEO, Alexandr Wang; Google spent a cool $2.4 billion to license Windsurf’s technology and fold its cofounders and research teams into DeepMind; and Nvidia wagered $20 billion on Groq’s inference technology and hired its CEO and other staffers.

The frontier AI labs, meanwhile, have been playing a high-stakes and seemingly never-ending game of talent musical chairs. The latest reshuffle began three weeks ago, when OpenAI announced it was rehiring several researchers who had departed less than two years earlier to join Mira Murati’s startup, Thinking Machines. At the same time, Anthropic, which was itself founded by former OpenAI staffers, has been poaching talent from the ChatGPT maker. OpenAI, in turn, just hired a former Anthropic safety researcher to be its “head of preparedness.”

The hiring churn happening in Silicon Valley represents the “great unbundling” of the tech startup, as Dave Munichiello, an investor at GV, put it. In earlier eras, tech founders and their first employees often stayed onboard until either the lights went out or there was a major liquidity event. But in today’s market, where generative AI startups are growing rapidly, equipped with plenty of capital, and prized especially for the strength of their research talent, “you invest in a startup knowing it could be broken up,” Munichiello told me.

Early founders and researchers at the buzziest AI startups are bouncing around to different companies for a range of reasons. A big incentive for many, of course, is money. Last year Meta was reportedly offering top AI researchers compensation packages in the tens or hundreds of millions of dollars, offering them not just access to cutting-edge computing resources but also … generational wealth.

But it’s not all about getting rich. Broader cultural shifts that rocked the tech industry in recent years have made some workers worried about committing to one company or institution for too long, says Sayash Kapoor, a computer science researcher at Princeton University and a senior fellow at Mozilla. Employers used to safely assume that workers would stay at least until the four-year mark when their stock options were typically scheduled to vest. In the high-minded era of the 2000s and 2010s, plenty of early cofounders and employees also sincerely believed in the stated missions of their companies and wanted to be there to help achieve them.

Now, Kapoor says, “people understand the limitations of the institutions they’re working in, and founders are more pragmatic.” The founders of Windsurf, for example, may have calculated their impact could be larger at a place like Google that has lots of resources, Kapoor says. He adds that a similar shift is happening within academia. Over the past five years, Kapoor says, he’s seen more PhD researchers leave their computer-science doctoral programs to take jobs in industry. There are higher opportunity costs associated with staying in one place at a time when AI innovation is rapidly accelerating, he says.

Investors, wary of becoming collateral damage in the AI talent wars, are taking steps to protect themselves. Max Gazor, the founder of Striker Venture Partners, says his team is vetting founding teams “for chemistry and cohesion more than ever.” Gazor says it’s also increasingly common for deals to include “protective provisions that require board consent for material IP licensing or similar scenarios.”

Gazor notes that some of the biggest acqui-hire deals that have happened recently involved startups founded long before the current generative AI boom. Scale AI, for example, was founded in 2016, a time when the kind of deal Wang negotiated with Meta would have been unfathomable to many. Now, however, these potential outcomes might be considered in early term sheets and “constructively managed,” Gazor explains.

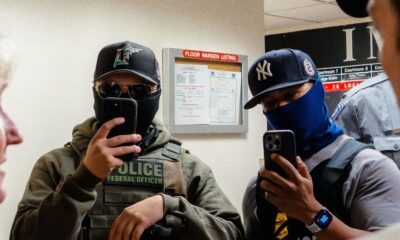

ICE and CBP’s Face-Recognition App Can’t Actually Verify Who People Are

The face-recognition app Mobile Fortify, now used by United States immigration agents in towns and cities across the US, is not designed to reliably identify people in the streets and was deployed without the scrutiny that has historically governed the rollout of technologies that impact people’s privacy, according to records reviewed by WIRED.

The Department of Homeland Security launched Mobile Fortify in the spring of 2025 to “determine or verify” the identities of individuals stopped or detained by DHS officers during federal operations, records show. DHS explicitly linked the rollout to an executive order, signed by President Donald Trump on his first day in office, which called for a “total and efficient” crackdown on undocumented immigrants through the use of expedited removals, expanded detention, and funding pressure on states, among other tactics.

Despite DHS repeatedly framing Mobile Fortify as a tool for identifying people through facial recognition, however, the app does not actually “verify” the identities of people stopped by federal immigration agents—a well-known limitation of the technology and a function of how Mobile Fortify is designed and used.

“Every manufacturer of this technology, every police department with a policy makes very clear that face recognition technology is not capable of providing a positive identification, that it makes mistakes, and that it’s only for generating leads,” says Nathan Wessler, deputy director of the American Civil Liberties Union’s Speech, Privacy, and Technology Project.

Records reviewed by WIRED also show that DHS’s hasty approval of Fortify last May was enabled by dismantling centralized privacy reviews and quietly removing department-wide limits on facial recognition—changes overseen by a former Heritage Foundation lawyer and Project 2025 contributor, who now serves in a senior DHS privacy role.

DHS—which has declined to detail the methods and tools that agents are using, despite repeated calls from oversight officials and nonprofit privacy watchdogs—has used Mobile Fortify to scan the faces not only of “targeted individuals,” but also people later confirmed to be US citizens and others who were observing or protesting enforcement activity.

Reporting has documented federal agents telling citizens they were being recorded with facial recognition and that their faces would be added to a database without consent. Other accounts describe agents treating accent, perceived ethnicity, or skin color as a basis to escalate encounters—then using face scanning as the next step once a stop is underway. Together, the cases illustrate a broader shift in DHS enforcement toward low-level street encounters followed by biometric capture like face scans, with limited transparency around the tool’s operation and use.

Fortify’s technology mobilizes facial capture hundreds of miles from the US border, allowing DHS to generate nonconsensual face prints of people who, “it is conceivable,” DHS’s Privacy Office says, are “US citizens or lawful permanent residents.” As with the circumstances surrounding its deployment to agents with Customs and Border Protection and Immigration and Customs Enforcement, Fortify’s functionality is visible mainly today through court filings and sworn agent testimony.

In a federal lawsuit this month, attorneys for the State of Illinois and the City of Chicago said the app had been used “in the field over 100,000 times” since launch.

In Oregon testimony last year, an agent said two photos of a woman in custody taken with his face-recognition app produced different identities. The woman was handcuffed and looking downward, the agent said, prompting him to physically reposition her to obtain the first image. The movement, he testified, caused her to yelp in pain. The app returned a name and photo of a woman named Maria, a match that the agent rated “a maybe.”

Agents called out the name, “Maria, Maria,” to gauge her reaction. When she failed to respond, they took another photo. The agent testified the second result was “possible,” but added, “I don’t know.” Asked what supported probable cause, the agent cited the woman speaking Spanish, her presence with others who appeared to be noncitizens, and a “possible match” via facial recognition. The agent testified that the app did not indicate how confident the system was in a match. “It’s just an image, your honor. You have to look at the eyes and the nose and the mouth and the lips.”
