Tech

Quantum computer chips clear major manufacturing hurdle

UNSW Sydney nano-tech startup Diraq has shown its quantum chips aren’t just lab-perfect prototypes—they also hold up in real-world production, maintaining the 99% accuracy needed to make quantum computers viable.

Diraq, a pioneer of silicon-based quantum computing, achieved this feat by teaming up with the European nanoelectronics institute Interuniversity Microelectronics Center (imec). Together they demonstrated that the chips work just as reliably coming off a semiconductor fabrication line as they do in the experimental conditions of a research lab at UNSW.

UNSW Engineering Professor Andrew Dzurak, who is the founder and CEO of Diraq, said up until now it hadn’t been proven that the processors’ lab-based fidelity—meaning accuracy in the quantum computing world—could be translated to a manufacturing setting.

“Now it’s clear that Diraq’s chips are fully compatible with manufacturing processes that have been around for decades.”

In a paper published in Nature, the teams report that Diraq-designed, imec-fabricated devices achieved over 99% fidelity in operations involving two quantum bits—or “qubits.”

The result is a crucial step toward Diraq’s quantum processors achieving utility scale, the point at which a quantum computer’s commercial value exceeds its operational cost. This is the key metric set out in the Quantum Benchmarking Initiative, a program run by the United States’ Defense Advanced Research Projects Agency (DARPA) to gauge whether Diraq and 17 other companies can reach this goal.

Utility-scale quantum computers are expected to be able to solve problems that are out of reach of the most advanced high-performance computers available today. But breaching the utility-scale threshold requires storing and manipulating quantum information in millions of qubits to overcome the errors associated with the fragile quantum state.
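To get a rough feel for why 99% fidelity still leaves errors to overcome, here is a minimal Python sketch. It assumes a simplified model that does not come from the Nature paper: every gate succeeds independently with the same probability, and nothing corrects errors along the way. The function name `error_free_probability` is made up for illustration.

```python
def error_free_probability(gate_fidelity: float, num_gates: int) -> float:
    """Chance that a run of gates finishes with no error at all.

    Assumption (illustrative only): each gate succeeds independently
    with probability `gate_fidelity`, and no error correction is applied.
    """
    if not 0.0 <= gate_fidelity <= 1.0:
        raise ValueError("gate_fidelity must be between 0 and 1")
    if num_gates < 0:
        raise ValueError("num_gates must be non-negative")
    return gate_fidelity ** num_gates


if __name__ == "__main__":
    # Compare short and long gate sequences at 99% fidelity per gate.
    for num_gates in (10, 100, 1000):
        chance = error_free_probability(0.99, num_gates)
        print(f"{num_gates:>5} gates: {chance:.4f} chance of an error-free run")
```

Under this toy model the odds of a clean run fall quickly as the gate count grows, which is one way to see why long computations need many extra qubits devoted to overcoming errors.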

“Achieving utility scale in quantum computing hinges on finding a commercially viable way to produce high-fidelity quantum bits at scale,” said Prof. Dzurak.

“Diraq’s collaboration with imec makes it clear that silicon-based quantum computers can be built by leveraging the mature semiconductor industry, which opens a cost-effective pathway to chips containing millions of qubits while still maximizing fidelity.”

Silicon is emerging as the front-runner among materials being explored for quantum computers—it can pack millions of qubits onto a single chip and works seamlessly with today’s trillion-dollar microchip industry, making use of the methods that put billions of transistors onto modern computer chips.

Diraq has previously shown that qubits fabricated in an academic laboratory can achieve high fidelity when performing two-qubit logic gates, the basic building block of future quantum computers. However, it was unclear whether this fidelity could be reproduced in qubits manufactured in a semiconductor foundry environment.

“Our new findings demonstrate that Diraq’s silicon qubits can be fabricated using processes that are widely used in semiconductor foundries, meeting the threshold for fault tolerance in a way that is cost-effective and industry-compatible,” Prof. Dzurak said.

Diraq and imec previously showed that qubits manufactured using CMOS processes—the same technology used to build everyday computer chips—could perform single-qubit operations with 99.9% accuracy. But more complex operations using two qubits that are critical to achieving utility scale had not yet been demonstrated.

“This latest achievement clears the way for the development of a fully fault-tolerant, functional quantum computer that is more cost effective than any other qubit platform,” Prof. Dzurak said.

More information:

Paul Steinacker, Industry-compatible silicon spin-qubit unit cells exceeding 99% fidelity, Nature (2025). DOI: 10.1038/s41586-025-09531-9. www.nature.com/articles/s41586-025-09531-9

Citation:

Quantum computer chips clear major manufacturing hurdle (2025, September 24), retrieved 24 September 2025 from https://techxplore.com/news/2025-09-quantum-chips-major-hurdle.html

Best HelloFresh Coupons and Promo Codes for December 2025

Leveraging meal kit coupons is the extreme couponing of our times—a capitalism hack-a-thon right up there with trial yoga classes and attempting to cancel your Adobe subscription. Meal kits like HelloFresh have always been a better deal than they get credit for, even at full price: It’s actually hard to recreate meal kit meals for less than you can get the recipes delivered to your home. But it’s especially worth it when you can find a HelloFresh coupon, promo code, or discount at more than half off.

I’ll admit I wasn’t that sold on HelloFresh when I first tried it most of a decade ago. It was useful, it got me out of my staid routines, but I wasn’t impressed with the selection. It felt a little basic. But lately? Honestly, it’s kinda cosmopolitan these days, after expanding to a dozen countries and absorbing the supply networks from multiple other meal plans. When I last tested the HelloFresh meal kit (7/10, WIRED Recommends), I was surprised to find myself cooking credible home renditions of ramen, ponzu-plum beef stir fry, and Southwest-accented pork roasts. And when I’m able to pick up a HelloFresh discount code, it’s generally less than I’d spend on groceries anyway. So it’s a good moment to try out a lifestyle where the food comes in the mail.

Get 50% Off and up to 10 Free Meals as a New HelloFresh Customer

Right now, new and returning customers can take advantage of a HelloFresh discount code offering 50% off your first meal kit box plus a free item each week. Enter your email as part of the signup process, and you’ll be auto-subscribed to an email with even more offers for both new and existing customers. Plus, new customers can get up to 55% off and extra free breakfasts, desserts, and other items with other secret discounts.

HelloFresh Student and Educator Discounts: 55% Off, Free Shipping, Plus Extra 15% Off Education Discounts Available via UNiDAYS

HelloFresh meal kits are pretty amenable to dorm life if you order the ready-to-eat meals. They can also save time during grad school: Instead of ordering pizza, let the internet do your shopping and meal planning. But student budgets tend to be tight, of course. And so there are steeply discounted HelloFresh coupon codes specifically for students. Follow the link here for a HelloFresh education promo code offering 55% off your first box, free shipping, and a continuing discount of 15% off for the first year.

Discounts also apply to teachers who’ve never tried HelloFresh. Educators and school employees can get up to 12 free meals spread out across 3 boxes, plus free shipping. Click here for the HelloFresh promo code, or go here for more information about educator discounts.

Note that the student and educator discounts don’t combine with any other HelloFresh discounts or promotions.

Special Hero Discount for Military, Veterans, and Healthcare Workers

Military discounts are a long tradition in America. HelloFresh offers its own hero discount programs for first responders, health professionals, and military personnel. The discounts include 55% off the first order, free shipping, and 15% off for the first year of HelloFresh delivery boxes.

This program is open to nurses, hospital employees, EMTs, active military, veterans, and first responders. First responders include law enforcement, 911 dispatch, and firefighters. Click here to see if you’re eligible, or follow this link for more information about HelloFresh hero discounts.

Note that the hero discounts don’t combine with any other HelloFresh promo codes.

Give $40, Get $10 With the HelloFresh Referral Program

Already a HelloFresh subscriber? You’re still eligible for discounts if you pass along subscription information to your friends. Here’s how: Send your friends a $40 discount for their own affordable meal kits. Once they sign up using your HelloFresh referral code, you’ll also get a $10 credit on your next delivery.

These discounts stack. So if you sign up multiple friends with your referral code, you get multiple $10 discounts. Check out the HelloFresh meal kit referral program here.

Take Advantage of HelloFresh Come Back Offers

Some of these discounts are only available to new HelloFresh customers. But there’s a hack to getting discounts anyway. After you pause or cancel your subscription, check your inbox over the next few days or weeks. Often, you’ll get HelloFresh coupon codes for discounts.

Typical HelloFresh “come-back” offers after a canceled subscription include $100 to $180 off (spread out over several meal boxes); free shipping on the first box after re-subscribing; free items such as dessert, breakfast, or an extra protein per meal; or a free meal box after a break. Typical retention offers, for when customers try to cancel, include 40% off the next box if you decide not to cancel, or 25% off the next two meal kits. None of this is failsafe, of course; offers vary for each customer. But as with magazine subscriptions, sometimes canceling, or trying to cancel, will lead to a good discount offer from a company eager to keep your business.

When to Save the Most on HelloFresh Subscriptions

HelloFresh almost always has some sort of deal going, whether to bring in new customers with an especially choice HelloFresh coupon, or bring back previous customers with HelloFresh discount codes and retention offers. But summer tends to be one of the times they offer the steepest discounts, including 10 free meals across several boxes, complimentary appetizers, free ready-made items, or free shipping on select boxes.

The other big times for HelloFresh coupon codes are around Black Friday and the end of the year. HelloFresh often launches limited-edition holiday meal boxes and themed meal kits, not to mention discounts for returning customers looking to cook more at home as part of New Year’s resolutions.

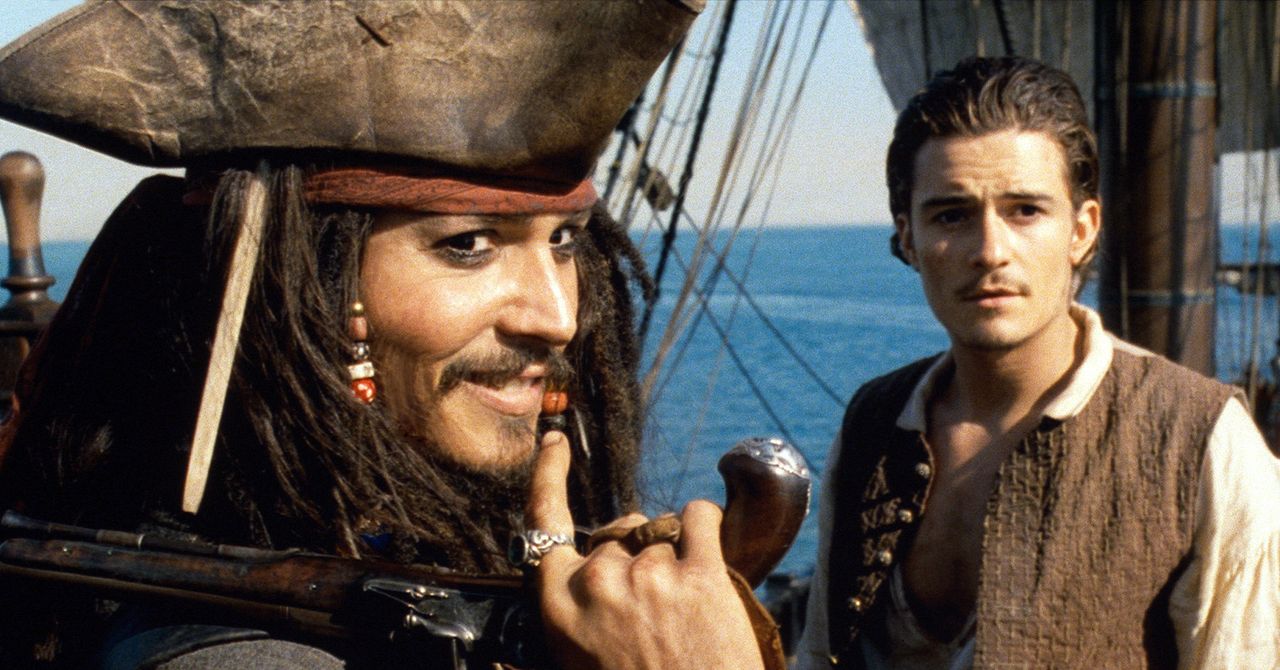

Could You Use a Rowboat to Walk on the Seafloor Like Jack Sparrow?

But you already know about this, because Fg is what normies call an object’s “weight,” and for a given volume, weight depends only on the density. Now, if you dropped these blocks in a lake, obviously the styrofoam would float and the steel would sink. So clearly it has something to do with density.

What if you had a block of water with the same volume? If you could somehow hold this cube of water, it would feel pretty heavy, about 62.4 pounds. Now, if you place it carefully in a lake, will it sink or bob on the surface like styrofoam? Neither, right? It’s just going to sit there.

Since it doesn’t move up or down, the total force on the block of water must be zero. That means there has to be a force counteracting gravity by pushing up with equal strength. We call this buoyancy, and for any object, the buoyancy force is equal to the weight of the water it displaces.

So let’s think about this. The steel block displaces the same amount of water, so it has the same upward-pushing buoyancy force as the block of water. But because it’s denser and has more mass, down it goes.

In general, an object will sink if the gravitational force exceeds the buoyancy force, and it will float if the buoyancy force exceeds the gravitational force. Another way of saying that is, an object will sink if it’s denser than water and it will float if it’s less dense.
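The comparison between the two forces can be sketched in a few lines of Python. This is only an illustration: it assumes one-cubic-foot blocks (which the 62.4-pound figure for water implies), and the weight densities for styrofoam and steel are approximate values chosen for the example, not numbers from the article. The `classify` function is a made-up name.

```python
import math

WATER_LB_PER_FT3 = 62.4  # weight of one cubic foot of fresh water, as quoted in the text

# Approximate weight densities in pounds per cubic foot (illustrative assumptions).
MATERIALS = {
    "styrofoam": 2.0,
    "water": WATER_LB_PER_FT3,
    "steel": 490.0,
}


def classify(weight_density: float, volume_ft3: float = 1.0) -> tuple[float, float, str]:
    """Return (weight, buoyancy, verdict) for a fully submerged block."""
    weight = weight_density * volume_ft3        # gravitational force, in pounds
    buoyancy = WATER_LB_PER_FT3 * volume_ft3    # weight of the displaced water
    if math.isclose(weight, buoyancy):
        verdict = "neutral"
    elif weight > buoyancy:
        verdict = "sinks"
    else:
        verdict = "floats"
    return weight, buoyancy, verdict


if __name__ == "__main__":
    for name, density in MATERIALS.items():
        weight, buoyancy, verdict = classify(density)
        print(f"{name:>9}: weight {weight:6.1f} lb, buoyancy {buoyancy:5.1f} lb -> {verdict}")
```

Every block gets the same buoyancy because every block displaces the same volume of water; only the weight changes, and that alone decides the verdict.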

And right in the middle an object will neither sink nor rise to the surface—we call that neutral buoyancy. Humans are pretty close to neutral because our bodies are 60 percent water. That’s why you feel weightless underwater—the buoyancy force pretty much offsets the gravitational force.

Avast! Hold on there, matey. Aircraft carriers are made of steel and weigh 100,000 tons, so why do they float? Can you guess? It’s because of their shape. Unlike a block of steel, a ship’s hull is hollow and filled with air, so it has a large volume relative to its weight.

But what if you start filling it with cargo? The ship gets heavier, which means it must displace more water to reach that equilibrium point. In general, when you launch a boat or ship into the water, it’ll sink down until the weight of the water it pushes aside equals the boat’s total weight.
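Here is a rough Python sketch of that equilibrium for a boat. It assumes a box-shaped hull with vertical sides floating in fresh water, which is a simplification, and the boat dimensions and weights in the example are hypothetical. The function `draft_ft` is an invented helper, not anything from the article.

```python
WATER_LB_PER_FT3 = 62.4  # fresh water, as in the text; seawater is slightly heavier


def draft_ft(total_weight_lb: float, length_ft: float, width_ft: float) -> float:
    """Depth a box-shaped hull sinks before buoyancy balances its weight."""
    if total_weight_lb < 0 or length_ft <= 0 or width_ft <= 0:
        raise ValueError("weight must be non-negative and dimensions positive")
    footprint_ft2 = length_ft * width_ft
    # Displaced volume is footprint * draft, and the weight of that water
    # must equal the boat's total weight at equilibrium.
    return total_weight_lb / (WATER_LB_PER_FT3 * footprint_ft2)


if __name__ == "__main__":
    # Hypothetical 10 ft by 4 ft rowboat weighing 150 lb, then loaded with 350 lb.
    empty = draft_ft(150.0, 10.0, 4.0)
    loaded = draft_ft(150.0 + 350.0, 10.0, 4.0)
    print(f"empty draft:  {empty:.3f} ft")
    print(f"loaded draft: {loaded:.3f} ft")
```

Adding cargo only changes the weight in the numerator, so the hull rides deeper in direct proportion to the total load.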

The Ricoh GR IV, the Cult Favorite Pocket Camera, Just Got Way Better

When I reviewed the GR III, I wrote about how much I liked snap focus mode, which allows you to set a predetermined focus distance regardless of the aperture. I set up my GR III to use autofocus when I half-pressed the shutter and snap when I quickly pressed, so that snap focus fired off the shot at my predetermined focus distance (usually 1.5 meters).

All of that remains, but there is now also a dedicated setting, Sn, on the mode dial that puts the camera in Snap Focus mode, which lets you dial in not only the distance you want to focus at but also the aperture you want to lock in. You can control the depth of field as well. I rather enjoyed this new mode and found myself shooting with it quite a bit.

Should You Get One?

The GR IV debuted at $1,497, which is significantly more than the GR III’s $999 price at launch. Is it worth the extra money? If you have a GR III and are frustrated by the autofocus, I think you will like the upgrade. It’s significant and, if you have the money, well worth it.

If you have any desire to use your pocket camera for video, this is not the one for you. See our guides to pocket cameras and the best travel cameras for some better, hybrid photo- and video-capable cameras. If you want an APS-C sensor that legitimately fits in your pocket, offers amazing one-handed control, and produces excellent images, the Ricoh GR IV is for you.

Personally, I am holding out for the GR IVx, which will hopefully, like the GR IIIx, be the same camera with a 40mm-equivalent lens. At the time of writing, Ricoh would not comment on whether there will be a GR IVx.
