Tech

Robot ‘backpack’ drone launches, drives and flies to tackle emergencies

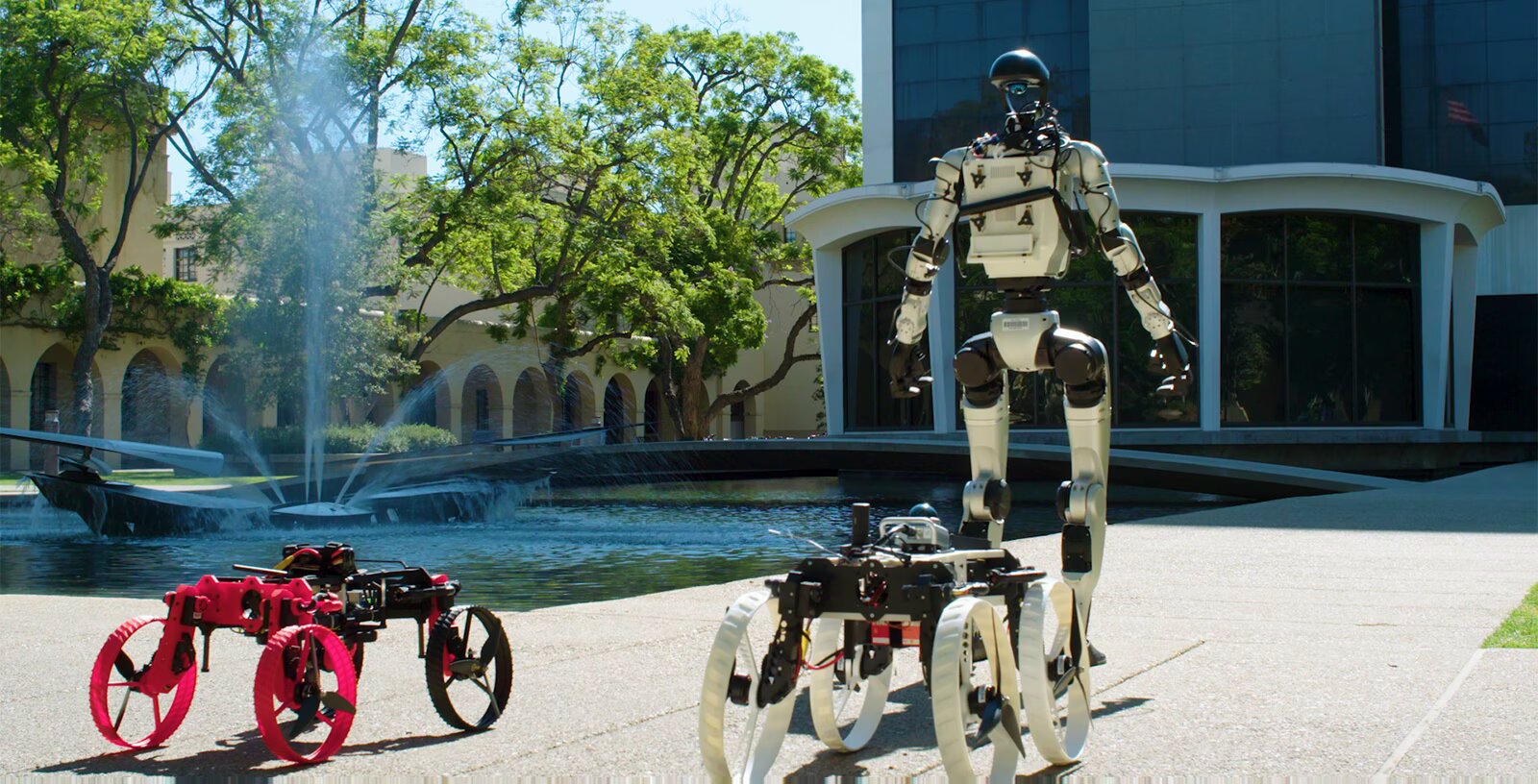

Introducing X1: The world’s first multirobot system that integrates a humanoid robot with a transforming drone that can launch off the humanoid’s back, and later, drive away.

The new multimodal system is one product of a three-year collaboration between Caltech’s Center for Autonomous Systems and Technologies (CAST) and the Technology Innovation Institute (TII) in Abu Dhabi, United Arab Emirates. The robotic system demonstrates the kind of innovative and forward-thinking projects that are possible with the combined global expertise of the collaborators in autonomous systems, artificial intelligence, robotics, and propulsion systems.

“Right now, robots can fly, robots can drive, and robots can walk. Those are all great in certain scenarios,” says Aaron Ames, the director and Booth-Kresa Leadership Chair of CAST and the Bren Professor of Mechanical and Civil Engineering, Control and Dynamical Systems, and Aerospace at Caltech. “But how do we take those different locomotion modalities and put them together into a single package, so we can excel from the benefits of all these while mitigating the downfalls that each of them have?”

To test the capability of the X1 system, the team recently conducted a demonstration on Caltech’s campus. The demo was based on the following premise: Imagine that there is an emergency somewhere on campus, creating the need to quickly get autonomous agents to the scene. For the test, the team modified an off-the-shelf Unitree G1 humanoid so that it could carry M4, Caltech’s multimodal robot that can both fly and drive, as if it were a backpack.

The demo started with the humanoid in Gates–Thomas Laboratory. It walked through Sherman Fairchild Library and went outside to an elevated spot where it could safely deploy M4. The humanoid then bent forward at the waist, allowing M4 to launch in its drone mode. M4 then landed and transformed into driving mode to efficiently continue on wheels toward its destination.

Before reaching that destination, however, M4 encountered the Turtle Pond, so it switched back to drone mode, quickly flew over the obstacle, and made its way to the site of the “emergency” near Caltech Hall. The humanoid and a second M4 eventually met up with the first responder.

“The challenge is how to bring different robots to work together so, basically, they become one system providing different functionalities. With this collaboration, we found the perfect match to solve this,” says Mory Gharib, Ph.D., the Hans W. Liepmann Professor of Aeronautics and Medical Engineering at Caltech and CAST’s founding director.

Gharib’s group, which originally built the M4 robot, focuses on building flying and driving robots as well as advanced control systems. The Ames lab, for its part, brings expertise in locomotion and developing algorithms for the safe use of humanoid robots. Meanwhile, TII brings a wealth of knowledge about autonomy and sensing with robotic systems in urban environments. A Northeastern University team led by engineer Alireza Ramezani assists in the area of morphing robot design.

“The overall collaboration atmosphere was great. We had different researchers with different skill sets looking at really challenging robotics problems spanning from perception and sensor data fusion to locomotion modeling and controls, to hardware design,” says Ramezani, an associate professor at Northeastern.

When TII engineers visited Caltech in July 2025, the partners built a new version of M4 that takes advantage of Saluki, a secure flight controller and computer technology developed by TII for onboard computing. In a future phase of work, the collaboration aims to give the entire system sensors, model-based algorithms, and machine learning-driven autonomy to navigate and adapt to its surroundings in real time.

“We install different kinds of sensors—lidar, cameras, range finders—and we combine all these data to understand where the robot is, and the robot understands where it is in order to go from one point to another,” says Claudio Tortorici, director of TII. “So, we bring the capability of the robots to move around with autonomy.”

Ames explains that even more was on display in the demo than meets the eye. For example, he says, the humanoid robot did more than simply walk around campus. Currently, most humanoid robots achieve a particular movement, such as walking or kicking, by taking data originally captured from human movements and scaling that action to the robot. If all goes well, the robot can imitate that action repeatedly.

But, Ames argues, “If we want to really deploy robots in complicated scenarios in the real world, we need to be able to generate these actions without necessarily having human references.”

His group instead builds mathematical models that describe, more broadly, the physics of applying such actions to a robot. When these are fused with machine learning techniques, the models imbue robots with more general abilities to navigate any situation they might encounter.

“The robot learns to walk as the physics dictate,” Ames says. “So X1 can walk; it can walk on different terrain types; it can walk up and down stairs, and importantly, it can walk with things like M4 on its back.”

An overarching goal of the collaboration is to make such autonomous systems safer and more reliable.

“I believe we are at a stage where people are starting to accept these robots,” Tortorici says. “In order to have robots all around us, we need these robots to be reliable.”

That is ongoing work for the team. “We’re thinking about safety-critical control, making sure we can trust our systems, making sure they’re secure,” Ames says. “We have multiple projects that extend beyond this one that study all these different facets of autonomy, and these problems are really big. By having these different projects and facets of our collaboration, we are able to take on these much bigger problems and really move autonomy forward in a substantial and concerted way.”

Citation: Robot ‘backpack’ drone launches, drives and flies to tackle emergencies (2025, October 14), retrieved 14 October 2025 from https://techxplore.com/news/2025-10-robot-backpack-drone-flies-tackle.html



Asus Made a Split Keyboard for Gamers—and Spared No Expense

The wheel on the left side has options to adjust actuation distance, rapid-trigger sensitivity, and RGB brightness. You can also adjust volume and media playback, and turn it into a scroll wheel. The LED matrix below it is designed to display adjustments to actuation distance but feels a bit awkward: Each 0.1 mm of adjustment fills its own bar, and it only uses the bottom nine bars, so the screen will roll over four times when adjusting (the top three bars, with dots next to them, illuminate to show how many times the screen has rolled over during the adjustment). The saving grace of this is that, when adjusting the actuation distance, you can press down any switch to see a visualization of how far you’re pressing it, then tweak the actuation distance to match.

Alongside all of this, the Falcata (and, by extension, the Falchion) now has an aftermarket switch option: TTC Gold magnetic switches. While this still brings the total to only two switches, it’s an improvement over the single switch option of most Hall effect keyboards.

Split Apart

Photograph: Henri Robbins

The internal assembly of this keyboard is straightforward yet interesting. Instead of a standard tray mount, where the PCB and plate bolt directly into the bottom half of the shell, the Falcata is more comparable to a bottom mount. The PCB screws into the plate from underneath, and the plate is screwed onto the bottom half of the case along the edges. While the difference between the two mounting methods is minimal, it does improve the typing experience by eliminating the “dead zones” caused by a post in the middle of the keyboard, and by slightly isolating typing from the case (which creates fewer vibrations when typing).

The top and bottom halves can easily be split apart by removing the screws on the plate (no breakable plastic clips here!), but on the left half, four cables connect the top and bottom halves of the keyboard, all of which need to be disconnected before fully separating the two sections. Once this is done, the internal silicone sound-dampening can easily be removed. The foam dampening, however, was adhered strongly enough that removing it left chunks of foam stuck to the PCB, making it impossible to re-adhere without new adhesive. This wasn’t a huge issue, since the foam could simply be placed back into the keyboard, but it is still frustrating to see when most manufacturers have figured this out.


These Sub-$300 Hearing Aids From Lizn Have a Painful Fit

Don’t call them hearing aids. They’re hearpieces, intended as a blurring of the lines between hearing aid and earbuds—or “earpieces” in the parlance of Lizn, a Danish operation.

The company was founded in 2015, and it haltingly developed its launch product through the 2010s, only to scrap it in 2020 when, according to Lizn’s history page, the hearing aid/earbud combo idea didn’t work out. But the company is seemingly nothing if not persistent, and four years later, a new Lizn was born. The revamped Hearpieces finally made it to US shores in the last couple of weeks.

Half Domes

Photograph: Chris Null

Lizn Hearpieces are the company’s only product, and their inspiration from the pro audio world is instantly palpable. Out of the box, these look nothing like any other hearing aids on the market, with a bulbous design that, while self-contained within the ear, is far from unobtrusive—particularly if you opt for the graphite or ruby red color scheme. (I received the relatively innocuous sand-hued devices.)

At 4.58 grams per bud, they’re as heavy as they look; within the in-the-ear space, few other models are more weighty, including the Kingwell Melodia and Apple AirPods Pro 3. The units come with four sets of ear tips in different sizes; the default mediums worked well for me.

The bigger issue isn’t how the tip of the device fits into your ear, though; it’s how the rest of the unit does. Lizn Hearpieces need to be delicately twisted into the ear canal so that one edge of the unit fits snugly behind the tragus, filling the concha. My ears may be tighter than others, but I found this no easy feat, as the device is so large that I really had to work at it to wedge it into place. As you might have guessed, over time, this became rather painful, especially because the unit has no hardware controls. All functions are performed by various combinations of taps on the outside of either of the Hearpieces, and the more I smacked the side of my head, the more uncomfortable things got.


Two Thinking Machines Lab Cofounders Are Leaving to Rejoin OpenAI

Thinking Machines cofounders Barret Zoph and Luke Metz are leaving the fledgling AI lab and rejoining OpenAI, the ChatGPT-maker announced on Thursday. OpenAI’s CEO of applications, Fidji Simo, shared the news in a memo to staff Thursday afternoon.

The news was first reported on X by technology reporter Kylie Robison, who wrote that Zoph was fired for “unethical conduct.”

A source close to Thinking Machines said that Zoph had shared confidential company information with competitors. WIRED was unable to verify this information with Zoph, who did not immediately respond to WIRED’s request for comment.

Zoph told Thinking Machines CEO Mira Murati on Monday he was considering leaving, then was fired today, according to the memo from Simo. She goes on to write that OpenAI doesn’t share the same concerns about Zoph as Murati.

The personnel shake-up is a major win for OpenAI, which recently lost its VP of research, Jerry Tworek.

Another Thinking Machines Lab staffer, Sam Schoenholz, is also rejoining OpenAI, the source said.

Zoph and Metz left OpenAI in late 2024 to start Thinking Machines with Murati, who had been the ChatGPT-maker’s chief technology officer.

This is a developing story. Please check back for updates.
