Tech

Who is Zico Kolter? A professor leads OpenAI safety panel with power to halt unsafe AI releases

If you believe artificial intelligence poses grave risks to humanity, then a professor at Carnegie Mellon University has one of the most important roles in the tech industry right now.

Zico Kolter leads a four-person panel at OpenAI that has the authority to halt the ChatGPT maker’s release of new AI systems if it finds them unsafe. That could be technology so powerful that an evildoer could use it to make weapons of mass destruction. It could also be a new chatbot so poorly designed that it will hurt people’s mental health.

“Very much we’re not just talking about existential concerns here,” Kolter said in an interview with The Associated Press. “We’re talking about the entire swath of safety and security issues and critical topics that come up when we start talking about these very widely used AI systems.”

OpenAI tapped the computer scientist to be chair of its Safety and Security Committee more than a year ago, but the position took on heightened significance last week when California and Delaware regulators made Kolter’s oversight a key part of their agreements to allow OpenAI to form a new business structure to more easily raise capital and make a profit.

Safety has been central to OpenAI’s mission since it was founded as a nonprofit research laboratory a decade ago with a goal of building better-than-human AI that benefits humanity. But after its release of ChatGPT sparked a global AI commercial boom, the company has been accused of rushing products to market before they were fully safe in order to stay at the front of the race. Internal divisions that led to the temporary ouster of CEO Sam Altman in 2023 brought concerns that it had strayed from its mission to a wider audience.

The San Francisco-based organization faced pushback—including a lawsuit from co-founder Elon Musk—when it began steps to convert itself into a more traditional for-profit company to continue advancing its technology.

Agreements announced last week by OpenAI along with California Attorney General Rob Bonta and Delaware Attorney General Kathy Jennings aimed to assuage some of those concerns.

At the heart of the formal commitments is a promise that decisions about safety and security must come before financial considerations as OpenAI forms a new public benefit corporation that is technically under the control of its nonprofit OpenAI Foundation.

Kolter will be a member of the nonprofit’s board but not on the for-profit board. But he will have “full observation rights” to attend all for-profit board meetings and have access to information it gets about AI safety decisions, according to Bonta’s memorandum of understanding with OpenAI. Kolter is the only person, besides Bonta, named in the lengthy document.

Kolter said the agreements largely confirm that his safety committee, formed last year, will retain the authorities it already had. The other three members also sit on the OpenAI board—one of them is former U.S. Army General Paul Nakasone, who was commander of the U.S. Cyber Command. Altman stepped down from the safety panel last year in a move seen as giving it more independence.

“We have the ability to do things like request delays of model releases until certain mitigations are met,” Kolter said. He declined to say if the safety panel has ever had to halt or mitigate a release, citing the confidentiality of its proceedings.

Kolter said there will be a variety of concerns about AI agents to consider in the coming months and years, from cybersecurity (“Could an agent that encounters some malicious text on the internet accidentally exfiltrate data?”) to security concerns surrounding AI model weights, which are numerical values that influence how an AI system performs.

“But there’s also topics that are either emerging or really specific to this new class of AI model that have no real analogues in traditional security,” he said. “Do models enable malicious users to have much higher capabilities when it comes to things like designing bioweapons or performing malicious cyberattacks?”

“And then finally, there’s just the impact of AI models on people,” he said. “The impact to people’s mental health, the effects of people interacting with these models and what that can cause. All of these things, I think, need to be addressed from a safety standpoint.”

OpenAI has already faced criticism this year about the behavior of its flagship chatbot, including a wrongful-death lawsuit from California parents whose teenage son killed himself in April after lengthy interactions with ChatGPT.

Kolter, director of Carnegie Mellon’s machine learning department, began studying AI as a Georgetown University freshman in the early 2000s, long before it was fashionable.

“When I started working in machine learning, this was an esoteric, niche area,” he said. “We called it machine learning because no one wanted to use the term AI because AI was this old-time field that had overpromised and underdelivered.”

Kolter, 42, has been following OpenAI for years and was close enough to its founders that he attended its launch party at an AI conference in 2015. Still, he didn’t expect how rapidly AI would advance.

“I think very few people, even people working in machine learning deeply, really anticipated the current state we are in, the explosion of capabilities, the explosion of risks that are emerging right now,” he said.

AI safety advocates will be closely watching OpenAI’s restructuring and Kolter’s work. One of the company’s sharpest critics says he’s “cautiously optimistic,” particularly if Kolter’s group “is actually able to hire staff and play a robust role.”

“I think he has the sort of background that makes sense for this role. He seems like a good choice to be running this,” said Nathan Calvin, general counsel at the small AI policy nonprofit Encode. Calvin, whom OpenAI targeted with a subpoena at his home as part of its fact-finding to defend against the Musk lawsuit, said he wants OpenAI to stay true to its original mission.

“Some of these commitments could be a really big deal if the board members take them seriously,” Calvin said. “They also could just be the words on paper and pretty divorced from anything that actually happens. I think we don’t know which one of those we’re in yet.”

© 2025 The Associated Press. All rights reserved. This material may not be published, broadcast, rewritten or redistributed without permission.

Citation:

Who is Zico Kolter? A professor leads OpenAI safety panel with power to halt unsafe AI releases (2025, November 2) retrieved 2 November 2025 from https://techxplore.com/news/2025-11-zico-kolter-professor-openai-safety.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.

Tech

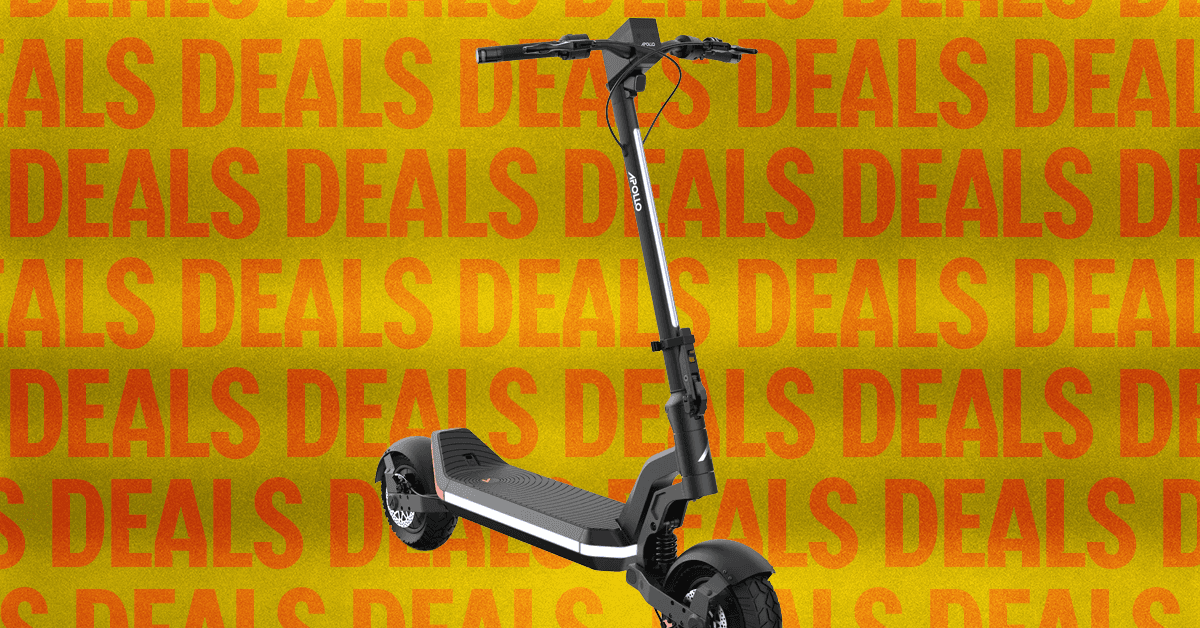

Just in Time for Spring, Don’t Miss These Electric Scooter Deals

The snow is melting, the days are getting longer, and I can almost smell the springtime ahead. Soon, we’ll be cruising around town on ebikes and electric scooters instead of burning fossil fuels. For now, the weather hasn’t quite caught up, which is great for markdowns. Many of the best electric scooters are still seeing significant discounts. If you’ve been thinking about buying one, now’s the best time: prices are low, and sunny commuting days are just ahead.

Gear editor Julian Chokkattu has spent five years testing more than 45 electric scooters. These are his top picks that are also on sale right now.

Apollo Go for $849 ($450 Off)

This is Gear editor Julian Chokkattu’s favorite scooter. The riding experience is powerful and smooth, thanks to its dual 350-watt motors and solid front and rear suspension. Its top speed of 28 miles per hour (mph) doesn’t make it the fastest scooter on the market, but it has good range. (Chokkattu is a very tall man and was able to travel 15 miles on a single charge at 15 mph.) Other features he appreciates include turn signals, a dot display, a bell, a headlight, and an LED strip for extra visibility.

Apollo Phantom 2.0 for $2,099 ($900 Off)

The Apollo Phantom 2.0 maxes out at 44 mph, with plenty of power from its dual 1,750-watt motors. It’s a gorgeous scooter, designed with 11-inch self-healing tubeless tires and a dual-spring suspension system for a smooth riding experience. But with great power comes great weight. At 102 pounds, the Phantom 2.0 is the heaviest electric scooter Chokkattu has tested, so I would recommend it only if you don’t live in a walk-up or if you have a garage.


What’s an E-Bike? California Wants You to Know

A few months ago, a family came into Pasadena Cyclery in Pasadena, California, for a repair on what they thought was their teenager’s e-bike. “I can’t fix that here,” Daniel Purnell, a store manager and technician, remembers telling them. “That’s a motorcycle.” The mother got upset. She didn’t realize that what she thought was an e-bike could go much faster, perhaps up to 55 miles per hour.

“There’s definitely an education problem,” Purnell says. In California, bike advocates are pushing a new bill designed to clear up that confusion around what counts as an electric bicycle—and what doesn’t.

It’s a tricky balance. On one hand, backers want to allow riders access to new, faster, and more affordable non-car transportation options, ones that don’t require licenses and are emission-free. On the other hand, people, and especially kids, seem to be getting hurt. E-bike-related injuries jumped more than 1,020 percent nationwide between 2020 and 2024, according to hospital data, though it’s not clear if the stats-keepers can routinely distinguish between e-bikes and their faster, “e-moto” cousins. (Moped and power-assisted cycle injuries jumped 67 percent in that same period.)

“We’re overdue to have better e-bike regulation,” says California state senator Catherine Blakespear, a Democrat who sponsored the bill and represents parts of North County in San Diego. “This has been an ongoing and growing issue for years.”

Senate Bill 1167 would make it illegal for retailers to label higher-powered, electric-powered vehicles as e-bikes. It would clarify that e-bikes have fully operative pedals and electric motors that don’t exceed 750 watts, enough to hit top speeds between 20 and 28 mph.

“We’re not against these devices,” says Kendra Ramsey, the executive director of the California Bicycle Coalition, which represents riders and is promoting the legislation. “People think they’re e-bikes and they’re not really e-bikes.”

Bill backers say they hope the fix, if it passes, makes a difference, especially for teenagers, who love the freedom that electric motors give them but can get into trouble if something goes wrong at higher speeds. Kids 17 and younger accounted for 20 percent of US e-bike injuries from 2020 to 2024, roughly in line with their share of the total population. But headlines, and the laws that follow them, have focused on teen injuries and even deaths.

There are no national laws governing e-bike riding. But bike backers spent years moving between states to pass laws that put e-bikes into three classes: Class 1 bikes, which have pedal assist that works only while the rider is pedaling and top out at 20 mph; Class 2 bikes, which have throttles that work without pedaling but still top out at 20 mph; and Class 3 bikes, which use pedal assist to reach up to 28 mph. Plenty of states and cities restrict the most powerful Class 3 bikes to riders older than 16. (In a complicated twist, some e-bikes have different “modes,” allowing riders to toggle between Class 2 and Class 3.)

Last year, researchers visited 19 San Francisco Bay Area middle and high schools and found that 88 percent of the electric two-wheeled devices parked there were so high-powered and high-speed that they didn’t comply with the three-class system at all.

E-bikes have clearly struck a chord with state policymakers: At least 10 bills introduced this year deal with e-bikes, according to Ramsey.

Some bike advocates believe injuries have less to do with e-bikes than with “e-motos,” a category that’s less likely to appear in retail stores than in the sort of social media ads attracting teens to the tech. These have more powerful motors and can travel in excess of 30 mph. Vehicles like the Surron Ultra Bee, which can hit top speeds of 55 mph, or the Tuttio ICT, which can hit 50 mph, are often marketed by retailers as “electric bikes.” Because so many sales happen online, it can be hard for people, and especially parents, to know what they’re getting into.

OpenAI Fires an Employee for Prediction Market Insider Trading

OpenAI has fired an employee following an investigation into their activity on prediction market platforms including Polymarket, WIRED has learned.

OpenAI CEO of Applications, Fidji Simo, disclosed the termination in an internal message to employees earlier this year. The employee, she said, “used confidential OpenAI information in connection with external prediction markets (e.g. Polymarket).”

“Our policies prohibit employees from using confidential OpenAI information for personal gain, including in prediction markets,” says spokesperson Kayla Wood. OpenAI has not revealed the name of the employee or the specifics of their trades.

Evidence suggests that this was not an isolated event. Polymarket runs on the Polygon blockchain network, so its trading ledger is pseudonymous but traceable. According to an analysis by the financial data platform Unusual Whales, there have been clusters of activity, which the service flagged as suspicious, around OpenAI-themed events since March 2023.

Unusual Whales flagged 77 positions in 60 wallet addresses as suspected insider trades, looking at the age of the account, trading history, and significance of investment, among other factors. Suspicious trades hinged on the release dates of products like Sora, GPT-5, and the ChatGPT Browser, as well as CEO Sam Altman’s employment status. In November 2023, two days after Altman was dramatically ousted from the company, a new wallet placed a significant bet that he would return, netting over $16,000 in profits. The account never placed another bet.

The behavior fits into patterns typical of insider trades. “The tell is the clustering. In the 40 hours before OpenAI launched its browser, 13 brand-new wallets with zero trading history appeared on the site for the first time to collectively bet $309,486 on the right outcome,” says Unusual Whales CEO Matt Saincome. “When you see that many fresh wallets making the same bet at the same time, it raises a real question about whether the secret is getting out.”

Prediction markets have exploded in popularity in recent years. These platforms allow customers to buy “event contracts” on the outcomes of future events ranging from the winner of the Super Bowl to the daily price of Bitcoin to whether the United States will go to war with Iran. There is a wide array of markets tied to events in the technology sector; you can trade on what Nvidia’s quarterly earnings will be, when Tesla will launch a new car, or which AI companies will IPO in 2026.

As the platforms have grown, so have concerns that they allow traders to profit from insider knowledge. “This prediction market world makes the Wild West look tame in comparison,” says Jeff Edelstein, a senior analyst at the betting news site InGame. “If there’s a market that exists where the answer is known, somebody’s going to trade on it.”

Earlier this week, Kalshi announced that it had reported several suspicious insider trading cases to the Commodity Futures Trading Commission, the government agency overseeing these markets. In one instance, an employee of the popular YouTuber Mr. Beast was suspended for two years and fined $20,000 for making trades related to the streamer’s activities; in another, the far-right political candidate Kyle Langford was banned from the platform for making a trade on his own campaign. The company also announced a number of initiatives to prevent insider trading and market manipulation.

While Kalshi has heavily promoted its crackdown on insider trading, Polymarket has stayed silent on the matter. The company did not return requests for comment.

In the past, major trades on technology-themed markets have sparked speculation that there are Big Tech employees profiting by using their insider knowledge to gain an edge. One notorious example is the so-called “Google whale,” a pseudonymous account on Polymarket that made over $1 million trading on Google-related events, including a market on who the most-searched person of the year would be in 2025. (It was the singer D4vd, who is best known for his connection to an ongoing murder investigation after a young fan’s remains were found in a vehicle registered to him.)
