Tech

AI model could boost robot intelligence via object recognition

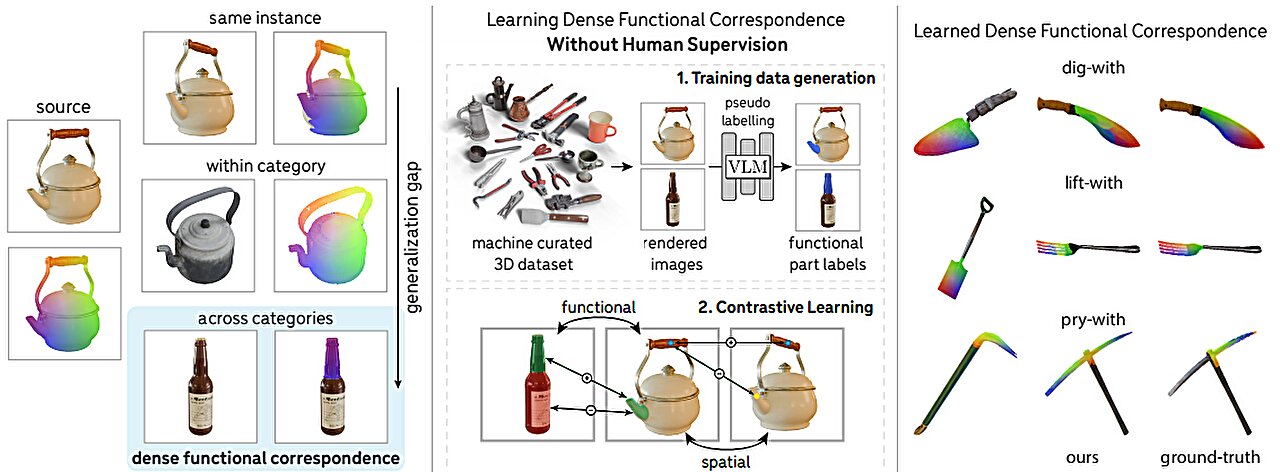

Stanford researchers have developed an innovative computer vision model that recognizes the real-world functions of objects, potentially allowing autonomous robots to select and use tools more effectively.

In the field of AI known as computer vision, researchers have successfully trained models that can identify objects in two-dimensional images. It is a skill critical to a future of robots able to navigate the world autonomously. But object recognition is only a first step. AI also must understand the function of the parts of an object—to know a spout from a handle, or the blade of a bread knife from that of a butter knife.

Computer vision experts call such utility overlaps “functional correspondence.” It is one of the most difficult challenges in computer vision. But now, in a paper that will be presented at the International Conference on Computer Vision (ICCV 2025), Stanford scholars will debut a new AI model that can not only recognize various parts of an object and discern their real-world purposes but also map those at pixel-by-pixel granularity between objects.

A future robot might be able to distinguish, say, a meat cleaver from a bread knife or a trowel from a shovel and select the right tool for the job. Potentially, the researchers suggest, a robot might one day transfer the skills of using a trowel to a shovel—or of a bottle to a kettle—to complete a job with different tools.

“Our model can look at images of a glass bottle and a tea kettle and recognize the spout on each, but also it comprehends that the spout is used to pour,” explains co-first author Stefan Stojanov, a Stanford postdoctoral researcher advised by senior authors Jiajun Wu and Daniel Yamins. “We want to build a vision system that will support that kind of generalization—to analogize, to transfer a skill from one object to another to achieve the same function.”

Establishing correspondence is the art of figuring out which pixels in two images refer to the same point in the world, even if the photographs are taken from different angles or show different objects. This is hard enough when both images show the same object, but, as the bottle versus tea kettle example shows, the real world is rarely so cut-and-dried. Autonomous robots will need to generalize across object categories and decide which object to use for a given task.

One day, the researchers hope, a robot in a kitchen will be able to select a tea kettle to make a cup of tea, know to pick it up by the handle, and to use the kettle to pour hot water from its spout.

Autonomy rules

True functional correspondence would make robots far more adaptable than they are currently. A household robot would not need training on every tool at its disposal but could reason by analogy to understand that while a bread knife and a butter knife may both cut, they each serve a specific purpose.

In their work, the researchers say, they have achieved “dense” functional correspondence, whereas earlier efforts achieved only sparse correspondence, defining a few key points on each object. The challenge so far has been a paucity of data, which typically had to be amassed through human annotation.

“Unlike traditional supervised learning where you have input images and corresponding labels written by humans, it’s not feasible to humanly annotate thousands of pixels individually aligning across two different objects,” says co-first author Linan “Frank” Zhao, who recently earned his master’s in computer science at Stanford. “So, we asked AI to help.”

The team was able to achieve a solution with what is known as weak supervision—using vision-language models to generate labels to identify functional parts and using human experts only to quality-control the data pipeline. It is a far more efficient and cost-effective approach to training.

“Something that would have been very hard to learn through supervised learning a few years ago now can be done with much less human effort,” Zhao adds.

In the kettle and bottle example, for instance, each pixel in the spout of the kettle is aligned with a pixel in the mouth of the bottle, providing dense functional mapping between the two objects. The new vision system can spot function in structure across disparate objects—a valuable fusion of functional definition and spatial consistency.
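The idea of aligning every pixel of one object part with a pixel of another can be illustrated with a generic technique: give every pixel in both images a feature vector, then match each pixel in one image to its most similar pixel in the other. The sketch below is not the Stanford team's model. It assumes the per-pixel descriptors already exist (in a real system they would come from a learned vision backbone), and the names `dense_correspondence`, `feats_a`, `feats_b`, `region_a` and `region_b` are hypothetical, chosen only for illustration.

```python
import math
from typing import Dict, List, Optional, Set, Tuple

Pixel = Tuple[int, int]                 # (row, col)
FeatureMap = List[List[List[float]]]    # [row][col] -> descriptor vector (assumed precomputed)


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two descriptors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    return dot / (nu * nv)


def dense_correspondence(
    feats_a: FeatureMap,
    feats_b: FeatureMap,
    region_a: Optional[Set[Pixel]] = None,
    region_b: Optional[Set[Pixel]] = None,
) -> Dict[Pixel, Pixel]:
    """Map every pixel of image A (optionally only those in a part mask, such as
    a hypothetical 'spout' region) to the most similar pixel of image B, using
    brute-force nearest-neighbour search over the per-pixel descriptors."""
    src = [(r, c) for r in range(len(feats_a)) for c in range(len(feats_a[0]))
           if region_a is None or (r, c) in region_a]
    dst = [(r, c) for r in range(len(feats_b)) for c in range(len(feats_b[0]))
           if region_b is None or (r, c) in region_b]
    if not dst:
        return {}  # nothing in B to match against
    mapping: Dict[Pixel, Pixel] = {}
    for (r, c) in src:
        fa = feats_a[r][c]
        # max() keeps the first pixel in row-major order when similarities tie
        mapping[(r, c)] = max(dst, key=lambda q: cosine(fa, feats_b[q[0]][q[1]]))
    return mapping


if __name__ == "__main__":
    # Toy 1x2 "images" with 2-D descriptors
    feats_a = [[[1.0, 0.0], [0.0, 1.0]]]
    feats_b = [[[0.0, 2.0], [3.0, 0.0]]]
    print(dense_correspondence(feats_a, feats_b))
    # prints {(0, 0): (0, 1), (0, 1): (0, 0)}
```

Restricting `region_a` and `region_b` to matching functional parts is one simple way to express "spout to spout" matching in this toy setting; the exhaustive search is quadratic in the number of pixels and is chosen here for clarity, not speed.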

Seeing the future

For now, the system has been tested only on images and not in real-world experiments with robots, but the team believes the model is a promising advance for robotics and computer vision. Dense functional correspondence is part of a larger trend in AI in which models are shifting from mere pattern recognition toward reasoning about objects. Where earlier models saw only patterns of pixels, newer systems can infer intent.

“This is a lesson in form following function,” says Yunzhi Zhang, a Stanford doctoral student in computer science. “Object parts that fulfill a specific function tend to remain consistent across objects, even if other parts vary greatly.”

Looking ahead, the researchers want to integrate their model into embodied agents and build richer datasets.

“If we can come up with a way to get more precise functional correspondences, then this should prove to be an important step forward,” Stojanov says. “Ultimately, teaching machines to see the world through the lens of function could change the trajectory of computer vision—making it less about patterns and more about utility.”

More information:

Weakly-Supervised Learning of Dense Functional Correspondences. dense-functional-correspondence.github.io/ On arXiv: DOI: 10.48550/arxiv.2509.03893

Citation:

AI model could boost robot intelligence via object recognition (2025, October 20) retrieved 20 October 2025 from https://techxplore.com/news/2025-10-ai-boost-robot-intelligence-recognition.html


Tech

Are DJI Drones Still Banned?

As of December 23, 2025, the US Federal Communications Commission barred China-based drone maker DJI from importing any new drones into the United States. That might sound like you can’t buy a DJI drone right now, but that’s not true. Head over to Amazon and just about the whole DJI drone lineup is still for sale. So what gives? Are they banned or not?

The key words in the previous paragraph were “any new drones.” Nothing DJI has made in the past is banned. No one is taking your drone away. It’s still perfectly legal to fly a drone. And this isn’t just a DJI ban. It’s a ban on foreign-made drones, which includes those from companies such as DJI, Autel Robotics, HoverAir, and others. That DJI is singled out in headlines has more to do with its market dominance than the way the rules are written.

I’d like to say that, with the biggest competitor essentially removed from the market, US-based companies are swooping in with new drones. Actually, we did say that once about Skydio, and we even liked the Skydio drone we tested, but since then Skydio has shifted away from the consumer market.

No New Drones


While it’s good news that the old stuff is still for sale, it’s unlikely that any new drones will arrive.

In order to be sold in the United States, anything that uses radio frequency components has to be approved by the FCC. Drones use radio frequencies when flying, so they fall under FCC jurisdiction. Because none of these drone makers has received the required security review from an approved US agency, they have all been placed on what’s called the Covered List. Companies on the Covered List do not have approval to import products into the US, which effectively bans them.

There’s some evidence that wheels are turning somewhere, in a way that could spell good news for consumer drone flyers. Last week, the FCC amended its Covered List to exempt drones and components already approved by the Defense Contract Management Agency’s Blue UAS list. The FCC says in its public statement, “The DoW has determined that UAS and UAS critical components included on Defense Contract Management Agency’s (DCMA’s) Blue UAS list do not currently present unacceptable risks to the national security of the United States or to the safety and security of US persons.”

For the most part, this doesn’t really impact consumer drones, unless you were in the market for a $13.6k Parrot Anafi USA Gov edition thermal drone, but it’s better than silence, which has been the primary thing we’ve heard leading up to the December ban.

Tech

Zayo expands network across Iberian Peninsula

In a move described as underscoring the company’s strategic focus on pan-European connectivity amid rising data demands from artificial intelligence (AI), cloud and next-generation technologies, Zayo Europe has launched a “landmark” network in Iberia.

Operating across 13 countries and connecting 47 markets, Zayo already connects more than 600 datacentres with a “future-ready” network spanning over 2.7 million fibre kilometres and eight subsea systems. The company said its mission is to deliver the infrastructure that powers Europe’s digital economy, offering tailored connectivity solutions that enable telecom service providers, cloud platforms, datacentres, system integrators and enterprises to deliver the network performance they require from core to cloud to edge.

Following a recent expansion in the German market, Iberia has become the next strategic link for Zayo, furthering the reach of its pan-European network. The new network will encompass the region’s leading cities, including Madrid, Lisbon, Barcelona, Bilbao and Sines.

It is being delivered in partnership with Spanish dark fibre operator Reintel, which boasts more than 54,000km of interconnected fibre infrastructure across Spain. The company provides neutral and high-quality connectivity products through a network of sites linked to both the energy and railway sectors.

Zayo Europe sees the partnership as a major milestone as it brings its 400GE-enabled wavelength network to the Iberian Peninsula and expands its Tier-1 IP offering to Portugal and to more Spanish cities.

The collaboration will look to deliver low-latency, high-capacity connectivity across Iberia, connecting the key business hubs. The partners see the new route as a way to enhance network diversity, reduce deployment times and strengthen connectivity options for businesses and carriers operating in the region.

Spanning over 3,500km of fibre across Iberia, Zayo Europe’s network will look to enable “seamless” datacentre-to-datacentre connectivity, faster cloud adoption and high-performance handling of data-intensive workloads. The move is set to strengthen Zayo Europe’s global reach, linking Iberia to international networks across the Mediterranean and Atlantic, and supporting the digital transformation of businesses across multiple continents.

“This partnership marks another important step in Zayo Europe’s journey to connect the continent’s most dynamic markets. Spain and Portugal are quickly emerging as major datacentre hubs, with a strong supply of renewable energy driving new investments to power AI and other cutting-edge technologies,” said Colman Deegan, Zayo Europe CEO.

“We’re delighted to partner with Reintel, who operate the highest quality, mission-critical fibre infrastructure in the region. By extending our network through their low latency, high availability fibre routes, we’re enabling enterprises, datacentres and carriers across Iberia to access our extensive high-performance connectivity that underpins Europe’s innovation economy. With the significant DC roll-out planned in 2026, Zayo Europe is poised to set connectivity trends for the decade ahead.”

Reintel CEO Francisco J. Blanca Patón added: “Zayo Europe’s expansion into Iberia aligns perfectly with our mission to accelerate Spain’s digital transformation. Combining our extensive dark fibre footprint with Zayo Europe’s international network and unparalleled service excellence creates powerful opportunities for customers across the region. This partnership will empower datacentres and businesses across Spain and Portugal to keep pace with rising data demands and, ultimately, strengthen Europe’s digital backbone. We look forward to what can be achieved together through 2026 and beyond.”

Tech

De-Gunk and Descale Your Keurig with These Cleaning Tips

It can be tricky to figure out how to clean your Keurig, but it’s important work. If your household is like mine, your pod coffee maker runs anywhere from three to seven times per day. All of that use can cause buildup and gunk, which can affect the taste of your coffee and the lifespan of your machine. But with proper maintenance and a dedicated routine, cleaning is a breeze. Here’s everything you need to know about light daily cleaning as well as deeper cleans.

Be sure to check out our related buying guides, including the Best Pod Coffee Makers, the Best Coffee Machines, the Best Coffee Subscriptions, and the Best Milk Frothers.

Daily Maintenance

To clean the housing of your Keurig coffee maker or other pod machine, just take a damp cloth and wipe down the outside. You can clean the K-Cup holder and needle by brushing or vacuuming away any loose debris like coffee grounds—be careful near the needle part since, obviously, it’s sharp.

Some machines come with a needle cleaning tool that you insert into the top and bottom of the needle, and a few people on various forums have used a paper clip instead. Some machines have removable pod holders that can be soaked in hot water. It’s always a good idea to refer to your specific model’s user guide, and you’ll probably want to unplug your machine beforehand.

To clean your drip tray and water reservoir, remove them and wash them by hand with hot, soapy water (though avoid using too much dish soap to prevent buildup). If your machine came with a carafe, wash it by hand or pop it in the dishwasher if it’s dishwasher-safe. Let them air dry or wipe them down with a lint-free towel after rinsing them off. You should replace the water in your reservoir often, especially if it’s been sitting for a while. If your machine has a water filter in its reservoir, replace it every two to three months. Most machines with these types of filters have maintenance reminders. Heed them!

For cleaning out the internal bits and pieces, you can use something like a Keurig Rinse Pod, which helps to flush out any excess oils or flavors that might be lingering. They are especially handy after brewing with flavored K-Cups like hot cocoa or some coffee varieties. You can also just run a hot water cycle every so often, which is a particularly good idea if you haven’t used your machine for a few days.

Deeper Cleaning and Descaling

Some manufacturers recommend using filtered or distilled water instead of tap water in your reservoirs, but I’ve always used tap water with the knowledge that I might have to clean my machine more frequently. You should deep-clean or descale your pod coffee maker every three to six months, or more often if you notice hard water stains, calcium deposits, or mineral buildup, or if your machine prompts you to deep-clean it.

You can do this a few ways. For the DIY method, fill your water tank with white vinegar and water (about half and half) and run large-capacity brew cycles until the reservoir is empty. Halfway through, consider letting the vinegar solution soak for around 20 to 30 minutes. Follow up with a few rinsing cycles using clean water until the vinegar smell is gone. Alternatively, you can use a dedicated Keurig descaling solution according to the instructions on the bottle. That solution can be used on non-Keurig machines too. Make sure your machine is fully rinsed out before brewing your next cup of coffee.

It’s important to perform these deeper cleaning cycles on a regular basis to ensure your machine lasts as long as possible. And that your coffee tastes good, of course.

