Tech

A firewall for science: AI tool identifies 1,000 ‘questionable’ journals

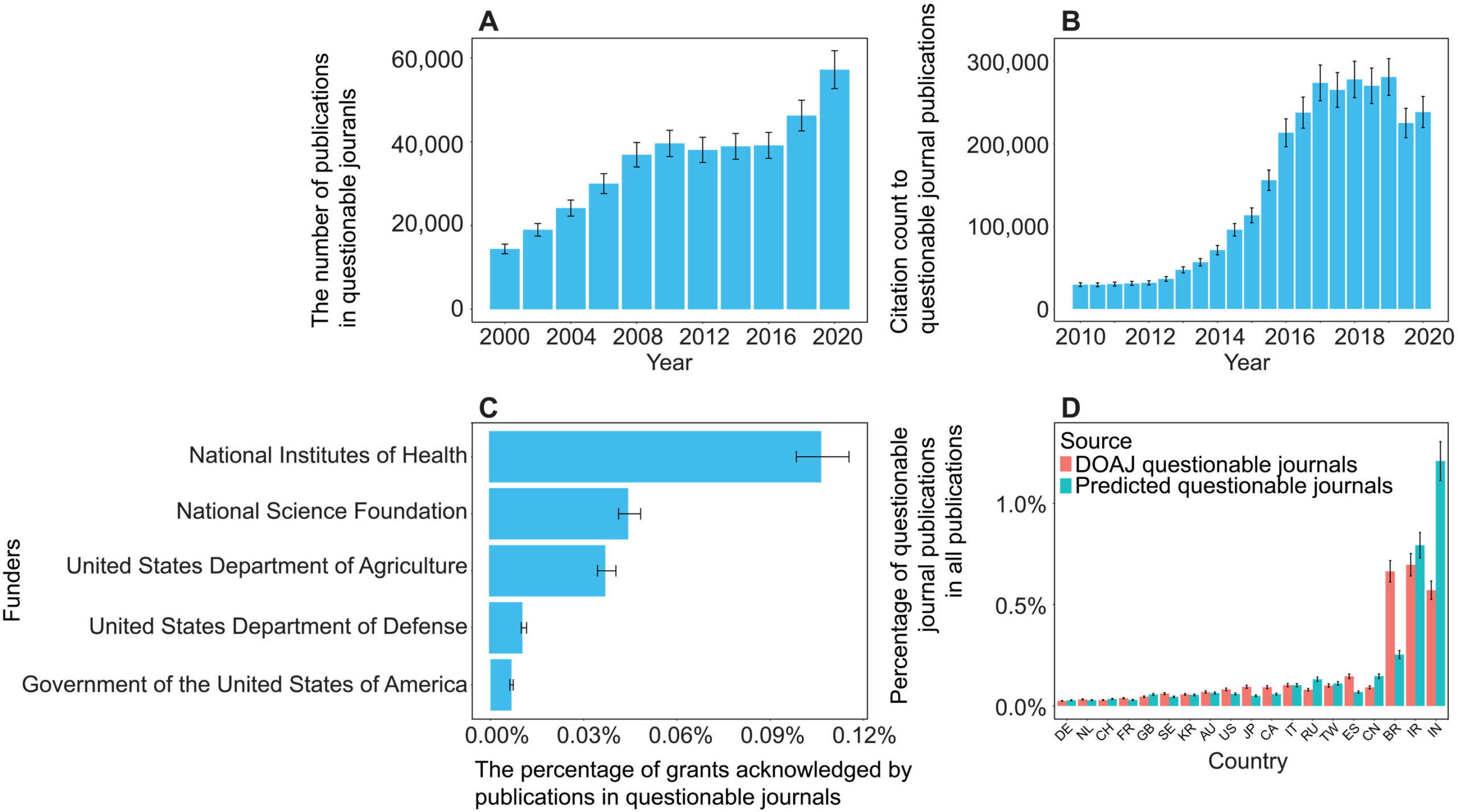

A team of computer scientists led by the University of Colorado Boulder has developed a new artificial intelligence platform that automatically seeks out “questionable” scientific journals.

The study, published Aug. 27 in the journal Science Advances, tackles an alarming trend in the world of research.

Daniel Acuña, lead author of the study and associate professor in the Department of Computer Science, gets a reminder of that several times a week in his email inbox: These spam messages come from people who purport to be editors at scientific journals, usually ones Acuña has never heard of, and offer to publish his papers—for a hefty fee.

Such publications are sometimes referred to as “predatory” journals. They target scientists, convincing them to pay hundreds or even thousands of dollars to publish their research without proper vetting.

“There has been a growing effort among scientists and organizations to vet these journals,” Acuña said. “But it’s like whack-a-mole. You catch one, and then another appears, usually from the same company. They just create a new website and come up with a new name.”

His group’s new AI tool automatically screens scientific journals, evaluating their websites and other online data for certain criteria: Do the journals have an editorial board featuring established researchers? Do their websites contain a lot of grammatical errors?

Acuña emphasizes that the tool isn’t perfect. Ultimately, he thinks human experts, not machines, should make the final call on whether a journal is reputable.

But in an era when prominent figures are questioning the legitimacy of science, stopping the spread of questionable publications has become more important than ever before, he said.

“In science, you don’t start from scratch. You build on top of the research of others,” Acuña said. “So if the foundation of that tower crumbles, then the entire thing collapses.”

The shakedown

When scientists submit a new study to a reputable publication, that study usually undergoes a practice called peer review. Outside experts read the study and evaluate it for quality—or, at least, that’s the goal.

A growing number of companies have sought to circumvent that process to turn a profit. In 2009, Jeffrey Beall, a librarian at CU Denver, coined the term “predatory journals” to describe these publications.

Often, they target researchers outside of the United States and Europe, such as in China, India and Iran—countries where scientific institutions may be young, and the pressure and incentives for researchers to publish are high.

“They will say, ‘If you pay $500 or $1,000, we will review your paper,'” Acuña said. “In reality, they don’t provide any service. They just take the PDF and post it on their website.”

A few different groups have sought to curb the practice. Among them is a nonprofit organization called the Directory of Open Access Journals (DOAJ). Since 2003, volunteers at the DOAJ have flagged thousands of journals as suspicious based on six criteria. (Reputable publications, for example, tend to include a detailed description of their peer review policies on their websites.)

But keeping pace with the spread of those publications has been daunting for humans.

To speed up the process, Acuña and his colleagues turned to AI. The team trained its system using the DOAJ’s data, then asked the AI to sift through a list of nearly 15,200 open-access journals on the internet.

Among those journals, the AI initially flagged more than 1,400 as potentially problematic.

Acuña and his colleagues asked human experts to review a subset of the suspicious journals. According to those reviewers, the AI made mistakes: it flagged an estimated 350 publications as questionable when they were likely legitimate. That still left more than 1,000 journals that the researchers identified as questionable.

“I think this should be used as a helper to prescreen large numbers of journals,” he said. “But human professionals should do the final analysis.”

Acuña added that the researchers didn’t want their system to be a “black box” like some other AI platforms.

“With ChatGPT, for example, you often don’t understand why it’s suggesting something,” Acuña said. “We tried to make ours as interpretable as possible.”

The team discovered, for example, that questionable journals published an unusually high number of articles. Compared with more legitimate journals, they also featured authors with a larger number of affiliations, as well as authors who cited their own research, rather than the research of other scientists, at unusually high rates.

The new AI system isn’t publicly accessible, but the researchers hope to make it available to universities and publishing companies soon. Acuña sees the tool as one way that researchers can protect their fields from bad data—what he calls a “firewall for science.”

“As a computer scientist, I often give the example of when a new smartphone comes out,” he said. “We know the phone’s software will have flaws, and we expect bug fixes to come in the future. We should probably do the same with science.”

More information:

Han Zhuang et al, Estimating the predictability of questionable open-access journals, Science Advances (2025). DOI: 10.1126/sciadv.adt2792

Citation: A firewall for science: AI tool identifies 1,000 ‘questionable’ journals (2025, August 30), retrieved 30 August 2025 from https://techxplore.com/news/2025-08-firewall-science-ai-tool-journals.html



Make the Most of Chrome’s Toolbar by Customizing It to Your Liking

The main job of Google Chrome is to give you a window to the web. With so much engaging content out there on the internet, you may not have given much thought to the browser framework that serves as the container for the sites you visit.

You’d be forgiven for still using the default toolbar configuration that was in place when you first installed Chrome. But if you take a few minutes to customize it, it can make a significant difference to your browsing. You can get quicker access to the key features you need, and you may even discover features you didn’t know about.

If you’re reading this in Chrome on the desktop, you can experiment with a few customizations right now—all it takes is a few clicks. Here’s how the toolbar in Chrome is put together, and all the different changes you can make.

The Default Layout

Look at the top right corner of your Chrome browser window and you’ll see two key buttons: One reveals your browser extensions (the jigsaw piece), and the other opens up your bookmarks (the double-star icon). There should also be a button showing a downward arrow, which gives you access to recently downloaded files.

Right away, you can start customizing. If you click the jigsaw piece icon to show your browser extensions, you can also click the pin button next to any one of these extensions to make it permanently visible on the toolbar. While you don’t want your toolbar to become too cluttered, it means you can put your most-used add-ons within easy reach.

You can also rearrange the extension icons you’ve pinned to the toolbar: Click and drag any of the icons to change its position (though the extensions panel itself has to stay in the same place). To remove an extension icon (without uninstalling the extension), right-click on it and choose Unpin.

Making Changes

Click the three dots up in the top right corner of any browser window and then Settings > Appearance > Customize your toolbar to get to the main toolbar customization panel, which has recently been revamped. Straight away you’ll see toggle switches that let you show or hide certain buttons on the toolbar.


The Piracy Problem Streaming Platforms Can’t Solve

“The trade-off isn’t only ethical or economic,” Andreaux adds. “It’s also about reliability, privacy and personal security.”

Abed Kataya, digital content manager at SMEX, a Beirut-based digital rights organization focused on internet policy in the Middle East and North Africa, says piracy in the region is shaped less by culture than by structural barriers.

“I see that piracy in MENA is not a cultural choice; rather, it has multiple layers,” Kataya tells WIRED Middle East.

“First, when the internet spread across the region, as in many other regions, people thought everything on it was free,” Kataya says. “This perception was based on the nature of Web 1.0 and 2.0, and how the internet was presented to people.”

Today, he says, structural barriers still lead many users towards illegal platforms. “Users began to watch online on unofficial streaming platforms for many reasons: lack of local platforms, inability to pay, bypassing censorship and, of course, to watch for free or at lower prices.”

Payment access also remains a major factor. “Not to mention that many are unbanked, do not have bank accounts, lack access to online payments, or do not trust paying with their cards and have a general distrust of online payments,” Kataya adds.

Algerian students also share external hard drives loaded with television series, while in Lebanon streaming passwords are frequently shared across households. In Egypt, large Telegram channels distribute content across different genres, including Korean dramas, classic Arab films and underground music.

“We grew up solving problems online,” says Mira. “When something is blocked, you find a way around it. It’s … a fundamental human instinct.”

Streaming Platforms Adapting

Andreaux says StarzPlay has tried to address some of the payment barriers that limit streaming adoption in the region. “StarzPlay recognized early that payment friction was a regional barrier to adoption,” he says. “That’s why we invested in flexible subscription models and alternative payment methods, including telecom-led billing options that make access easier across different markets.”

At the same time, international media companies are working together to combat piracy through the Alliance for Creativity and Entertainment (ACE), a coalition of film studios, television networks and streaming platforms that targets illegal distribution of films, television and sports content. Its members include global companies such as Netflix as well as regional players like OSN Group, which operates the streaming service OSN+ across the Middle East and North Africa.

Kataya notes that legitimate streaming platforms are still expanding across the region. “The user base of official streaming platforms has been growing in the region,” he says. “For example, Shahid, the Saudi platform, is expanding and Netflix has dedicated packages for the region.”

“Other players, like StarzPlay and local platforms in Egypt, are also finding their place,” Kataya adds. “Social media also plays a huge role, especially when a film is widely discussed or controversial.”

Piracy carries legal and security risks, Andreaux says. “Rather than just ‘free streaming’, piracy exposes consumers to malware and insecure payment channels,” he says. “It also weakens investment in local content by depriving creators of revenue and reducing jobs.”

But the structural barriers described by users across the region remain. For many viewers in North Africa and the Levant, the challenge is not choosing between piracy and legality—it is whether legitimate access exists at all.


X Is Drowning in Disinformation Following US and Israel’s Attack on Iran

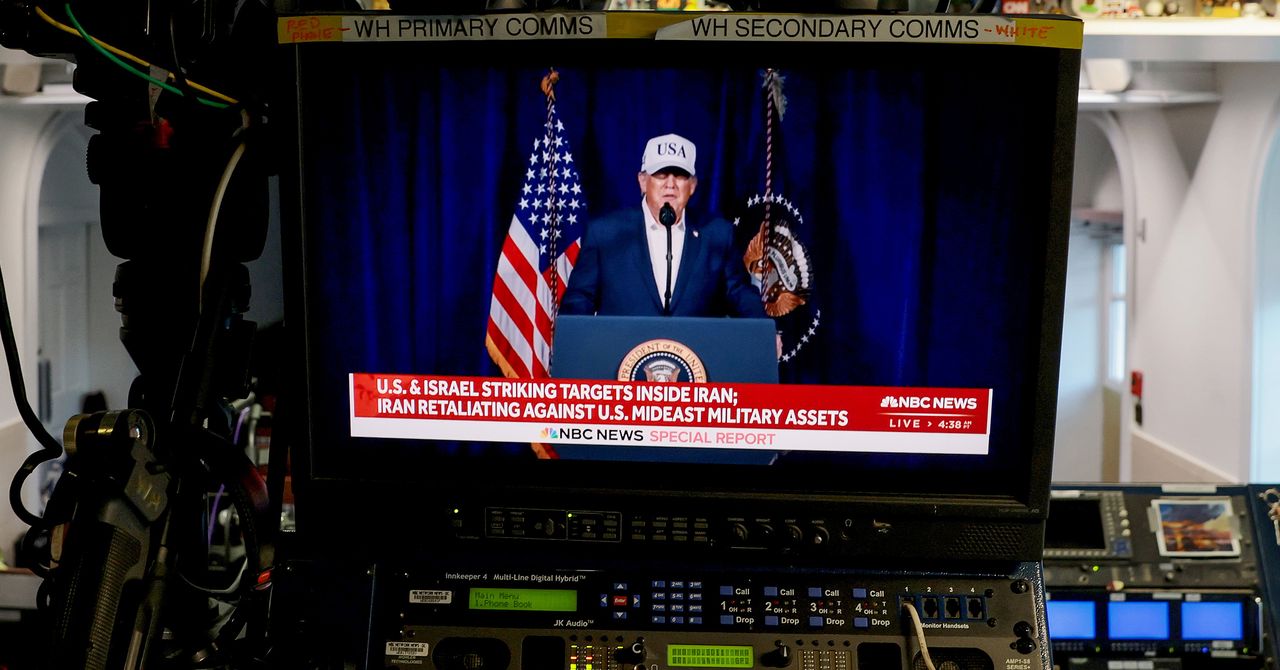

Minutes after Donald Trump announced that the US and Israeli governments had launched a “major combat operation” against Iran in the early hours of Saturday morning, disinformation about the attack and Tehran’s response flooded X.

WIRED has reviewed hundreds of posts on X, some of which have racked up millions of views, that promote misleading claims about the locations and scale of the attack.

Elon Musk’s social media platform is a veritable mess: In some cases, alleged video footage of the attack shared in posts on X is actually months or years old. In several posts, video footage of apparent attacks has been attributed to incorrect locations. A number of images shared on X appear to be altered or generated with AI. Other posts attempt to pass off video game footage as scenes from the conflict.

X did not respond to a request for comment. Under Musk’s stewardship, X has become a haven for disinformation, especially during major global breaking news events. At the beginning of the Israel-Hamas war, and more recently during protests against immigration enforcement in Los Angeles, the platform was drowned in inaccurate posts.

Almost all of the most viral posts reviewed by WIRED on Saturday came from accounts with blue check marks, meaning they pay X for its premium service and could be eligible to earn money based on how much engagement their posts generate, even if the content is false. While some posts with disinformation have a community note appended beneath them to correct the record, they remain up on the site, and it’s unclear how many people viewed them before the notes appeared.

One video posted by a blue check mark account claimed to show ballistic missiles over Dubai; the clip actually showed Iranian ballistic missiles fired at Tel Aviv in October 2024. The post has been viewed over 4.4 million times.

One of the most viral clips shared on X in the hours after the attack claims to show an Israeli fighter jet being shot down by Iranian air defense systems. The video has been shared by dozens of accounts, including one post which has been viewed more than 3.5 million times. The provenance of the video is unclear, but there have been no credible reports of any Israeli jets being shot down over Iran on Saturday.

Another account that claims to be an expert in open source intelligence posted a video showing explosions, alongside the caption: “6 Iranian Hypersonic Missiles hit the Indian-invested Israeli Haifa port. Massive damages reported.” The video has been viewed 64,000 times, but the footage was actually captured last July and shows an Israeli attack on the defense ministry in Damascus, Syria.

In a number of cases, pro-Iranian accounts have been using images and footage from Saturday’s attacks to falsely claim successful strikes against Israel. “IRANIAN MISSILE IMPACT IN TEL AVIV RIGHT NOW,” the Iran Observer account wrote in a post featuring an image of Dubai. The post had been viewed over 200,000 times before it was deleted, but dozens of other posts sharing the same image and making the same claims remain on X.

Tehran Times, a news outlet aligned with the Iranian government, posted what appears to be an AI-generated image on X which claims to show that “an American radar in Qatar was completely destroyed today in an Iranian drone strike.” The use of AI-generated images was flagged on X by Tal Hagin, a senior analyst with open source intelligence company Golden Owl. While there are reports that drone and missile attacks targeted the US Navy’s 5th Fleet headquarters in Bahrain, there are no reports yet of similar successful attacks in Qatar.

A pro-Trump account, which also features a blue check mark, posted images claiming to show the before and after pictures of the palace of Iranian Supreme Leader Ali Khamenei, which was targeted during Saturday’s missile attacks. (In a post on Truth Social, Trump claimed Khamenei was killed in an attack.) While the after picture appears to accurately show the palace after the attack, the before picture shows the Mausoleum of Ruhollah Khomeini, which is located on the other side of Tehran. The post has been viewed 365,000 times.
