Tech

AI method reconstructs 3D scene details from simulated images using inverse rendering

Over the past decades, computer scientists have developed many computational tools that can analyze and interpret images. These tools have proved useful for a broad range of applications, including robotics, autonomous driving, health care, manufacturing and even entertainment.

Most of the best-performing computer vision approaches employed to date rely on so-called feed-forward neural networks. These are computational models that process input images layer by layer, in a single forward pass, ultimately making predictions about them.

While some of these models perform well when tested on data similar to what they analyzed during training, they often fail to generalize to new images and different scenarios. In addition, their predictions and the patterns they extract from images can be difficult to interpret.

Researchers at Princeton University recently developed a new inverse rendering approach that is more transparent and could interpret a wide range of images more reliably. The new approach, introduced in a paper published in Nature Machine Intelligence, uses a generative artificial intelligence (AI)-based method to simulate the process of image creation, and then optimizes that simulation by gradually adjusting the inputs of the generative model until its output matches the observed image.

“Generative AI and neural rendering have transformed the field in recent years for creating novel content: producing images or videos from scene descriptions,” Felix Heide, senior author of the paper, told Tech Xplore. “We investigate whether we can flip this around and use these generative models for extracting the scene descriptions from images.”

The new approach developed by Heide and his colleagues relies on a so-called differentiable rendering pipeline. This is a simulation of image creation whose output can be differentiated with respect to its inputs, so that errors in the final image can be traced back to them. It relies on compressed representations of images created by generative AI models.

“We developed an analysis-by-synthesis approach that allows us to solve vision tasks, such as tracking, as test-time optimization problems,” explained Heide. “We found that this method generalizes across datasets, and in contrast to existing supervised learning methods, does not need to be trained on new datasets.”

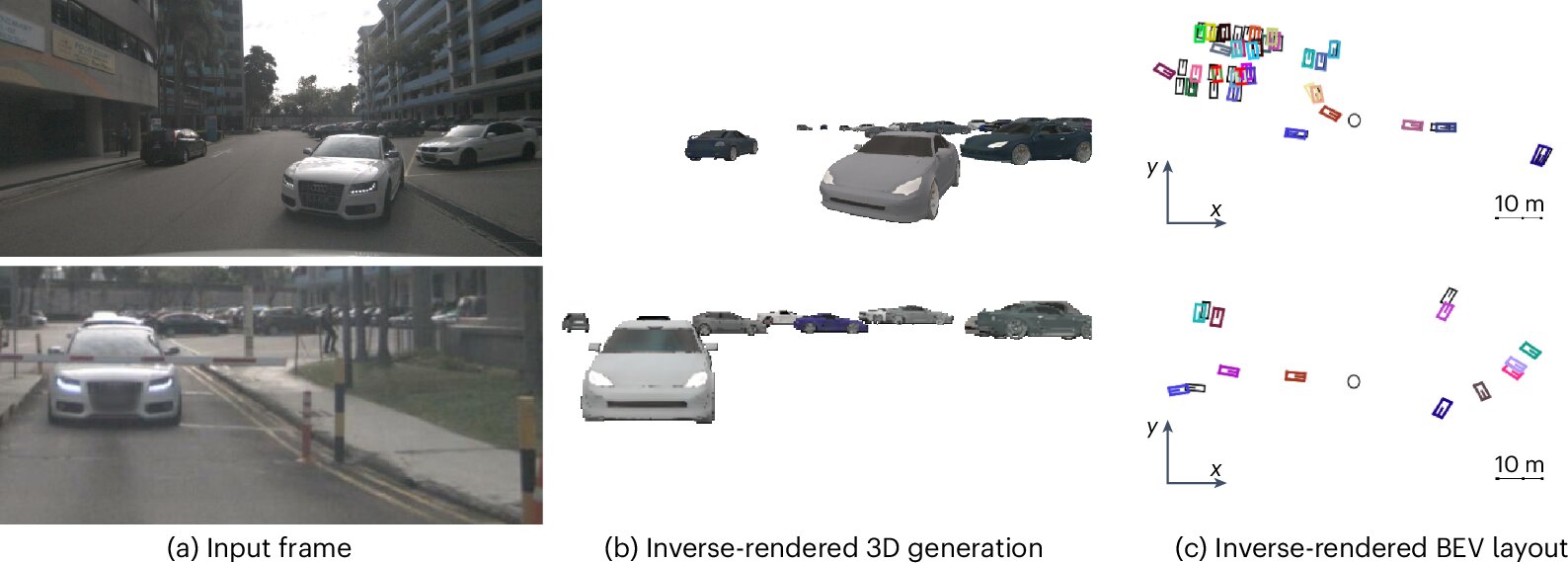

Essentially, the method developed by the researchers works by placing models of 3D objects in a virtual scene that depicts a real-world setting. These object models are produced by a generative AI model from a random sample of 3D scene parameters.

“We then render all these objects back together into a 2D image,” said Heide. “Next, we compare this rendered image with the real observed image. Based on how different they are, we backpropagate the difference through both the differentiable rendering function and the 3D generation model to update its inputs. In just a few steps, we optimize these inputs to make the rendered [images] match the observed images better.”
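To make the render, compare and backpropagate loop Heide describes more concrete, here is a toy sketch in Python. Everything in it is an illustrative assumption rather than the authors' code: `generate` is a hypothetical two-parameter linear decoder standing in for a 3D generative model, `render` draws a single Gaussian blob into a one-dimensional image instead of rendering 3D objects, and the gradients are derived by hand instead of with an autodiff framework. What it does share with the described approach is the analysis-by-synthesis structure: the generator stays fixed, and only its inputs are updated at test time so that the rendering matches the observation.

```python
import math
import random

WIDTH = 32    # pixels in the toy 1D "image" (assumption)
SIGMA = 2.0   # fixed blob width in pixels (assumption)


def generate(z):
    """Hypothetical stand-in for a 3D generative model: a fixed linear decoder
    mapping a 2D latent code to object parameters (position, brightness)."""
    center = 16.0 + 2.0 * z[0]
    brightness = 1.0 + 1.0 * z[1]
    return center, brightness


def render(center, brightness):
    """Toy differentiable renderer: draws one Gaussian blob into a 1D image."""
    return [brightness * math.exp(-((x - center) ** 2) / (2 * SIGMA ** 2))
            for x in range(WIDTH)]


def loss_and_grad(z, observed):
    """Squared error between rendered and observed images, plus its gradient
    with respect to the latent code z, via the chain rule applied by hand
    through the renderer and then through the generator."""
    center, brightness = generate(z)
    rendered = render(center, brightness)
    loss, d_center, d_brightness = 0.0, 0.0, 0.0
    for x, (r, o) in enumerate(zip(rendered, observed)):
        diff = r - o  # dL/dr = 2 * diff
        g = math.exp(-((x - center) ** 2) / (2 * SIGMA ** 2))
        loss += diff ** 2
        d_brightness += 2 * diff * g                          # dr/dbrightness = g
        d_center += 2 * diff * r * (x - center) / SIGMA ** 2  # dr/dcenter
    # Backpropagate through the generator: dcenter/dz0 = 2, dbrightness/dz1 = 1.
    return loss, [2.0 * d_center, 1.0 * d_brightness]


def invert(observed, steps=200, lr=0.05, seed=0):
    """Analysis by synthesis: start from a random latent sample and update only
    the generator's inputs (never its weights) by gradient descent."""
    rng = random.Random(seed)
    # The small uniform range is an assumption chosen so the toy blob overlaps
    # the target; it is not taken from the paper.
    z = [rng.uniform(-0.5, 0.5), rng.uniform(-0.5, 0.5)]
    for _ in range(steps):
        _, grad = loss_and_grad(z, observed)
        z = [zi - lr * gi for zi, gi in zip(z, grad)]
    return z


if __name__ == "__main__":
    # Synthesize an "observed image" from a known latent, then try to recover it.
    observed = render(*generate([1.0, 0.5]))
    z = invert(observed)
    print("recovered latent:", z)
    print("final loss:", loss_and_grad(z, observed)[0])
```

Running the script should recover a latent code close to the one used to synthesize the observation. Because nothing in `generate` is retrained, a new observation only requires rerunning `invert`, which loosely mirrors the article's point that the method does not need to be trained on new datasets.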

Optimizing 3D models through inverse neural rendering. From left to right: the observed image, initial random 3D generations, and three optimization steps that refine these to better match the observed image. The observed images are faded to show the rendered objects clearly. The method effectively refines object appearance and position, all done at test time with inverse neural rendering. Credit: Ost et al.

Generalization of 3D multi-object tracking with Inverse Neural Rendering. The method directly generalizes across datasets such as the nuScenes and Waymo Open Dataset benchmarks without additional fine-tuning and is trained on synthetic 3D models only. The observed images are overlaid with the closest generated object and tracked 3D bounding boxes. Credit: Ost et al.

A notable advantage of the team’s newly proposed approach is that it allows very generic 3D object generation models trained on synthetic data to perform well across a wide range of datasets containing images captured in real-world settings. In addition, the method’s outputs are far more explainable than the predictions of conventional approaches based on feed-forward machine learning models.

“Our inverse rendering approach for tracking works just as well as learned feed-forward approaches, but it provides us with explicit 3D explanations of its perceived world,” said Heide.

“The other interesting aspect is the generalization capabilities. Without changing the 3D generation model or training it on new data, our 3D multi-object tracking through Inverse Neural Rendering works well across different autonomous driving datasets and object types. This can significantly reduce the cost of fine-tuning on new data or at least work as an auto-labeling pipeline.”

This recent study could soon help to advance AI models for computer vision, improving their performance in real-world settings while also increasing their transparency. The researchers now plan to continue improving their method and start testing it on more computer vision-related tasks.

“A logical next step is the expansion of the proposed approach to other perception tasks, such as 3D detection and 3D segmentation,” added Heide. “Ultimately, we want to explore if inverse rendering can even be used to infer the whole 3D scene, and not just individual objects. This would allow our future robots to reason and continuously optimize a three-dimensional model of the world, which comes with built-in explainability.”

Written for you by our author Ingrid Fadelli, edited by Gaby Clark, and fact-checked and reviewed by Robert Egan.

More information:

Julian Ost et al., Towards generalizable and interpretable three-dimensional tracking with inverse neural rendering, Nature Machine Intelligence (2025). DOI: 10.1038/s42256-025-01083-x.

© 2025 Science X Network

Citation: AI method reconstructs 3D scene details from simulated images using inverse rendering (2025, August 23) retrieved 23 August 2025 from https://techxplore.com/news/2025-08-ai-method-reconstructs-3d-scene.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.

OpenAI Had Banned Military Use. The Pentagon Tested Its Models Through Microsoft Anyway

OpenAI CEO Sam Altman is still in the hot seat this week after his company signed a deal with the US military. OpenAI employees have criticized the move, which came after Anthropic’s roughly $200 million contract with the Pentagon imploded, and asked Altman to release more information about the agreement. Altman admitted it looked “sloppy” in a social media post.

While this incident has become a major news story, it may just be the latest and most public example of OpenAI creating vague policies around how the US military can access its AI.

In 2023, OpenAI’s usage policy explicitly banned the military from accessing its AI models. But some OpenAI employees discovered the Pentagon had already started experimenting with Azure OpenAI, a version of OpenAI’s models offered by Microsoft, two sources familiar with the matter said. At the time, Microsoft had been contracting with the Department of Defense for decades. It was also OpenAI’s largest investor, and had a broad license to commercialize the startup’s technology.

That same year, OpenAI employees saw Pentagon officials walking through the company’s San Francisco offices, the sources said. They spoke on the condition of anonymity as they aren’t authorized to comment on private company matters.

Some OpenAI employees were wary about associating with the Pentagon, while others were simply confused about what OpenAI’s usage policies meant. Did the policy apply to Microsoft? While sources tell WIRED it was not clear to most employees at the time, spokespeople from OpenAI and Microsoft say Azure OpenAI products are not, and were not, subject to OpenAI’s policies.

“Microsoft has a product called the Azure OpenAI Service that became available to the US Government in 2023 and is subject to Microsoft terms of service,” said spokesperson Frank Shaw in a statement to WIRED. Microsoft declined to comment specifically on when it made Azure OpenAI available to the Pentagon, but noted the service was not approved for “top secret” government workloads until 2025.

“AI is already playing a significant role in national security and we believe it’s important to have a seat at the table to help ensure it’s deployed safely and responsibly,” OpenAI spokesperson Liz Bourgeois said in a statement. “We’ve been transparent with our employees as we’ve approached this work, providing regular updates and dedicated channels where teams can ask questions and engage directly with our national security team.”

The Department of Defense did not respond to WIRED’s request for comment.

By January 2024, OpenAI had updated its policies to remove the blanket ban on military use. Several OpenAI employees found out about the policy update through an article in The Intercept, sources say. Company leaders later addressed the change at an all-hands meeting, explaining how the company would tread carefully in this area moving forward.

In December 2024, OpenAI announced a partnership with Anduril to develop and deploy AI systems for “national security missions.” Ahead of the announcement, OpenAI told employees that the partnership was narrow in scope and would only deal with unclassified workloads, the same sources said. This stood in contrast to a deal Anthropic had signed with Palantir, which would see Anthropic’s AI used for classified military work.

Palantir approached OpenAI in the fall of 2024 to discuss participating in its “FedStart” program, an OpenAI spokesperson confirmed to WIRED. The company ultimately turned it down, and told employees it would’ve been too high-risk, two sources familiar with the matter tell WIRED. However, OpenAI now works with Palantir in other ways.

Around the time the Anduril deal was announced, a few dozen OpenAI employees joined a public Slack channel to discuss their concerns about the company’s military partnerships, sources say and a spokesperson confirmed. Some believed the company’s models were too unreliable to handle a user’s credit card information, let alone assist Americans on the battlefield.

Don’t Risk Birdwatching FOMO—Put Out Your Hummingbird Feeders Now

Though most people associate the beginning of March with the hopefulness of spring and the indignities of daylight saving time, there’s another important event taking place in yards all over the country: hummingbird season.

While many species of hummingbirds can be seen in regions year-round, others are migratory, and this time typically marks their return from wintering grounds in Central and South America. These tiny birds can lose up to 40 percent of their body weight by the time they arrive here after having flown thousands of miles, and since many flowers haven’t bloomed yet, nectar feeders can be a source of essential fuel.

Though I test smart bird feeders year-round, I don’t use hummingbird feeders as often as I should. To prevent deadly bacteria and mold, they must be cleaned and refilled with new nectar every two or three days (a 1:4 ratio of granulated sugar to water is best; avoid any dyes or additives), and I don’t always have the time.

But if you are going to invest the energy in maintaining a hummingbird feeder, right now is the best time, as you have a chance to see migratory species you might not otherwise encounter, such as black-chinned hummingbirds. A smart feeder helps you ID them, whether they’re stopping at your feeder on their way north or arriving at their final destination.

Birdbuddy’s Pro is the smart hummingbird feeder I recommend and use myself when I’m not actively testing. The app is easy to navigate and sends cleaning reminders, the built-in solar roof keeps the battery charged, and, unlike other feeders, only the shallow bottom screws off for refilling. No having to pour sticky nectar through a narrow opening, or turn a giant cylinder upside down and risk spilling.

Note that it’s not perfect; the sensor is inconsistent and doesn’t capture every hummingbird that visits, but for the camera quality (5 MP photos, 2K video with slow-motion, 122-degree field of view) and ease of use, it’s a foible I’m willing to put up with. If you already have another Birdbuddy feeder, the hummingbird feeder images and videos will integrate seamlessly into your app feed.

Right now, the feeder is 37 percent off on Birdbuddy’s website—a deal I usually don’t see outside of shopping events like Black Friday or Amazon Prime Day. Note that the feeder only runs on 2.4 GHz Wi-Fi, and while it is fully functional without a subscription, a Birdbuddy Premium subscription will let you add friends and family members to your account so they can see the birds as well. That’s $99 a year through the app.

The Controversies Finally Caught Up to Kristi Noem

After a tenure marked by controversy and a contentious week of Congressional hearings, secretary Kristi Noem is out as head of the Department of Homeland Security.

President Donald Trump announced in a Truth Social post on Thursday that Noem would be replaced by senator Markwayne Mullin of Oklahoma, a staunch Trump ally and immigration hardliner. “The current Secretary, Kristi Noem, who has served us well, and has had numerous and spectacular results (especially on the Border!), will be moving to be Special Envoy for The Shield of the Americas, our new Security Initiative in the Western Hemisphere we are announcing on Saturday in Doral, Florida,” Trump wrote. “I thank Kristi for her service at ‘Homeland.’”

DHS did not immediately respond to a request for comment.

The agencies under DHS include Immigration and Customs Enforcement, US Customs and Border Protection, the Cybersecurity and Infrastructure Security Agency, the Federal Emergency Management Agency, US Citizenship and Immigration Services, the US Coast Guard, and others. It’s a sprawling network whose vast responsibilities and rapidly expanding budget have put it at the center of the Trump administration’s radical overhaul of immigration and border policy.

Speculation has swirled around Noem’s departure for months. Critics have assailed DHS’s aggressive immigration enforcement tactics, while Noem and figures like White House border czar Tom Homan have reportedly been at odds over how to execute the administration’s mass deportation agenda, with Noem and senior adviser Corey Lewandowski said to have emphasized sheer numbers of arrests and deportations above other considerations.

The relationship between Noem and Lewandowski has itself been a subject of controversy, with CNN reporting that a September meeting between the two and president Donald Trump grew “contentious.” Last month, the Wall Street Journal reported that Lewandowski attempted to fire a pilot during a flight for failing to bring Noem’s blanket from one plane to another during a transfer.

The ousted secretary faced mounting scrutiny over the deaths of US citizens during federal operations in Minneapolis, including the killings of Renee Good and Alex Pretti by federal agents under Noem’s command. In both cases, Noem publicly labeled the deceased “domestic terrorists,” a framing echoed by Trump and other key administration officials. Video evidence, witness testimony, and an independent autopsy contradicted the agency’s claims, including early assertions that Pretti brandished a firearm.

Scrutiny of Noem’s tenure extends beyond the fatal shootings in Minneapolis to a broader pattern of aggressive enforcement tactics, warrantless raids, and mass detention camps. A secretive policy directive issued in May 2025, first reported by the Associated Press, authorized ICE agents to forcibly enter private residences without a judicial warrant. The memo, signed by acting ICE director Todd Lyons, instructed agents to rely solely on an administrative removal document to bypass Fourth Amendment requirements. The policy led to multiple documented instances of federal agents entering the wrong homes, including a January raid in Minnesota where agents removed a US citizen at gunpoint with no legitimate reason.

A record 53 people died in ICE or CBP custody last year, according to House Democrats on the Committee on Homeland Security. Concurrently, Noem has initiated a $38 billion procurement effort to buy and refurbish up to 24 warehouses across the country, aimed at converting them into mass detention camps for people awaiting deportation.

Noem’s tenure has led to controversy at other DHS agencies as well. Her insistence on approving any contracts or grants over $100,000 at the department has caused particular strain at FEMA, which has experienced a massive funding backlog that has slowed normal processes at the agency. A report issued by Senate Democrats on Wednesday found that Noem’s vetting process at FEMA has held up more than 1,000 contracts, grants, and awards. Multiple FEMA employees have told WIRED that this process has made the agency less ready to respond to disasters and threats.
