Tech

Europe’s fastest supercomputer to boost AI drive

Europe’s fastest supercomputer, Jupiter, is set to be inaugurated on Friday in Germany, with its operators hoping it can help the continent in everything from climate research to catching up in the artificial intelligence race.

Here is all you need to know about the system, which boasts the power of around one million smartphones.

What is the Jupiter supercomputer?

Based at Juelich Supercomputing Center in western Germany, it is Europe’s first “exascale” supercomputer—meaning it will be able to perform at least one quintillion (or one billion billion) calculations per second.

The United States already has three such computers, all operated by the Department of Energy.

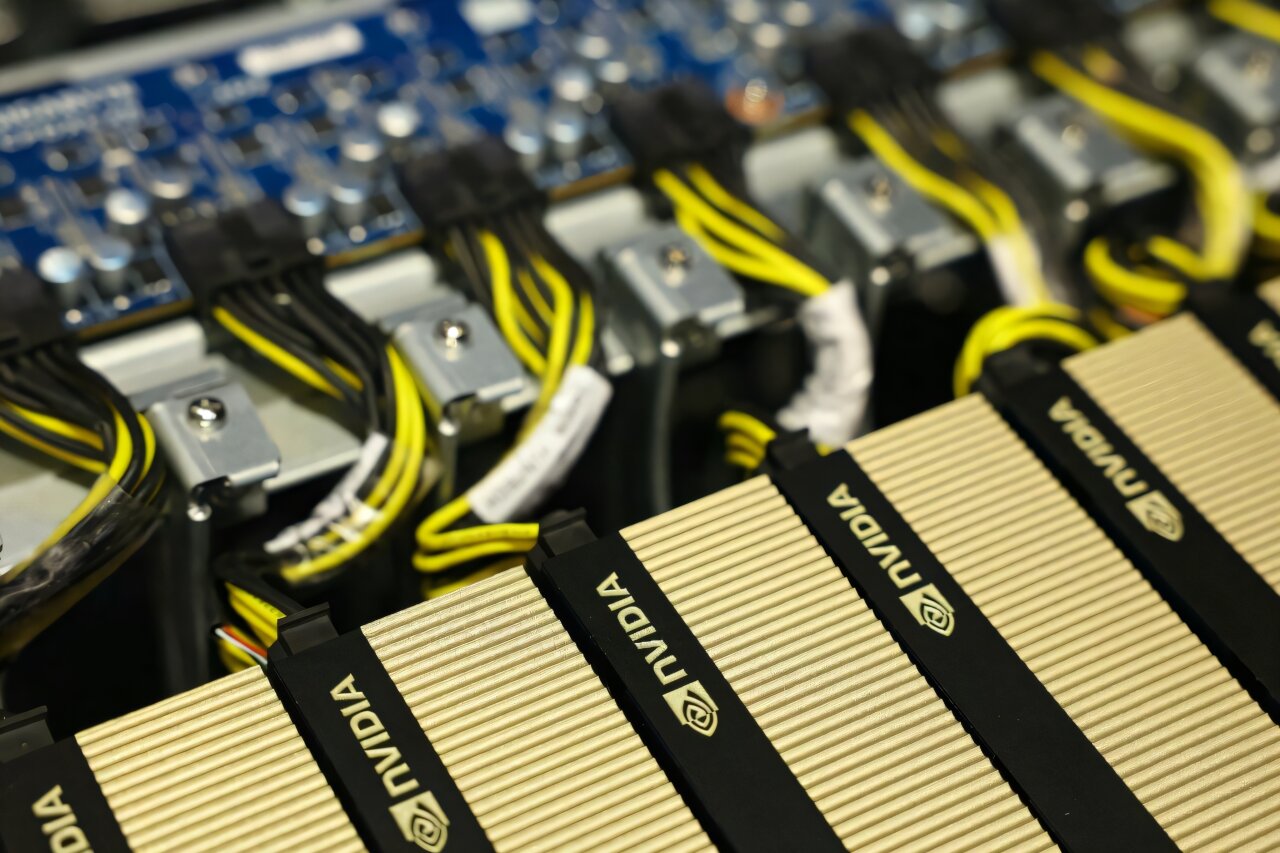

Jupiter is housed in a center covering some 3,600 square meters (38,000 square feet), about half the size of a football pitch. Its racks of processors are packed with about 24,000 Nvidia chips, which are favored by the AI industry.

Half of the 500 million euros ($580 million) needed to develop and run the system over the next few years comes from the European Union, with the rest coming from Germany.

Researchers across numerous fields, as well as companies, can access its vast computing power for purposes such as training AI models.

“Jupiter is a leap forward in the performance of computing in Europe,” Thomas Lippert, head of the Juelich center, told AFP, adding that it was 20 times more powerful than any other computer in Germany.

How can it help Europe in the AI race?

Lippert said Jupiter is the first supercomputer that could be considered internationally competitive for training AI models in Europe, which has lagged behind the US and China in the sector.

According to a Stanford University report released earlier this year, US-based institutions produced 40 “notable” AI models—meaning those regarded as particularly influential—in 2024, compared to 15 for China and just three for Europe.

“It is the biggest artificial intelligence machine in Europe,” Emmanuel Le Roux, head of advanced computing at Eviden, a subsidiary of French tech giant Atos, told AFP.

A consortium consisting of Eviden and German group ParTec built Jupiter.

Jose Maria Cela, senior researcher at the Barcelona Supercomputing Center, said the new system was “very significant” for efforts to train AI models in Europe.

“The larger the computer, the better the model that you develop with artificial intelligence,” he told AFP.

Large language models (LLMs) are trained on vast amounts of text and used in generative AI chatbots such as OpenAI’s ChatGPT and Google’s Gemini.

Nevertheless, because Jupiter is packed full of Nvidia chips, it remains heavily reliant on US tech.

The dominance of the US tech sector has become a source of growing concern as US-Europe relations have soured.

What else can the computer be used for?

Jupiter has a wide range of other potential uses beyond training AI models.

Researchers want to use it to create more detailed, long-term climate forecasts that they hope can more accurately predict the likelihood of extreme weather events such as heat waves.

Le Roux said that current models can simulate climate change over the next decade.

“With Jupiter, scientists believe they will be able to forecast up to at least 30 years, and in some models, perhaps even up to 100 years,” he added.

Others hope to simulate processes in the brain more realistically, research that could be useful in areas such as developing drugs to combat diseases like Alzheimer’s.

It can also be used for research related to the energy transition, for instance by simulating air flows around wind turbines to optimize their design.

Does Jupiter consume a lot of energy?

Yes, Jupiter will require on average around 11 megawatts of power, according to estimates—equivalent to the energy used to power thousands of homes or a small industrial plant.

But its operators insist that Jupiter is the most energy-efficient among the fastest computer systems in the world.

It uses the latest, most energy-efficient hardware and water-cooling systems, and the waste heat it generates will be used to heat nearby buildings, according to the Juelich center.

© 2025 AFP

Citation:

Europe’s fastest supercomputer to boost AI drive (2025, September 5) retrieved 5 September 2025 from https://techxplore.com/news/2025-09-europe-fastest-supercomputer-boost-ai.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.

L.L.Bean Promo Codes and Coupons: Up to 75% Off

L.L. Bean is famous for its outdoorsy appeal, with a range that runs from outerwear and supplies built to withstand the elements to laid-back lifestyle products. The company was established in 1912 by Leon Leonwood Bean in Maine, where it remains headquartered today, continually rolling out revered classics and updated essentials for today’s nature lovers. Take the Bean Boots: what started as L.L. Bean’s premier product ultimately helped shape the brand into what it is today. This definitive shoe, which can be worn on hiking trails and rain-slicked city streets alike, has remained true to the original version. If you’ve ever wanted to capture the essence of being a rugged Mainer or recreate a cozy cabin at home, there are plenty of L.L. Bean promo code options at your fingertips.

Get 10% Off Your First Order With an L.L.Bean Promo Code

You may bemoan email updates, but in terms of sales, this L.L. Bean coupon is a pretty low lift. Sign up for email updates from the company, and you get 10% off your first order. This offer is valid only once per email address, so choose your purchase wisely.

Take Up to 75% Off Outdoor Gear in the L.L.Bean Sale Section

Sales mean stocking up, especially on outdoor equipment and camping supplies ahead of your next adventure. Whether you’re about to take up fishing and need supplies, or have Noah Kahan concert tickets in sight and want extras from his L.L. Bean collaboration collection for the event, it’s all available. You can save up to 75% on these L.L. Bean sale items, no promo code needed.

This is a different sort of two-for-one special: twice a day, L.L. Bean posts new sales at 6 AM and 2 PM sharp, Eastern time. While these twice-daily markdowns don’t cover a huge range of inventory, what is posted usually comes at a steep discount akin to deals you’d see on Black Friday.

This L.L. Bean sale is like an online treasure hunt. The daily markdown sale features a new deal each day, available from 6 AM to midnight Eastern time. Inventory leans toward gear, such as backpacks, blankets, and shoes.

Score Free Shipping on Orders Over $75

We’ve all abandoned our online shopping carts at one point or another once we saw how much shipping was going to cost. Standard shipping on an L.L. Bean order usually costs $8 if the order is under $75. Once your order reaches $75, you automatically score free shipping.

Military, First Responders, Medical Workers, Teachers, and Students Can Save an Additional 10%

Working in the medical field or as a first responder can often be a tough, thankless job. But there’s a special L.L. Bean sale for medical workers and first responders, so you can stock up on supplies for when you rest and recharge in your downtime. Use the L.L. Bean first responder discount for 10% off, and be sure to verify your license status through SheerID.

The L.L. Bean military discount offers 10% off to military personnel, current or former. This discount also applies to family members; if you or a family member would like to partake, verify your status via SheerID.

Teachers deserve their (wild)flowers. To make sure you have what you need for your next outdoor adventure, and as a thank-you, you can get 10% off with the special L.L. Bean teacher discount. College students can also get 10% off with the L.L. Bean student discount. To redeem either of these discounts, make sure to verify your teaching or student (or both!) status via SheerID.

Earn 20% Off With the L.L.Bean Mastercard

If you’re hunting for a potential credit card candidate and are already an avid L.L. Bean fan, this is the opportunity for you. You can earn 20% off once approved for an L.L. Bean Mastercard, along with free shipping on all orders when you use it, with no minimum purchase necessary.

What Is That Mysterious Metallic Device US Chief Design Officer Joe Gebbia Is Using?

Joe Gebbia, cofounder of Airbnb and the US Chief Design Officer appointed by Trump, was spotted in San Francisco today using a mysterious metallic device. In a social media post on X viewed over 500,000 times, a man who looks like Gebbia sits with an espresso at a coffee shop. He’s wearing metallic buds that bisect his ears, with a matching clamshell-shaped disc in front of him on the counter.

After the video was posted Monday morning, social media users were quick to suggest that the device could be a prototype from OpenAI’s upcoming line of hardware devices designed in partnership with famed Apple designer Jony Ive. When WIRED reached out, an OpenAI spokesperson declined to comment on the video that appears to show Gebbia. Gebbia also did not respond to a request for comment.

The device Gebbia appears to be wearing looks quite similar to the hardware seen in a fake OpenAI ad that was widely circulated on Reddit and social media in February. That video from last month seemingly showed Pillion actor Alexander Skarsgård interacting with an AI device that had a similar-looking pair of earbuds and a circular disc. At the time, OpenAI denounced the widely seen video as not real. “Fake news,” wrote OpenAI President Greg Brockman at the time, responding to a social media post.

The earbuds seen in the video of Gebbia also look quite similar in shape to the Huawei FreeClip 2, a pair of open earbuds released earlier this year. However, the clamshell seen on the coffee counter next to Gebbia is different from Huawei’s most recent headphone case. It would also be quite surprising if a government official were seen using Huawei tech, considering the Chinese company is effectively banned from selling its phones in the US due to security concerns.

WIRED’s audio experts say he’s most likely wearing open earbuds, as Gebbia’s pair share some similarities with Soundcore’s AeroClips or Sony’s LinkBuds Clip, though the cases for those buds don’t match what’s on the table in front of Gebbia. WIRED also ran the photo and video through software that attempts to identify AI-generated outputs and other deepfakes. The detection software, from a company called Hive, says the odds are low that this imagery of Gebbia was generated by AI. Still, AI detectors are not always reliable and can produce false results. It’s possible that the entire post could be a synthetic hoax.

Could this be some kind of soft-launch teaser for OpenAI’s hardware? The timing of a trickle-out would make sense, since the company may ship devices to consumers sometime in early 2027. Still, OpenAI denied any involvement with the previous pseudo-ad for the metallic AI hardware, with its shiny earbuds and matching disc.

The ‘European’ Jolla Phone Is an Anti-Big-Tech Smartphone

“There are Chinese components as well—we are totally open about it—but the key is that as we compile the software ourselves and install it in Finland, we protect the integrity of the product,” Pienimäki says.

What sets Sailfish OS apart from competitors like GrapheneOS or /e/OS is that it’s based on Linux rather than on the Android Open Source Project. That means it has no ties to Google, so there’s no need for the company to “deGoogle” the software, which gives a greater sense of sovereignty over the software (and now the hardware). Still, it’s able to run Android apps, though the implementation isn’t perfect. Another common criticism is that it’s not as secure as options like GrapheneOS, where every app is sandboxed.

There’s a good chance some Android apps on Sailfish OS will run into issues, which is why the startup wizard asks whether you want to install services like microG, open source software that can run Google services on devices that don’t have the Google Play Store. That makes the phone an easier on-ramp for people without a technical background who are coming from traditional smartphones. You don’t even need to create a Sailfish OS account to use the Jolla Phone.

Jolla’s effort is hardly the first to push the anti-Big Tech narrative. A wave of other hardware and software companies offer a “deGoogled” experience, whether that’s Murena from France and its privacy-friendly /e/OS operating system, or the Canadian GrapheneOS, which just announced a partnership with Motorola. At CES earlier this year, the Swiss company Punkt also teamed up with ApostrophyOS to deploy its software on the new MC03 smartphone. Jolla is following a broader European trend of reducing reliance on US companies, such as French officials ditching Zoom for French-made videoconferencing software earlier this year.

The Phone

A common problem with these niche smartphones is that they tend to cost a lot of money for what their specs deliver. Take the Light Phone III, for example, a fairly low-tech anti-smartphone that doesn’t enjoy the benefits of economies of scale, resulting in an outlandish $699 price. The Jolla Phone is in a similar boat, though its specs-to-value ratio is a little more respectable.

It’s powered by a midrange MediaTek Dimensity 7100 5G chip with 8 GB of RAM and 256 GB of storage, plus a microSD card slot and a dual-SIM tray. There’s a 6.36-inch 1080p AMOLED screen, two main cameras, and a 32-megapixel selfie shooter. The 5,500-mAh battery is fairly large considering the phone’s size. Connectivity is a little dated, though, with Wi-Fi 6 and Bluetooth 5.4.

Uniquely, the Jolla Phone brings back “The Other Half” functional rear covers from the original Jolla handset. These swappable back covers have pogo pins that interface with the phone, allowing people to create unique accessories like a second display on the back of the phone or even a keyboard attachment. There’s an Innovation Program where the community can co-create functional covers and 3D print them. And yes, a removable rear cover means the Jolla Phone’s battery is user-replaceable.
