Tech

Method teaches generative AI models to locate personalized objects

Say a person takes their French Bulldog, Bowser, to the dog park. Identifying Bowser as he plays among the other canines is easy for the dog owner to do while onsite.

But if someone wants to use a generative AI model like GPT-5 to monitor their pet while they are at work, the model could fail at this basic task. Vision-language models like GPT-5 often excel at recognizing general objects, like a dog, but they perform poorly at locating personalized objects, like Bowser the French Bulldog.

To address this shortcoming, researchers from MIT and the MIT-IBM Watson AI Lab have introduced a new training method that teaches vision-language models to localize personalized objects in a scene.

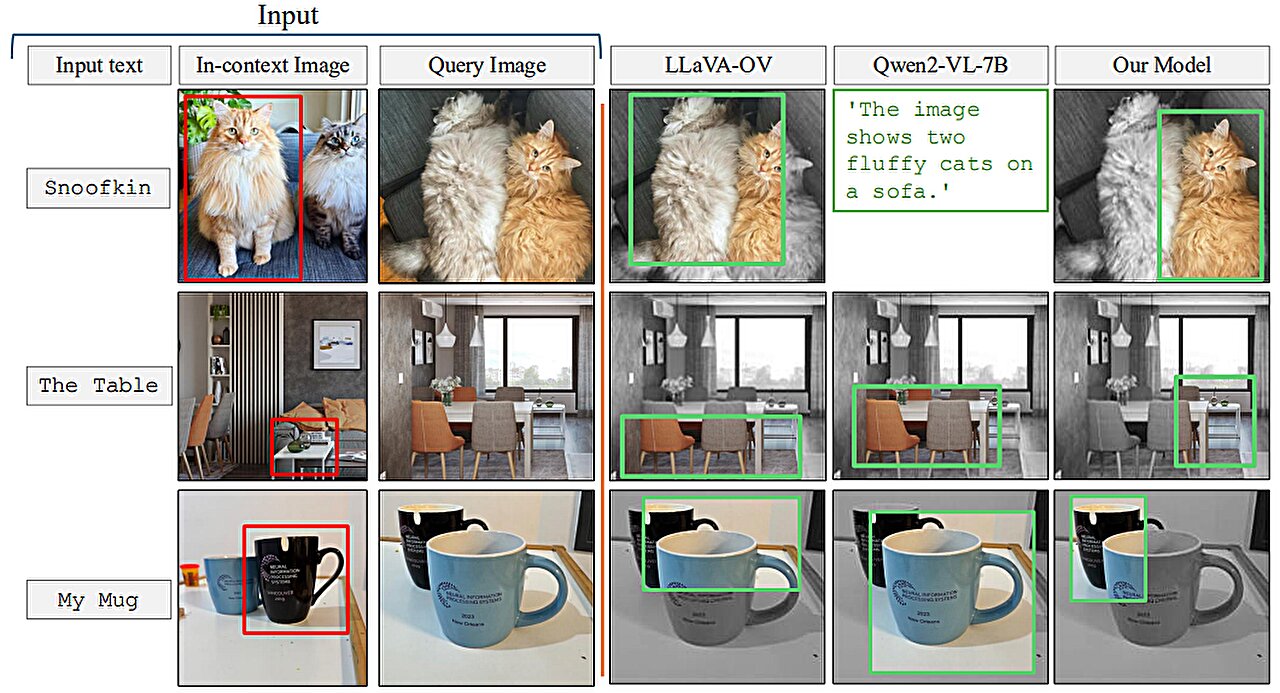

Their method uses carefully prepared video-tracking data in which the same object is tracked across multiple frames. They designed the dataset so the model must focus on contextual clues to identify the personalized object, rather than relying on knowledge it previously memorized.

When given a few example images showing a personalized object, like someone’s pet, the retrained model is better able to identify the location of that same pet in a new image.

Models retrained with their method outperformed state-of-the-art systems at this task. Importantly, their technique leaves the rest of the model’s general abilities intact.

This new approach could help future AI systems track specific objects across time, like a child’s backpack, or localize objects of interest, such as a species of animal in ecological monitoring. It could also aid in the development of AI-driven assistive technologies that help visually impaired users find certain items in a room.

“Ultimately, we want these models to be able to learn from context, just like humans do. If a model can do this well, rather than retraining it for each new task, we could just provide a few examples and it would infer how to perform the task from that context. This is a very powerful ability,” says Jehanzeb Mirza, an MIT postdoc and senior author of a paper on this technique posted to the arXiv preprint server.

Mirza is joined on the paper by co-lead authors Sivan Doveh, a graduate student at the Weizmann Institute of Science, and Nimrod Shabtay, a researcher at IBM Research; James Glass, a senior research scientist and the head of the Spoken Language Systems Group in the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL); and others. The work will be presented at the International Conference on Computer Vision (ICCV 2025), held Oct 19–23 in Honolulu, Hawai’i.

An unexpected shortcoming

Researchers have found that large language models (LLMs) can excel at learning from context. If an LLM is given a few examples of a task, like addition problems, it can learn to answer new addition problems based on the context that has been provided.

A vision-language model (VLM) is essentially an LLM with a visual component connected to it, so the MIT researchers thought it would inherit the LLM’s in-context learning capabilities. But this is not the case.

“The research community has not been able to find a black-and-white answer to this particular problem yet. The bottleneck could arise from the fact that some visual information is lost in the process of merging the two components together, but we just don’t know,” Mirza says.

The researchers set out to improve VLMs’ ability to do in-context localization, which involves finding a specific object in a new image. They focused on the data used to retrain existing VLMs for a new task, a process called fine-tuning.

Typical fine-tuning data are gathered from random sources and depict collections of everyday objects. One image might contain cars parked on a street, while another includes a bouquet of flowers.

“There is no real coherence in these data, so the model never learns to recognize the same object in multiple images,” he says.

To fix this problem, the researchers developed a new dataset by curating samples from existing video-tracking data. These data are video clips showing the same object moving through a scene, like a tiger walking across a grassland.

They cut frames from these videos and structured the dataset so each input would consist of multiple images showing the same object in different contexts, with example questions and answers about its location.

“By using multiple images of the same object in different contexts, we encourage the model to consistently localize that object of interest by focusing on the context,” Mirza explains.
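The article describes the dataset only at a high level, so the following is a hypothetical sketch of the general idea rather than the authors' pipeline. It assumes a track is a list of frames, each annotated with the object's bounding box; the `TrackedFrame` record, the `(x_min, y_min, x_max, y_max)` box format and the question wording are all assumptions. One track becomes one few-shot episode: the first frames serve as worked question-and-answer examples, and the next frame is the query the model must answer.

```python
from dataclasses import dataclass


# Hypothetical record: one frame of a tracked video and the object's box in it.
@dataclass
class TrackedFrame:
    frame_id: int
    box: tuple[int, int, int, int]  # assumed (x_min, y_min, x_max, y_max) in pixels


def build_episode(track: list[TrackedFrame], name: str, n_context: int) -> dict:
    """Turn one object track into a few-shot localization episode.

    The first n_context frames become in-context examples (question plus
    answer box); the following frame becomes the query whose box the model
    is expected to predict.
    """
    if len(track) < n_context + 1:
        raise ValueError("track too short for the requested number of examples")
    question = f"Where is {name} in this image?"  # assumed prompt template
    context = [
        {"frame_id": f.frame_id, "question": question, "answer": f.box}
        for f in track[:n_context]
    ]
    query = track[n_context]
    return {
        "context": context,
        "query": {"frame_id": query.frame_id, "question": question},
        "target": query.box,  # ground truth, hidden from the model at inference
    }


# Usage with made-up boxes for three frames of one track.
track = [
    TrackedFrame(0, (10, 20, 50, 80)),
    TrackedFrame(30, (40, 22, 80, 85)),
    TrackedFrame(60, (90, 25, 130, 90)),
]
episode = build_episode(track, "Charlie", n_context=2)
```

Because every example in an episode shows the same object in a different frame, the only reliable way to answer the query is to match it against the examples, which is the behavior the researchers want to encourage.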

Forcing the focus

But the researchers found that VLMs tend to cheat. Instead of answering based on context clues, they will identify the object using knowledge gained during pretraining.

For instance, since the model already learned that an image of a tiger and the label “tiger” are correlated, it could identify the tiger crossing the grassland based on this pretrained knowledge, instead of inferring from context.

To solve this problem, the researchers used pseudo-names rather than actual object category names in the dataset. In the tiger example, they renamed the tiger “Charlie.”

“It took us a while to figure out how to prevent the model from cheating. But we changed the game for the model. The model does not know that ‘Charlie’ can be a tiger, so it is forced to look at the context,” he says.
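As a minimal sketch of the pseudo-name idea, assume the category name appears as plain text in each question and answer. The `PSEUDO_NAMES` pool and the `anonymize` helper below are hypothetical; the paper's actual name list and substitution rules are not described in this article. The helper swaps every whole-word mention of the category, along with a leading article, for one randomly chosen name.

```python
import random
import re

# Hypothetical pool of neutral names that carry no visual-category meaning.
PSEUDO_NAMES = ["Charlie", "Milo", "Luna", "Pixel", "Nova"]


def anonymize(text: str, category: str, rng: random.Random) -> tuple[str, str]:
    """Replace each whole-word mention of `category` (case-insensitive, with an
    optional leading 'the', 'a' or 'an') with a single random pseudo-name."""
    alias = rng.choice(PSEUDO_NAMES)
    pattern = re.compile(
        rf"\b(?:(?:the|a|an)\s+)?{re.escape(category)}\b", flags=re.IGNORECASE
    )
    return pattern.sub(alias, text), alias


# Usage: the same alias is used for every mention within one sample.
rng = random.Random(0)
anonymized, alias = anonymize(
    "Where is the tiger? The tiger is near the river.", "tiger", rng
)
```

Picking one alias per sample keeps the name consistent across the in-context examples and the query, so the model can still tell which object is meant, but the name itself gives no hint that the object is a tiger.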

The researchers also faced challenges in finding the best way to prepare the data. If the sampled frames are too close together in time, the background does not change enough to give the training data much diversity.
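The article does not say how far apart the researchers spaced their frames. One generic way to guarantee some background change is to sample frame indices with an enforced minimum gap; in this sketch, `sample_spaced_frames` and its `min_gap` parameter are hypothetical, not values or code from the paper.

```python
import random


def sample_spaced_frames(
    num_frames: int, k: int, min_gap: int, rng: random.Random
) -> list[int]:
    """Pick k sorted frame indices from [0, num_frames) so that any two
    chosen frames are at least min_gap frames apart.

    Trick: sample k distinct indices from a compressed range, then stretch
    them by adding i * (min_gap - 1) to the i-th sorted index. Every valid
    set of frames is equally likely.
    """
    span = num_frames - (k - 1) * (min_gap - 1)
    if k < 1 or min_gap < 1 or span < k:
        raise ValueError("video too short for k frames at this spacing")
    base = sorted(rng.sample(range(span), k))
    return [b + i * (min_gap - 1) for i, b in enumerate(base)]


# Usage: 4 frames from a 300-frame clip, at least 30 frames apart.
frames = sample_spaced_frames(300, k=4, min_gap=30, rng=random.Random(1))
```

A larger `min_gap` buys more visual diversity per episode but rules out short clips, which mirrors the trade-off the researchers describe.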

In the end, fine-tuning VLMs with this new dataset improved accuracy at personalized localization by about 12% on average. When the researchers used the version of the dataset with pseudo-names, the performance gains reached 21%.

As model size increases, their technique leads to greater performance gains.

In the future, the researchers want to study possible reasons VLMs don’t inherit in-context learning capabilities from their base LLMs. In addition, they plan to explore additional mechanisms to improve the performance of a VLM without the need to retrain it with new data.

“This work reframes few-shot personalized object localization—adapting on the fly to the same object across new scenes—as an instruction-tuning problem and uses video-tracking sequences to teach VLMs to localize based on visual context rather than class priors. It also introduces the first benchmark for this setting with solid gains across open and proprietary VLMs.

“Given the immense significance of quick, instance-specific grounding—often without finetuning—for users of real-world workflows (such as robotics, augmented reality assistants, creative tools, etc.), the practical, data-centric recipe offered by this work can help enhance the widespread adoption of vision-language foundation models,” says Saurav Jha, a postdoc at the Mila-Quebec Artificial Intelligence Institute, who was not involved with this work.

More information:

Sivan Doveh et al, Teaching VLMs to Localize Specific Objects from In-context Examples, arXiv (2025). DOI: 10.48550/arxiv.2411.13317

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.

Citation:

Method teaches generative AI models to locate personalized objects (2025, October 16) retrieved 16 October 2025 from https://techxplore.com/news/2025-10-method-generative-ai-personalized.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.


VTL Group boosts output by 10% with Coats Digital’s GSDCost solution

With over 5,000 employees and 3,000 sewing machines across 90 sewing lines, VTL Group specialises in jersey knits and denim, producing up to 20 million garments per year for world-renowned brands such as Lacoste, Adidas, G-Star, Hugo Boss, Replay and Paul & Shark. The company operates six garment production units, along with dedicated facilities for screen printing, knitting, dyeing and textile finishing. This extensive vertical integration gives VTL complete control over quality, lead times and cost-efficiency, which is vital for meeting the stringent demands of its global customer base.

VTL Group has adopted Coats Digital’s GSDCost to standardise production, boost productivity, and improve pricing accuracy across its Tunisian operations.

The solution cut standard minute values (SMVs) by 15–20 per cent, raised line output by 10 per cent, and enhanced planning, cost accuracy, and customer confidence, enabling competitive pricing, lean operations, and stronger relationships with global fashion brands.

Prior to implementing GSDCost, VTL calculated capacity and product pricing using data from internal time catalogues stored in Excel. This approach led to inconsistent and inaccurate cost estimations, causing both lost contracts due to inflated production times and reduced margins from underestimations. In some cases, delays caused by misaligned time predictions resulted in increased transportation costs and operational inefficiencies that impacted customer satisfaction.

Hichem Kordoghli, Plant Manager, VTL Group, said: “Before GSDCost, we struggled with inconsistent operating times that directly impacted our competitiveness. We lost orders when our timings were too high and missed profits when they were too low. GSDCost has transformed the way we approach planning, enabling us to quote confidently with accurate, reliable data. We’ve already seen up to 20% reductions in SMVs, a 10% rise in output, and improved customer confidence. It’s a game-changer for our sales and production teams.”

Since adopting GSDCost across 50 sewing lines, VTL Group has been able to establish a reliable baseline for production planning and line efficiency monitoring. This has led to a more streamlined approach to managing load plans and forecasting. Importantly, GSDCost has given the business the flexibility to align pricing more effectively with actual production realities, contributing to greater customer satisfaction and improved profit margins.

Although it’s too early to determine the exact financial impact, VTL Group has already realised improvements in pricing flexibility and competitiveness thanks to shorter product times and better planning. These gains are seen as instrumental in enabling the company to pursue more strategic orders, reduce wasted effort and overtime, and maintain the high expectations of leading global fashion brands.

Hichem Kordoghli, Plant Manager, VTL Group, added: “GSDCost has empowered our teams with reliable data that has translated directly into real operational benefits. We are seeing more consistent line performance, enhanced planning precision, and greater confidence across departments. These improvements are helping us build stronger relationships with our brand partners, while setting the foundation for sustainable productivity gains in the future.”

The company now plans to expand usage across an additional 30 lines in 2025, supported by a second phase of GSD Practitioner Bootcamp training to strengthen in-house expertise and embed best practices throughout the production environment. A further 10 lines are expected to follow in 2026 as part of VTL’s phased rollout strategy.

Liz Bamford, Customer Success Manager, Coats Digital, commented: “We are proud to support VTL Group in their digital transformation journey. The impressive improvements in planning accuracy, quoting precision, and cross-functional alignment are a testament to their commitment to innovation and excellence. GSDCost is helping VTL set a new benchmark for operational transparency and performance in the region, empowering their teams with the tools needed for long-term success.”

GSDCost, Coats Digital’s method analysis and pre-determined times solution, is widely acknowledged as the de facto international standard across the sewn products industry. It supports a more collaborative, transparent, and sustainable supply chain in which brands and manufacturers establish and optimise ‘International Standard Time Benchmarks’ using standard motion codes and predetermined times. This shared framework supports accurate cost prediction, fact-based negotiation, and a more efficient garment manufacturing process, while concurrently delivering on CSR commitments.

Key Benefits and ROI for VTL Group

- 15–20% reduction in SMVs across 50 production lines

- 10% productivity increase across key sewing facilities

- More competitive pricing for strategic sales opportunities

- Improved cost accuracy and quotation flexibility

- Standardised time benchmarks for future factory expansion

- Enhanced planning accuracy and load plan management

- Greater alignment with lean and sustainable manufacturing goals

- Increased brand confidence and satisfaction among premium customers

Note: The headline, insights, and image of this press release may have been refined by the Fibre2Fashion staff; the rest of the content remains unchanged.

Fibre2Fashion News Desk (HU)


Adidas Promo Codes: Up to 40% Off in January 2026

No matter how my style may change, I always consider Adidas the ultimate brand for effortlessly cool people. With celebrity endorsements ranging from pro athletes like David Beckham to music icons like Pharrell and Bad Bunny, Adidas has cemented itself firmly in the current zeitgeist. Although best known for classic sneaker styles like Sambas (beloved by skaters and boys I had crushes on in high school), Adidas also has always-stylish apparel, slides, running shoes, and more. WIRED has Adidas promo codes so you too can be cool, but on a budget.

Unlock 15% Adidas Promo Codes With Sign Up

Become a member of the cool kids club with the Adidas membership program, adiClub. adiClub gives you free shipping, discount vouchers, and members-only exclusives. When you join, you’ll get instant benefits and points on purchases, and you can earn rewards, exclusive experiences, products, vouchers, and more. Right now, when you sign up to be an adiClub member, you’ll get a 15% Adidas promo code to save on a fresh pair of sneaks or an athleisure fit.

There is more than one way to save. You can get 15% off by signing up for adiClub, either with your email, or by downloading the adidas or CONFIRMED app on your phone. After, you’ll find the 15% off welcome offer in your account under “Vouchers and Gift Cards.” Then, you’ll just need to paste it in the promo code step at checkout to save. You’ll instantly get 100 adiClub points, plus an additional 100 when you create a profile. Plus, when you sign up for the brand email newsletter, a unique promo code will be sent to your inbox to use for more savings.

Explore Adidas Coupons and 2026 Sale Deals For 60% Off Trending Shoes

As mentioned, I think the Samba OG shoes are the most classic style you can get. I mean, OG is in the name. The style gives off an effortlessly cool vibe that’s stood the test of time. The classic Samba is now 20% off. Always-popular Campus 00s blend the skater aesthetic with contemporary tastes, making them another modern classic, starting at $66, now 40% off. The Gazelle Bold shoe comes in a bunch of fun colorways, making it a versatile choice for any stylish shoe-wearer, and it’s now on sale. Plus, you can get 40% off Handball Spezial shoes (starting at $66) and 30% off the Superstar II shoes (starting at $70).

Some of the best ways to save big are just through navigating the Adidas website—make sure you’re browsing styles under $80, and check the final clearance sale styles for up to 60% off. Plus, there are always discounts on certain colorways or materials of the same type of shoe.

Students, Military Members, and Healthcare Workers Can Unlock 30% Off at Adidas

Adidas doesn’t want your kid going back to school after holiday break with the same ol’ same ol’, and that’s why the Adidas student discount gets your kid (or you, lifelong learner) 30% off full-price items online with UNiDAYS and 15% off in-store. Join now and verify your status with UNiDAYS. All you’ll need to do is enter the code provided by UNiDAYS during checkout, and you can get discounts of up to $1,000.

Heroic and stylish? That’s hot. The Adidas heroes discount gives thanks by giving verified medical professionals, first responders, nurses, military members, and teachers 30% off online and in-store (and 15% off at factory outlets). To redeem the heroes discount, you must complete verification through ID.me, then you’ll apply your unique discount code that will be sent to your inbox. A fireman in a pair of Sambas? Come rescue me, it’s burning up in here.

Be sure to check back regularly, as we keep hunting for more Adidas promo codes and other discounts, especially as the holiday (and shopping) season approaches oh-too-quickly.

Adidas Free Shipping Deals for adiClub and Prime Members

I’ve talked to you about all of the perks adiClub members get, but they also get free standard shipping on every order, which usually ships in 3-5 business days. With the membership, you’ll also get free returns or exchanges on any order!

Plus, if you’re already a Prime member, you’ll get free 2-3 day shipping without needing to join adiClub. You can also conveniently track your order in your Amazon Prime account; it will even show your delivery date once you select your size.

Pay Less Now With These Adidas Financing Options

Adidas makes it easy for anyone to get the gift of great style. Adidas offers Klarna, a financing service that lets you pay later (in 30 days) or in 4 interest-free installments. Plus, with Klarna, you can try your order before you buy it.

There’s also Afterpay, which lets you buy shoes now and pay for them in four interest-free payments made every 2 weeks! Afterpay is available on any order above $50. If you’d rather pay with PayPal, you can pay in 4 installments (eligible on purchases from $30 to $1,500). That means paying over 6 weeks: only 25% of your order today, with the rest split into 3 additional payments.


Meta’s Layoffs Leave Supernatural Fitness Users in Mourning

Tencia Benavidez, a Supernatural user who lives in New Mexico, started her VR workouts during the Covid pandemic. She has been a regular user in the five years since, calling the ability to work out in VR ideal, given that she lives in a rural area where it’s hard to get to a gym or work out outside during a brutal winter. She stuck with Supernatural because of the community and the enthusiasm of Supernatural’s coaches.

“They seem like really authentic individuals that were not talking down to you,” Benavidez says. “There’s just something really special about those coaches.”

Meta bought Supernatural in 2022, folding it into the metaverse efforts it was then investing in heavily. The purchase was not a smooth process, as it triggered a lengthy legal battle in which the US Federal Trade Commission tried to block Meta from purchasing the service due to antitrust concerns about Meta “trying to buy its way to the top” of the VR market. Meta ultimately prevailed. At the time, some Supernatural users were cautiously optimistic, hoping that a big bag of Zuckerbucks could keep the workout juggernaut afloat.

“Meta fought the government to buy this thing,” Benavidez says. “All that just for them to shut it down? What was the point?”

I reached out to Meta and Supernatural, and neither responded to my requests for comment.

Waking Up to Ash and Dust

On Tuesday, Bloomberg reported that Meta has laid off more than 1,000 people across its VR and metaverse efforts. The move comes after years of the company hemorrhaging billions of dollars on its metaverse products. In addition to laying off most of the staff at Supernatural, Meta has shut down three internal VR studios that made games like Resident Evil 4 and Deadpool VR.

“If it was a bottom line thing, I think they could have charged more money,” Goff Johnson says about Supernatural. “I think people would have paid for it. This just seems unnecessarily heartless.”

There is a split in the community about who will stay and continue to pay the subscription fee, and who will leave. Supernatural still has more than 3,000 lessons available in the service, so while new content won’t be added, some feel there is plenty left in the library. Other users worry about whether Supernatural will be able to keep licensing music from big-name bands.

“Supernatural is amazing, but I am canceling it because of this,” Chip told me. “The library is large, so there’s enough to keep you busy, but not for the same price.”

There are other VR workout experiences like FitXR or even the VR staple Beat Saber, which Supernatural cribs a lot of design concepts from. Still, they don’t hit the same bar for many of the Supernatural faithful.

“I’m going to stick it out until they turn the lights out on us,” says Stefanie Wong, a Bay Area accountant who has used Supernatural since shortly after the pandemic and has organized and attended meetup events. “It’s not the app. It’s the community and it’s the coaches that we really, really care about.”

Welcome to the New Age

I tried out Supernatural’s Together feature on Wednesday, the day after the layoffs. That’s where I met Chip and Alisa. When we could stop to catch our breath, we talked about the changes coming to the service. They had played through previous sessions hosted by Jane Fonda or playlists with a mix of music that changed regularly. It seems the final collaboration in Supernatural’s multiplayer mode will be the one we were playing, an artist series made up entirely of Imagine Dragons songs.

In the session, as we punched blocks while being serenaded by this shirtless dude crooning, recorded narrations from Supernatural coach Dwana Olsen chimed in to hype us up.

“Take advantage of these moments,” Olsen said as we punched away. “Use these movements to remind you of how much awesome life you have yet to live.”

Frankly, it was downright invigorating. And bittersweet. We ended another round, sweaty, huffing and puffing. Chip, Alisa, and I high-fived like crazy and readied for another round.

“Beautiful,” Alisa said. “It’s just beautiful, isn’t it?”
