Tech

AI method reconstructs 3D scene details from simulated images using inverse rendering

Over the past decades, computer scientists have developed many computational tools that can analyze and interpret images. These tools have proved useful for a broad range of applications, including robotics, autonomous driving, health care, manufacturing and even entertainment.

Most of the best performing computer vision approaches employed to date rely on so-called feed-forward neural networks. These are computational models that process input images step by step, ultimately making predictions about them.

While some of these models were found to perform well when tested on the data they analyzed during training, they often do not generalize well across new images and in different scenarios. In addition, their predictions and the patterns they extract from images can be difficult to interpret.

Researchers at Princeton University recently developed a new inverse rendering approach that is more transparent and could also interpret a wide range of images more reliably. The new approach, introduced in a paper published in Nature Machine Intelligence, relies on a generative artificial intelligence (AI)-based method to simulate the process of image creation, while also optimizing it by gradually adjusting a model’s internal parameters.

“Generative AI and neural rendering have transformed the field in recent years for creating novel content: producing images or videos from scene descriptions,” Felix Heide, senior author of the paper, told Tech Xplore. “We investigate whether we can flip this around and use these generative models for extracting the scene descriptions from images.”

The new approach developed by Heide and his colleagues relies on a so-called differentiable rendering pipeline, which simulates the process of image creation using compressed representations of images created by generative AI models. Because the pipeline is differentiable, errors in a simulated image can be traced back to the inputs that produced it.

“We developed an analysis-by-synthesis approach that allows us to solve vision tasks, such as tracking, as test-time optimization problems,” explained Heide. “We found that this method generalizes across datasets, and in contrast to existing supervised learning methods, does not need to be trained on new datasets.”

Essentially, the method developed by the researchers works by placing models of 3D objects in a virtual scene depicting real-world settings. These object models are produced by a generative AI model from a random sample of 3D scene parameters.

“We then render all these objects back together into a 2D image,” said Heide. “Next, we compare this rendered image with the real observed image. Based on how different they are, we backpropagate the difference through both the differentiable rendering function and the 3D generation model to update its inputs. In just a few steps, we optimize these inputs to make the rendered match the observed images better.”
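The render-and-compare loop Heide describes can be illustrated with a deliberately tiny sketch. This is not the researchers' pipeline: as assumptions for illustration, a hand-written 1D Gaussian blob stands in for both the 3D generation model and the renderer, the scene has only two parameters (`center` and `brightness`), and the gradients are written out by hand rather than obtained through automatic differentiation. All function names are hypothetical.

```python
import math

def render(center, brightness, n_pixels=32, sigma=4.0):
    """Toy differentiable 'renderer': a 1D Gaussian blob on a row of pixels."""
    return [brightness * math.exp(-((i - center) ** 2) / (2 * sigma ** 2))
            for i in range(n_pixels)]

def loss_and_grads(center, brightness, observed, sigma=4.0):
    """Mean squared error between the render and the observation, plus its
    gradients with respect to the two scene parameters."""
    n = len(observed)
    loss = g_center = g_brightness = 0.0
    for i, target in enumerate(observed):
        blob = math.exp(-((i - center) ** 2) / (2 * sigma ** 2))
        residual = brightness * blob - target
        loss += residual ** 2 / n
        # Chain rule through the renderer (hand-written backpropagation).
        g_brightness += 2 * residual * blob / n
        g_center += 2 * residual * brightness * blob * (i - center) / sigma ** 2 / n
    return loss, g_center, g_brightness

def fit(observed, center=12.0, brightness=0.5, steps=600, lr=2.0):
    """Test-time optimization: start from a rough guess and adjust the scene
    parameters until the render matches the observed image."""
    history = []
    for _ in range(steps):
        loss, g_c, g_b = loss_and_grads(center, brightness, observed)
        history.append(loss)
        center -= lr * g_c          # gradient descent on object position
        brightness -= lr * g_b      # gradient descent on object appearance
    return center, brightness, history

# The "observed image" is itself rendered here, standing in for a real photo.
observed = render(center=18.0, brightness=1.0)
center, brightness, history = fit(observed)
print(f"center={center:.2f} brightness={brightness:.2f}")
```

No training data appears anywhere in the sketch: the only learning happens at test time, on the single image being explained, which is the property the researchers credit for the method's generalization.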


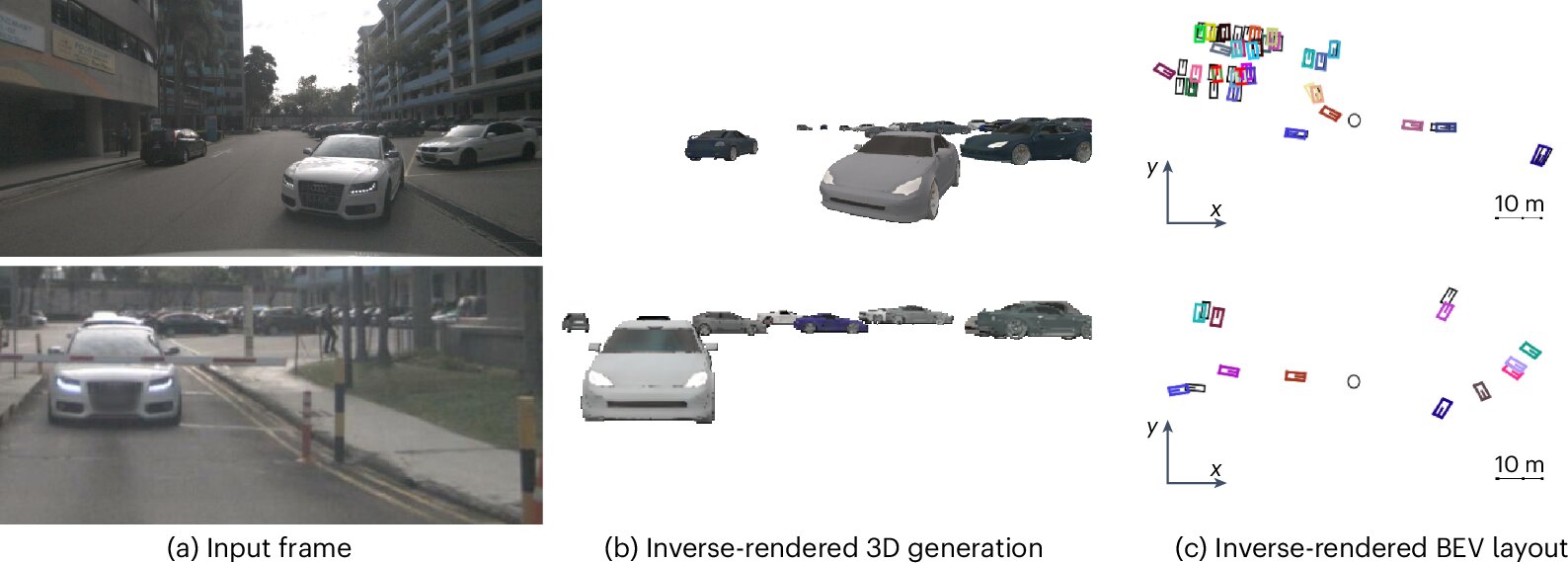

Optimizing 3D models through inverse neural rendering. From left to right: the observed image, initial random 3D generations, and three optimization steps that refine these to better match the observed image. The observed images are faded to show the rendered objects clearly. The method effectively refines object appearance and position, all done at test time with inverse neural rendering. Credit: Ost et al.


Generalization of 3D multi-object tracking with Inverse Neural Rendering. The method directly generalizes across datasets such as the nuScenes and Waymo Open Dataset benchmarks without additional fine-tuning and is trained on synthetic 3D models only. The observed images are overlaid with the closest generated object and tracked 3D bounding boxes. Credit: Ost et al.

A notable advantage of the team’s newly proposed approach is that it allows very generic 3D object generation models trained on synthetic data to perform well across a wide range of datasets containing images captured in real-world settings. In addition, the renderings produced by the models are far more explainable than those produced by conventional rendering tools based on feed-forward machine learning models.

“Our inverse rendering approach for tracking works just as well as learned feed-forward approaches, but it provides us with explicit 3D explanations of its perceived world,” said Heide.

“The other interesting aspect is the generalization capabilities. Without changing the 3D generation model or training it on new data, our 3D multi-object tracking through Inverse Neural Rendering works well across different autonomous driving datasets and object types. This can significantly reduce the cost of fine-tuning on new data or at least work as an auto-labeling pipeline.”

This recent study could soon help to advance AI models for computer vision, improving their performance in real-world settings while also increasing their transparency. The researchers now plan to continue improving their method and start testing it on more computer vision-related tasks.

“A logical next step is the expansion of the proposed approach to other perception tasks, such as 3D detection and 3D segmentation,” added Heide. “Ultimately, we want to explore if inverse rendering can even be used to infer the whole 3D scene, and not just individual objects. This would allow our future robots to reason and continuously optimize a three-dimensional model of the world, which comes with built-in explainability.”

Written by Ingrid Fadelli, edited by Gaby Clark, and fact-checked and reviewed by Robert Egan.


More information:

Julian Ost et al, Towards generalizable and interpretable three-dimensional tracking with inverse neural rendering, Nature Machine Intelligence (2025). DOI: 10.1038/s42256-025-01083-x.

© 2025 Science X Network

Citation: AI method reconstructs 3D scene details from simulated images using inverse rendering (2025, August 23), retrieved 23 August 2025 from https://techxplore.com/news/2025-08-ai-method-reconstructs-3d-scene.html



Need One Pair for Hiking, Traveling, and Working Out? Try Gravel Running Shoes

HOKA’s max-stacked Rocket X Trail combines road-race-shoe energy with boosted grip from a 3-mm lugged outsole. If you’re looking for a fast shoe to go on the attack, this is it. It’s also fantastic for all-round comfort. In testing, I laced up the Rocket X Trail and ran 3 hours (just short of 19 miles) fresh out of the box, across roads, forest gravel trails, some grass, and through some serious water. It delivered efficiency and energy whether I was moving at marathon pace or with heavier, tired, ragged footfalls in the later miles.

The rockered, supercritical midsole uses HOKA’s liveliest foam, similar to that found in its race-ready road shoes, along with a carbon plate. The combination makes for a really fun ride that’s smooth, springy, fast, and consistent. It’s also highly cushioned, so you sacrifice a lot of ground feel for that big-stack, springy softness, and it’s less stable over very lumpy terrain. But on open, flat, runnable mixed terrain, it’s excellent.

The lightweight uppers have a race-shoe-ready feel, and after running through ankle-deep flooded sections, they shed water really quickly. This is a pricey road-to-trail shoe, but it’s versatile and there’s plenty of winter road potential, too.

| Spec | Value |
|---|---|
| Weight | 9.45 oz |
| Heel-to-toe drop | 6 mm |
| Lug depth | 3 mm |


If a Garmin Is Too Expensive, Consider Suunto’s Latest Adventure Watch

It’s always pleasing to see an array of physical buttons, and you get sizable ones too. You’re not going to miss these wide flat ones even when picking the pace up. The silicone strap has a nice stretch to it and while the button clasp is a bit awkward to get into place, this watch does not budge.

Suunto has jumped on the flashlight trend, with an LED light strip sitting on the front of the case. You can adjust brightness levels, and there are SOS and alert modes that emit a very noticeable pulsating light pattern. I found this light useful when rooting around indoors as well as on nighttime outings.

The biggest change is the introduction of a 1.5-inch, 466 x 466 AMOLED display. This replaces the dull, albeit very visible, memory-in-pixel (MIP) display. Suunto also ditched the solar charging, which required spending a significant amount of time outside to reap its battery benefits.

Adding AMOLED screens to outdoor watches has been contentious because the older MIP displays are more power-efficient. The Vertical 2’s battery life is down by about 10 days from the older Vertical in what Suunto calls daily use.

Still, even if you’re putting its tracking and mapping features to use, you’re not going to be reaching for the charger every few days. After two hours of tracking in optimal GPS mode, the battery only dropped by 2 to 3 percent. The battery drop outside of tracking is also small and the standby performance is excellent as well.

Software Updates


A more streamlined set of smartwatch features helps reserve battery for when it really matters. Unfortunately, I probably got better battery life because you don’t get phone notifications or responses if it’s paired to an iPhone instead of an Android. There’s also no onboard music player, but you do get a pretty slick set of music playback controls that are accessible during tracking.


Electronic health records are still creating issues for patients | Computer Weekly

Every NHS trust in England needs an electronic patient record (EPR) system in place by March 2026, as part of a government push to digitise the healthcare system.

In many ways, this is long overdue: some trusts have still been using pen-and-paper record-keeping until very recently.

EPRs have the potential to massively improve efficiency in the NHS. If working properly, they allow doctors to keep all of their records in one place, speed up prescribing and diagnostics, and make it easier for patients to access their own health information.

But these roll-outs have not been without problems. Concerns have been raised about how far these benefits can actually be realised. Some NHS trusts have experienced issues with integrating new systems and training staff on how to use them.

In the extreme, there have been reports of EPRs creating new problems for hospitals, with evidence suggesting these systems may have contributed to serious harm and even deaths among patients.

NHS trusts have been put in charge of procuring their own EPRs, meaning there are numerous different technology companies involved. Some providers of these systems are large US firms. This includes Oracle Health, provided by the Larry Ellison-led tech giant, and Epic, a tech firm based in Wisconsin.

Contracts can run into nine figures: Guy’s and St Thomas’, a trust in South London, launched a £450m system from Epic in late 2023. Some parts of the NHS have been using them for more than a decade, but a handful are still set to miss the government’s March deadline.

Data access

Pritesh Mistry is a fellow at the King’s Fund, where he researches the impact of digital transformation in the NHS. He says it has had “both positive and negative impacts”.

“In the last few years, we’ve seen doubling down on the focus around digital records,” says Mistry. These are now in place in more than 90% of all trusts, and every GP practice.

“That means we’ve now got [new] data that’s within the healthcare system, which allows us to do other things, like treat populations, and understand and track patient safety,” he says.

Despite this, he cautions some patients are still struggling to get hold of their own data.

“We’ve got a lot of data that’s in silos,” says Mistry. “It doesn’t flow. That’s the biggest challenge: making the data accessible and usable for patients and healthcare professionals to be able to provide care in a way that is joined up and meets with modern expectations.”

He says complaints with new technology haven’t just come from patients.

“We need to recognise that staff are really frustrated,” says Mistry. “Software often crashes. Computers are really slow, and technology adds to their workload, instead of simplifying things.” He caveats that some parts of the NHS are better than others on this.

Safeguarding patient data

Mistry adds that there are safeguards in place to ensure patient data isn’t ending up where it shouldn’t be – such as through data protection rules and procurement requirements.

However, he warns that “we need to make sure we move with the times in terms of what technology is available”. Mistry is more concerned about medical staff inadvertently putting personal information into a large language model, for instance.

“Digital exclusion remains a barrier as well,” he says, adding that these systems have the potential to widen inequalities in healthcare. Those less able to use new technology might struggle to access their records.

“People tend to assume it’s old people [who are most impacted], but that isn’t necessarily true,” says Mistry, instead highlighting the impact of poverty and deprivation, with some still unable to afford internet access.

He argues the NHS should be working to meet people where they are, and provide more “tailored” technology services.

Patient safety

Nick Woodier is a doctor and investigator at the Health Services Safety Investigations Body (HSSIB), which looks into issues with healthcare in the UK. He sees problems arising from how EPRs are deployed by trusts, especially when medical staff overestimate their capabilities.

He uses the example of prescribing medicines: “There’s an assumption that these electronic prescribing systems will stop you [from] doing something catastrophic.”

But this isn’t always the case. In one investigation, the HSSIB found a child had been prescribed nearly 10 times the recommended dose of an anti-coagulant medication, with doctors having assumed the EPR would flag an issue. The child ended up with a bleed on their brain.

Woodier also worries hospitals are not always picking up on when these systems are at fault.

“We will often see where incidents have happened and the contribution of the electronic system has not been recognised,” he says.

Woodier sees this as coming from a culture which prefers to put the blame for safety failures on individuals.

A 2024 investigation by the BBC found more than 126 instances of serious harm related to EPR problems, including three deaths, registered across 31 NHS trusts.

The HSSIB has also encountered problems from patients being unable to access their digital records.

“We’ve seen in general practice, for example, some patients telling us that they’ve gone without care – because in their mind, they thought the only way they could access their GP was to fill in an electronic form,” says Woodier.

A spokesperson for NHS England says EPRs are “already having a significant impact on improving safety and care for patients”, for instance, by helping to identify conditions such as sepsis, and preventing medication errors.

“They have replaced outdated and often less-safe paper-based systems, and we are working closely with NHS trusts to ensure they are implemented safely alongside other systems with appropriate training – and are used to the highest quality and safety standards,” the spokesperson adds.

Interoperability

The EPR roll-out has also been criticised for problems with “interoperability” – the ability of different programs and modes of data collection to converse with each other. The patchwork of different systems used by different trusts means data stored in one system might not be useful for a system used by a different part of the NHS.

Woodier says this often happens in communications between hospitals and GP surgeries. This can involve someone manually inputting information from one system to another, which can create risks when data is not being transferred properly, or is missed completely.

“When you introduce a manual operation, that risk increases,” he warns. “The odds are that at some point, somebody won’t do the right thing, because that’s the reality of being human.”

Alex Lawrence, a fellow at the Health Foundation, describes interoperability as a “significant challenge”, which the NHS and technology companies have been “grappling with for a really long time”.

“Some trusts have found it much harder to access their own EPR data than they anticipated, because of where that data is stored,” she adds, referring to research the organisation carried out in 2024.

“If it’s taking you days to pull the data that you need, then it’s already not going to be useful for a lot of the purposes that you might want it for.”

However, Lawrence adds that there have been some steps made in the right direction, notably with the Data (Use and Access) Act, which was passed last year.

“The government is making information standards mandatory for EPR providers, as well as trusts, with the Secretary of State potentially having more powers to enforce those standards,” she says.

The longer term

Going forward, Lawrence would like to see a system involving “patients being empowered with access to their own data, and as far as appropriate, clinicians being able to see all of the history that they need for their patients”.

In an ideal system, different parts of the healthcare system would be able to “share a patient’s data where necessary and appropriate, in an easy and timely way”.

She says EPRs have the “potential to offer enormous value”, but much of their functionality is going unused. “What our qualitative research suggested was that a lot of these systems are still functioning as digital notebooks,” says Lawrence.

Matthew Taylor is the head of the NHS Confederation and NHS Providers, membership bodies for healthcare organisations.

“NHS leaders say the gap between trusts on digital maturity is still stark – and it’s shaping how quickly organisations can move to modern EPRs,” he says.

This gap – combined with the organisational complexity of the healthcare system – means interoperability has “long been a thorn in the NHS’s side”.

Taylor adds that EPRs are not a “once-and-done” job, and argues they will result in savings in the long term, but that it may take around five years to see the benefits.

“Hospitals are housing a huge amount of paper records, and the cost of storing, retrieving and managing those records can run into millions of pounds each year,” he says.

These systems are also one facet of a larger conversation around the use of artificial intelligence in the NHS. AI models for areas such as research and diagnostics will require extensive and standardised medical data.

Mistry warns these AI tools operate on the basis of “garbage in, garbage out”.

“There is a risk that we roll out AI tools without the underpinning data quality it needs,” he says, adding that this could exacerbate inequalities or biases from using AI.

As Woodier puts it: “We’ve got organisations who are still using archaic computers, have got infrastructure that’s not working, are still on old web systems, or have EPRs that don’t talk to each other. A few [trusts] don’t have EPRs.

“So, actually, are we trying to run before we’ve even managed to walk?”
