Tech

How to reduce greenhouse gas emissions from ammonia production

Ammonia is one of the most widely produced chemicals in the world, used mostly as fertilizer, but also for the production of some plastics, textiles, and other applications. Its production, through processes that require high heat and pressure, accounts for up to 20% of all the greenhouse gases from the entire chemical industry, so efforts have been underway worldwide to find ways to reduce those emissions.

Now, researchers at MIT have come up with a clever way of combining two different methods of producing the compound so as to minimize waste products. When combined with some other simple upgrades, the approach could reduce the greenhouse emissions from production by as much as 63%, compared to the leading “low-emissions” approach being used today.

The new approach is described in the journal Energy & Fuels, in a paper by MIT Energy Initiative (MITEI) Director William H. Green, graduate student Sayandeep Biswas, MITEI Director of Research Randall Field, and two colleagues.

“Ammonia has the most carbon dioxide emissions of any kind of chemical,” says Green, who is the Hoyt C. Hottel Professor in Chemical Engineering.

“It’s a very important chemical,” he says, because its use as a fertilizer is crucial to being able to feed the world’s population.

Until late in the 19th century, the most widely used source of nitrogen fertilizer was mined deposits of bat or bird guano, mostly from Chile, but that source was beginning to run out, and there were predictions that the world would soon be running short of food to sustain the population. Then a new chemical process, called the Haber-Bosch process after its inventors, made it possible to make ammonia out of nitrogen from the air and hydrogen, which was mostly derived from methane. However, both the burning of fossil fuels to provide the needed heat and the use of methane to make the hydrogen led to massive climate-warming emissions from the process.

To address this, two newer variations of ammonia production have been developed: so-called blue ammonia, where the greenhouse gases are captured right at the factory and then sequestered deep underground, and green ammonia, produced by a different chemical pathway, using electricity instead of fossil fuels to electrolyze water to make hydrogen.

Blue ammonia is already beginning to be used, with a few plants operating now in Louisiana, Green says, and the ammonia mostly being shipped to Japan, “so that’s already kind of commercial.” Other parts of the world are starting to use green ammonia, especially in places that have lots of hydropower, solar, or wind to provide inexpensive electricity, including a giant plant now under construction in Saudi Arabia.

But in most places, both blue and green ammonia are still more expensive than the traditional fossil-fuel-based version, so many teams around the world have been working on ways to cut these costs as much as possible so that the difference is small enough to be made up through tax subsidies or other incentives.

The problem is growing, because as the population grows, and as wealth increases, there will be ever-increasing demand for nitrogen fertilizer. At the same time, ammonia is a promising substitute fuel to power hard-to-decarbonize transportation such as cargo ships and heavy trucks, which could lead to even greater needs for the chemical.

“It definitely works” as a transportation fuel, by powering fuel cells that have been demonstrated for use by everything from drones to barges and tugboats and trucks, Green says.

“People think that the most likely market of that type would be for shipping,” he says, “because the downside of ammonia is it’s toxic and it’s smelly, and that makes it slightly dangerous to handle and to ship around.”

So its best uses may be where it’s used in high volume and in relatively remote locations, like the high seas. In fact, the International Maritime Organization will soon be voting on new rules that might give a strong boost to the ammonia alternative for shipping.

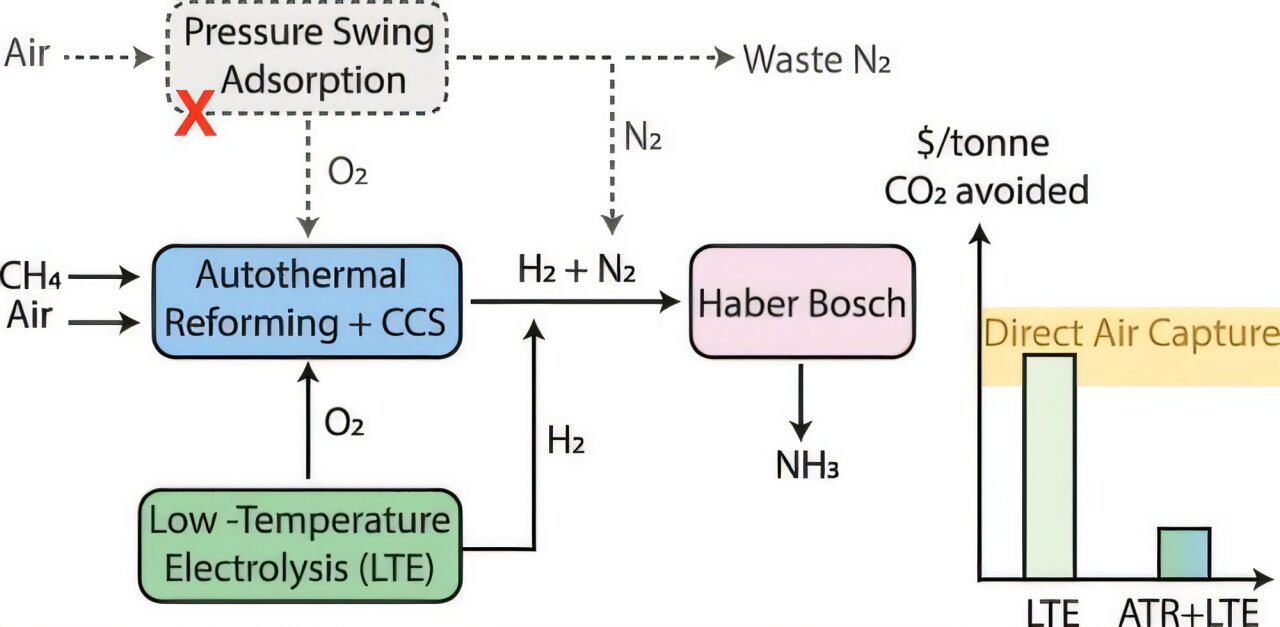

The key to the new proposed system is to combine the two existing approaches in one facility, with a blue ammonia factory next to a green ammonia factory. The process of generating hydrogen for the green ammonia plant leaves a lot of leftover oxygen that just gets vented to the air. Blue ammonia, on the other hand, uses a process called autothermal reforming that requires a source of pure oxygen, so if there’s a green ammonia plant next door, it can use that excess oxygen.
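As a rough illustration of how much oxygen is at stake, the sketch below works through the idealized stoichiometry of the green route: water electrolysis (2 H2O -> 2 H2 + O2) followed by Haber-Bosch synthesis (N2 + 3 H2 -> 2 NH3). This is textbook chemistry, not a calculation taken from the paper. It assumes complete conversion with no losses, and it says nothing about how much oxygen the autothermal reformer in the blue plant actually needs. The function name is hypothetical.

```python
# Idealized stoichiometry only; not the authors' process model.
# Molar masses in g/mol (standard values, rounded).
M_NH3 = 17.031
M_O2 = 31.998


def oxygen_byproduct_per_kg_ammonia() -> float:
    """kg of O2 co-produced per kg of NH3 when all hydrogen comes from electrolysis."""
    h2_per_nh3 = 3 / 2  # N2 + 3 H2 -> 2 NH3
    o2_per_h2 = 1 / 2   # 2 H2O -> 2 H2 + O2
    mol_o2_per_mol_nh3 = h2_per_nh3 * o2_per_h2  # 0.75 mol O2 per mol NH3
    return mol_o2_per_mol_nh3 * M_O2 / M_NH3


if __name__ == "__main__":
    print(f"{oxygen_byproduct_per_kg_ammonia():.2f} kg O2 per kg NH3")
```

Under these assumptions, a green plant releases roughly 1.4 kg of oxygen for every kilogram of ammonia it makes, which is the stream a neighboring blue plant could draw on instead of venting it.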

“Putting them next to each other turns out to have significant economic value,” Green says.

This synergy could help hybrid “blue-green ammonia” facilities serve as an important bridge toward a future where eventually green ammonia, the cleanest version, could finally dominate. But that future is likely decades away, Green says, so having the combined plants could be an important step along the way.

“It might be a really long time before [green ammonia] is actually attractive” economically, he says. “Right now, it’s nowhere close, except in very special situations.”

But the combined plants “could be a really appealing concept, and maybe a good way to start the industry,” because so far only small, standalone demonstration plants of the green process are being built.

“If green or blue ammonia is going to become the new way of making ammonia, you need to find ways to make it relatively affordable in a lot of countries, with whatever resources they’ve got.” This new proposed combination, he says, “looks like a really good idea that can help push things along. Ultimately, there’s got to be a lot of green ammonia plants in a lot of places,” and starting out with the combined plants, which could be more affordable now, could help to make that happen. The team has filed for a patent on the process.

Although the team did a detailed study of both the technology and the economics that showed the system has great promise, Green points out, “No one has ever built one. We did the analysis, it looks good, but surely when people build the first one, they’ll find funny little things that need some attention,” such as details of how to start up or shut down the process.

“I would say there’s plenty of additional work to do to make it a real industry.”

But the results of this study, which show the costs to be much more affordable than existing blue or green plants in isolation, “definitely encourage the possibility of people making the big investments that would be needed to really make this industry feasible.”

This proposed integration of the two methods “improves efficiency, reduces greenhouse gas emissions, and lowers overall cost,” says Kevin van Geem, a professor in the Center for Sustainable Chemistry at Ghent University, who was not associated with this research.

“The analysis is rigorous, with validated process models, transparent assumptions, and comparisons to literature benchmarks. By combining techno-economic analysis with emissions accounting, the work provides a credible and balanced view of the trade-offs.”

He adds, “Given the scale of global ammonia production, such a reduction could have a highly impactful effect on decarbonizing one of the most emissions-intensive chemical industries.”

The research team also included MIT postdoc Angiras Menon and MITEI research lead Guiyan Zang.

More information:

Sayandeep Biswas et al, A Comprehensive Costing and Emissions Analysis of Blue, Green, and Combined Blue-Green Ammonia Production, Energy & Fuels (2025). DOI: 10.1021/acs.energyfuels.5c03111

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.

Citation: How to reduce greenhouse gas emissions from ammonia production (2025, October 8), retrieved 8 October 2025 from https://techxplore.com/news/2025-10-greenhouse-gas-emissions-ammonia-production.html

Don’t Buy Some Random USB Hub off Amazon. Here Are 5 We’ve Tested and Approved

Other Good USB Hubs to Consider

Ugreen Revodok Pro 211 Docking Station for $64: Most laptop docking stations are bulky gadgets that often require a power source, but this one from Ugreen straddles the line between dock and hub. It has a small, braided cable running to a relatively large aluminum block. It’s a bit hefty but still compact, and it packs a lot of extra power. It has three USB ports (one USB-C and two USB-A) that each reached data-transfer speeds of up to 900 MB/s in my testing. That was enough to move large amounts of 4K video footage in minutes. The only problem is that dual monitors on a Mac are limited to mirroring.

Hyper HyperDrive Next Dual 4K Video Dock for $150: This one also straddles the line between dock and USB hub. Many mobile docks lack proper Mac support, only allowing for mirroring instead of full extension. The HyperDrive Next Dual 4K fixes that problem, making it a great option for MacBooks (though it won’t magically give an old MacBook Air dual-monitor support). Unfortunately, you’ll be paying handsomely for that capability, as this one is more expensive than the other options. The other problem is that although this dock has two HDMI ports that can support 4K, only one will run at 60 Hz and the other will be stuck at 30 Hz. So, if you plan to use it with multiple displays, you’ll need to drop the resolution to 1440p or 1080p on one of them. I also tested this Targus model, made by the same company, which gets you two 4K displays at 60 Hz, but not on Mac.

Anker USB-C Hub 5-in-1 for $20: This Anker USB hub is the one I carry in my camera bag everywhere. It plugs into the USB-C port on your laptop and provides every connection you’d need to offload photos or videos from camera gear. In our testing, the USB 3.0 ports reached transfer speeds over 400 MB/s, which isn’t quite as fast as some USB hubs on this list, but it’s solid for a sub-$50 device. Similarly, the SD card reader reached speeds of 80 MB/s for reading and writing, which isn’t the fastest SD cards can get, but adequate for moving files back and forth.—Eric Ravenscraft

Kensington Triple Video Mobile Dock for $83: Another mobile dock meant to provide additional external support, this one from Kensington can technically power up to three 1080p displays at 60 Hz using the two HDMI ports and one DisplayPort. It’s a lot of ports in a relatively small package, though the basic plastic case isn’t exactly inspiring.

Trump’s War on Iran Could Screw Over US Farmers

Global oil and gas prices have skyrocketed following the US attack on Iran last weekend. But another key global supply chain is also at risk, one that may directly impact American farmers who have already been squeezed for months by tariff wars. The conflict in the Middle East is choking global supplies of fertilizer right before the crucial spring planting season.

“This literally could not be happening at a worse time,” says Josh Linville, the vice president of fertilizer at financial services company StoneX.

The global fertilizer market focuses on three main macronutrients: phosphates, nitrogen, and potash. All of them are produced in different ways, with different countries leading in exports. Farmers consider a variety of factors, including crop type and soil conditions, when deciding which of these types of fertilizer to apply to their fields.

Potash and phosphates are both mined from different kinds of natural deposits; nitrogen fertilizers, by contrast, are produced with natural gas. QatarLNG, a subsidiary of Qatar Energy, a state-run oil and gas company, said on Monday that it would halt production following drone strikes on some of its facilities. This effectively took nearly a fifth of the world’s natural gas supply offline, causing gas prices in Europe to spike.

That shutdown puts supplies of urea, a popular type of nitrogen fertilizer, particularly at risk. On Tuesday, Qatar Energy said that it would also stop production of downstream products, including urea. Qatar was the second-largest exporter of urea in 2024. (Iran was the third-largest; it’s also a key exporter of ammonia, another type of nitrogen fertilizer.) Prices on urea sold in the US out of New Orleans, a key commodity port, were up nearly 15 percent on Monday compared to prices last week, according to data provided by Linville to WIRED. The blockage of the Strait of Hormuz is also preventing other countries in the region from exporting nitrogen products.

“When we look at ammonia, we’re looking at almost 30 percent of global production being either involved or at risk in this conflict,” says Veronica Nigh, a senior economist at the Fertilizer Institute, a US-based industry advocacy organization. “It gets worse when we think about urea. Urea is almost 50 percent.”

Other types of fertilizer are also at risk. Saudi Arabia, Nigh says, supplies about 40 percent of all US phosphate imports; taking them out of the equation for more than a few days could create “a really challenging situation” for the US. Other countries in the region, including Jordan, Egypt, and Israel, also play a big role in these markets.

“We are already hearing reports that some of those Persian Gulf manufacturers are shutting down production, because they’re saying, ‘I have a finite amount of storage for my supply,’” Linville says. “‘Once I reach the top of it, I can’t do anything else. So I’m going to shut down my production in order to make sure I don’t go over above that.’”

Conflict in the strait has intensified in the early part of this week, as the Islamic Revolutionary Guard Corps has reportedly threatened any ship passing through the strait. Traffic has slowed to a crawl. The Trump administration announced initiatives on Tuesday meant to protect oil tankers traveling through the strait, including providing a naval escort. Even if those initiatives succeed, which the shipping industry has expressed doubt about, much of the initial energy will probably go toward shepherding oil and gas assets out of the region.

“Fertilizer is not going to be the most valuable thing that’s gonna transit the strait,” says Nigh.

Google’s Pixel 10a May Not Be Exciting, but It’s Still an Unbeatable Value

The screen is brighter now, reaching a peak brightness of 3,000 nits, and I haven’t had any trouble reading it in sunny conditions (though it hasn’t been as sunny as I’d like it to be these past few weeks). I appreciate the glass upgrade from Gorilla Glass 3 to Gorilla Glass 7i. It should be more protective, and anecdotally, I don’t see a single scratch on the Pixel 10a’s screen after two weeks of use. (I’d still snag a screen protector to be safe.)

Another notable upgrade is in charging speeds—30-watt wired charging and 10-watt wireless charging. I’ll admit I haven’t noticed the benefits of this yet, since I’m often recharging the phone overnight. You can get up to 50 percent in 30 minutes of charging with a compatible adapter, and that has lined up with my testing.

My biggest gripe? Google should have taken this opportunity to add its Pixelsnap wireless charging magnets to the back of this phone. That would help align the Pixel 10a even more with the Pixel 10 series and bring Qi2 wireless charging into a more affordable realm—actually raising the bar, which wouldn’t be a first for the A-series. After all, Apple did exactly that with the new iPhone 17e, adding MagSafe to the table. Or heck, at least make the Pixel 10a Qi2 Ready like Samsung’s smartphones, so people who use a magnetic case can take advantage of faster wireless charging speeds.

Battery life has been OK. With average use, the Pixel 10a comfortably lasts me a full day, but it still requires daily charging. With heavier use, like when I’m traveling, I’ve had to charge the phone in the afternoon a few times to make sure it didn’t die before I got into bed. This is a fairly big battery for its size, but I think there’s more Google could do to extend juice, akin to Motorola’s Moto G Power 2026.
