Tech

Robots can now learn to use tools—just by watching us

Despite decades of progress, most robots are still programmed for specific, repetitive tasks. They struggle with the unexpected and can’t adapt to new situations without painstaking reprogramming. But what if they could learn to use tools the way a child does, simply by watching someone else do it on video?

I still remember the first time I saw one of our lab’s robots flip an egg in a frying pan. It wasn’t pre-programmed. No one was controlling it with a joystick. The robot had simply watched a video of a human doing it, and then did it itself. For someone who has spent years thinking about how to make robots more adaptable, that moment was thrilling.

Our team at the University of Illinois Urbana-Champaign, together with collaborators at Columbia University and UT Austin, has been exploring that very question. Could robots watch someone hammer a nail or scoop a meatball, and then figure out how to do it themselves, without costly sensors, motion capture suits, or hours of remote teleoperation?

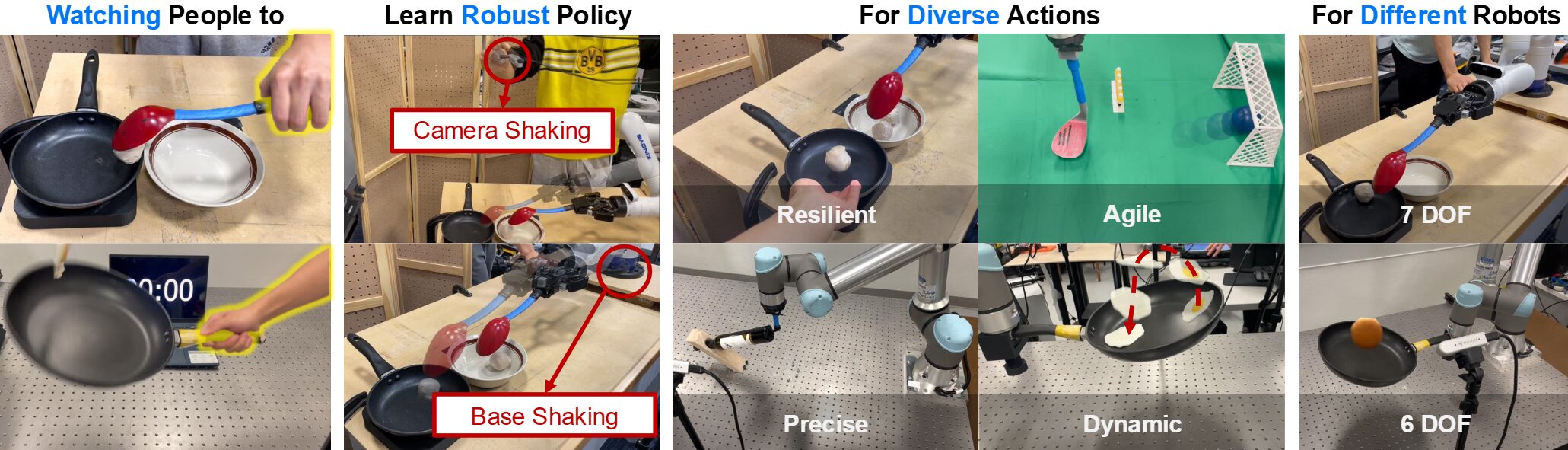

That idea led us to create a new framework we call “Tool-as-Interface,” currently available on the arXiv preprint server. The goal is straightforward: teach robots complex, dynamic tool-use skills using nothing more than ordinary videos of people doing everyday tasks. All it takes is two camera views of the action, something you could capture with a couple of smartphones.

Here’s how it works. The process begins with those two camera views, which a vision model called MASt3R uses to reconstruct a three-dimensional model of the scene. Then, using a rendering method known as 3D Gaussian splatting (think of it as digitally painting a 3D picture of the scene), we generate additional viewpoints so the robot can “see” the task from multiple angles.

But the real magic happens when we digitally remove the human from the scene. With the help of “Grounded-SAM,” our system isolates just the tool and its interaction with the environment. It is like telling the robot, “Ignore the human, and only pay attention to what the tool is doing.”
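As a rough illustration of the masking step (not the framework's actual code), the sketch below blanks out every pixel that a segmentation model has labeled as human. The function name `remove_human`, the toy 4x4 frame, and the choice to fill masked pixels with a constant are all assumptions made for this example; in practice the mask would come from a model such as Grounded-SAM, whose API is not shown here.

```python
import numpy as np

def remove_human(frame: np.ndarray, human_mask: np.ndarray, fill_value: int = 0) -> np.ndarray:
    """Return a copy of `frame` with every human-labeled pixel set to `fill_value`.

    `frame` is an H x W x 3 image; `human_mask` is an H x W boolean array
    (assumed to come from a segmentation model, which is not run here).
    """
    if frame.shape[:2] != human_mask.shape:
        raise ValueError("mask and frame must share height and width")
    out = frame.copy()                      # leave the original frame untouched
    out[human_mask.astype(bool)] = fill_value
    return out

# Toy example: a uniform gray frame with a "human" in the top-left corner.
frame = np.full((4, 4, 3), 200, dtype=np.uint8)
human_mask = np.zeros((4, 4), dtype=bool)
human_mask[:2, :2] = True

cleaned = remove_human(frame, human_mask)
```

After this step, only the tool and the scene remain visible in `cleaned`, which is the kind of input a tool-centric policy would train on.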

This “tool-centric” perspective is the secret ingredient. It means the robot isn’t trying to copy human hand motions, but is instead learning the exact trajectory and orientation of the tool itself. This allows the skill to transfer between different robots, regardless of how their arms or cameras are configured.
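One way to see why a tool-centric representation transfers across cameras and robots is to write the tool's pose relative to the task rather than relative to any particular camera. The sketch below is an illustration under stated assumptions, not the paper's implementation: the frame names (`cam`, `task`, `base`, `gripper`), the helper `make_pose`, and all numeric values are hypothetical, and the tool is assumed to be rigidly attached to the gripper.

```python
import numpy as np

def make_pose(yaw_rad: float, translation) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a rotation about z and a translation."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T

def tool_in_task_frame(T_cam_task: np.ndarray, T_cam_tool: np.ndarray) -> np.ndarray:
    """Express the tool pose relative to the task frame, removing the camera from the picture."""
    return np.linalg.inv(T_cam_task) @ T_cam_tool

def gripper_target(T_base_task, T_task_tool, T_gripper_tool) -> np.ndarray:
    """Gripper pose (in the robot base frame) that places a rigidly held tool at the desired pose."""
    return T_base_task @ T_task_tool @ np.linalg.inv(T_gripper_tool)

# The same tool motion seen from two differently placed cameras (made-up values).
T_task_tool_true = make_pose(0.3, [0.10, 0.00, 0.20])
T_camA_task = make_pose(1.0, [0.5, -0.2, 1.0])
T_camB_task = make_pose(-0.7, [-0.3, 0.4, 0.8])
T_camA_tool = T_camA_task @ T_task_tool_true
T_camB_tool = T_camB_task @ T_task_tool_true

# Both cameras yield the same tool-centric pose.
from_A = tool_in_task_frame(T_camA_task, T_camA_tool)
from_B = tool_in_task_frame(T_camB_task, T_camB_tool)

# Any robot that knows where the task is and how it holds the tool can reproduce the pose.
T_base_task = make_pose(-0.5, [0.4, 0.1, 0.0])
T_gripper_tool = make_pose(0.0, [0.0, 0.0, 0.15])
T_base_gripper = gripper_target(T_base_task, from_A, T_gripper_tool)
```

Because `from_A` and `from_B` agree, the learned target does not depend on where the camera sat, and `gripper_target` only needs each robot's own tool-to-gripper offset to turn that target into a motion.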

We tested this on five tasks: hammering a nail, scooping a meatball, flipping food in a pan, balancing a wine bottle, and even kicking a soccer ball into a goal. These are not simple pick-and-place jobs; they require speed, precision, and adaptability. Compared to traditional teleoperation methods, Tool-as-Interface achieved 71% higher success rates and gathered training data 77% faster.

One of my favorite tests involved a robot scooping meatballs while a human tossed in more mid-task. The robot didn’t hesitate; it just adapted. In another, it flipped a loose egg in a pan, a notoriously tricky move for teleoperated robots.

“Our approach was inspired by the way children learn, which is by watching adults,” said my colleague and lead author Haonan Chen. “They don’t need to operate the same tool as the person they’re watching; they can practice with something similar. We wanted to know if we could mimic that ability in robots.”

These results point toward something bigger than just better lab demos. By removing the need for expert operators or specialized hardware, we can imagine robots learning from smartphone videos, YouTube clips, or even crowdsourced footage.

“Despite a lot of hype around robots, they are still limited in where they can reliably operate and are generally much worse than humans at most tasks,” said Professor Katie Driggs-Campbell, who leads our lab.

“We’re interested in designing frameworks and algorithms that will enable robots to easily learn from people with minimal engineering effort.”

Of course, there are still challenges. Right now, the system assumes the tool is rigidly fixed to the robot’s gripper, which isn’t always true in real life. It also sometimes struggles with 6D pose estimation errors, and synthesized camera views can lose realism if the angle shift is too extreme.

In the future, we want to make the perception system more robust, so that a robot could, for example, watch someone use one kind of pen and then apply that skill to pens of different shapes and sizes.

Even with these limitations, I think we’re seeing a profound shift in how robots can learn, away from painstaking programming and toward natural observation. Billions of cameras are already recording how humans use tools. With the right algorithms, those videos could become training material for the next generation of adaptable, helpful robots.

This research, which was honored with the Best Paper Award at the ICRA 2025 Workshop on Foundation Models and Neural-Symbolic (NeSy) AI for Robotics, is a critical step toward unlocking that potential, transforming the vast ocean of human-recorded video into a global training library for robots that can learn and adapt as naturally as a child does.

This story is part of Science X Dialog, where researchers can report findings from their published research articles. Visit this page for information about Science X Dialog and how to participate.

More information:

Haonan Chen et al, Tool-as-Interface: Learning Robot Policies from Human Tool Usage through Imitation Learning, arXiv (2025). DOI: 10.48550/arxiv.2504.04612

Cheng Zhu is second author of Tool-as-Interface: Learning Robot Policies from Human Tool Usage through Imitation Learning, UIUC BS Computer Engineering, UPenn MSE ROBO

Citation:

Robots can now learn to use tools—just by watching us (2025, August 23)

retrieved 23 August 2025 from https://techxplore.com/news/2025-08-robots-tools.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.

Tech

Lenovo’s Latest Wacky Concepts Include a Laptop With a Built-In Portable Monitor

Do you like having a second screen with your computer setup? What if your laptop could carry a second screen for you? That’s the idea behind Lenovo’s latest proof of concept, the ThinkBook Modular AI PC, announced at Mobile World Congress in Barcelona.

Lenovo is never shy about showing off wacky, weird concept laptops. We’ve seen a PC with a transparent screen, one with a rollable OLED screen, a swiveling screen, and another with a flippy screen. At CES earlier this year, the company showed off a gaming laptop with a display that expands at the push of a button. Sometimes, these concepts turn into real products that go on sale (often in limited quantities).

At MWC 2026, Lenovo trotted out three concepts. While it’s unclear whether any of them will become real, purchasable products, there’s some unique utility here, and a peek at how computing experiences could change in the future.

A Laptop With a Built-In Portable Screen

As someone with a multi-screen setup at home and a fondness for portable monitors, the ThinkBook Modular AI PC appeals to me the most. At first glance, it looks like a normal laptop. Take a look behind, and you’ll notice there’s a second screen magnetically hanging off the back of the laptop, like a koala carrying a baby on its back.

The screen is connected to the laptop using pogo-pin connectors, so you can use it in this state to display content to people in front of you, say, if you were making a presentation during a meeting. Alternatively, you can pop this second screen off, remove a hidden kickstand resting under the laptop, and magnetically attach it to the 14-inch screen so that you have a traditional portable monitor experience. (You’ll need to connect this to the laptop via a USB-C cable in this orientation.)

If you don’t have the desk space for that orientation, you can always remove the keyboard from the base and pop the second screen there—it’ll auto-connect to the laptop via the pogo pins, and you’ll be able to use the Bluetooth keyboard to type on a dual-screen setup that resembles the Asus ZenBook Duo. The whole system is a fantastically portable method of improving productivity on the go, and the laptop isn’t too thick or cumbersome.

Tech

The 5 Big ‘Known Unknowns’ of Donald Trump’s New War With Iran

More recently, Iran has been a regular adversary in cyberspace—and while it hasn’t demonstrated quite the acuity of Russia or China, Iran is “good at finding ways to maximize the impact of their capabilities,” says Jeff Greene, the former executive assistant director of cybersecurity at CISA. Iran, in particular, famously was responsible for a series of distributed-denial-of-service attacks on Wall Street institutions that worried financial markets, and its 2012 attack on Saudi Aramco and Qatar’s Rasgas marked some of the earliest destructive infrastructure cyberattacks.

Today, surely, Iran is weighing which of these tools, networks, and operatives it might press into a response—and where, exactly, that response might come. Given its history of terror campaigns and cyberattacks, there’s no reason to think that Iran’s retaliatory options are limited to missiles alone—or even to the Middle East at all.

Which leads to the biggest known unknown of all:

5. How does this end? There’s an apocryphal story about a 1970s conversation between Henry Kissinger and a Chinese leader (it’s told variously as either Mao Tse-tung or Zhou Enlai). Asked about the legacy of the French Revolution, the Chinese leader quipped, “Too soon to tell.” The story almost surely didn’t happen, but it’s useful in speaking to a larger truth, particularly in societies as old as the 2,500-year-old Persian empire: History has a long tail.

As much as Trump (and the world) might hope that democracy breaks out in Iran this spring, the CIA’s official assessment in February was that if Khamenei were killed, he would likely be replaced by hardline figures from the Islamic Revolutionary Guard Corps. And indeed, the fact that Iran’s retaliatory strikes against other targets in the Middle East continued throughout Saturday, even after the death of many senior regime officials (including, purportedly, the defense minister), belied the hope that the government was close to collapse.

The post-World War II history of Iran has surely hinged on three moments and their intersections with American foreign policy: the 1953 CIA coup, the 1979 revolution that removed the shah, and now the 2026 US attacks that have killed its supreme leader. In his recent bestselling book King of Kings, on the fall of the shah, longtime foreign correspondent Scott Anderson writes of 1979, “If one were to make a list of that small handful of revolutions that spurred change on a truly global scale in the modern era, that caused a paradigm shift in the way the world works, to the American, French, and Russian Revolutions might be added the Iranian.”

It is hard not to think today that we are living through a moment equally important in ways that we cannot yet fathom or imagine—and that we should be especially wary of any premature celebration or declarations of success given just how far-reaching Iran’s past turmoils have been.

Defense Secretary Pete Hegseth has repeatedly bragged about how he sees the military and Trump administration’s foreign policy as sending a message to America’s adversaries: “F-A-F-O,” playing off the vulgar colloquialism. Now, though, it’s the US doing the “F-A” portion in the skies over Iran—and the long arc of Iran’s history tells us that we’re a long, long way from the “F-O” part where we understand the consequences.


Tech

This Backyard Smoker Delivers Results Even a Pitmaster Would Approve Of

While my love of smoked meats is well-documented, my own journey into actually tending the fire started just last spring when I jumped at the opportunity to review the Traeger Woodridge Pro. When Recteq came calling with a similar offer to check out the Flagship 1600, I figured it would be a good way to stay warm all winter.

While the two smokers have a lot in common, the Recteq definitely feels like an upgrade from the Traeger I’ve been using. Not only does it have nearly twice the cooking space, but the huge pellet hopper, rounded barrel, and proper smokestack help me feel like a real pitmaster.

The trade-off is losing some of the usability features that make the Woodridge Pro a great first smoker. The setup isn’t quite as simple, and the larger footprint and less ergonomic design require a little more experience or patience. With both options, excellent smoked meat is just a few button presses away, but speaking as someone with both in their backyard, I’ve been firing up the Recteq more often.

Getting Settled

Photograph: Brad Bourque

Setting up the Recteq wasn’t as time-consuming as the Woodridge, but it was more difficult to manage on my own. Some of the steps, like attaching the bull horns to the lid, or flipping the barrel onto its stand, would really benefit from a patient friend or loved one. Like most smokers, you’ll need to run a burn-in cycle at 400 degrees Fahrenheit to make sure there’s nothing left over from manufacturing or shipping. Given the amount of setup time and need to cool down the smoker after, I would recommend setting this up Friday afternoon if you want to smoke on a Saturday.
